Paper:

A Socially Interactive Robot Partner Using Content-Based Conversation System for Information Support

Jinseok Woo*, János Botzheim**, and Naoyuki Kubota*

*Tokyo Metropolitan University

6-6 Asahigaoka, Hino, Tokyo 191-0065, Japan

**Department of Mechatronics, Optics and Mechanical Engineering Informatics, Budapest University of Technology and Economics

4-6 Bertalan Lajos Street, Budapest 1111, Hungary

The development of robot partners that support human life has been growing for many years. One main feature to consider in developing such robots is the conversation system. In this study, the conversation system of iPhonoid-C, a robot partner based on a smart device, is introduced. Conversation is a form of communication in which two or more people exchange words and information. Therefore, an important criterion for judging the effectiveness of an interaction is whether the robot provides an appropriate amount of information. In this research, we focus on a time-dependent utterance system that adjusts the amount of conversation based on Grice's maxim of quantity. Using Grice's theory, the robot can tailor its communication by selecting a Grice value that corresponds to the human's condition, and thereby control the amount of information it communicates to suit the human's situation. An experimental result with the robot partner is presented to validate the proposed time-dependent conversation system.
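The abstract does not specify how a Grice value is turned into a concrete amount of speech. The following is a minimal illustrative sketch, not the authors' formulation. It assumes that a Grice value g in [0, 1] scales the speaking time available to the robot, and that each candidate content item carries a priority and an estimated speaking duration. The names `ContentItem`, `select_utterances`, `priority`, and `duration_s`, as well as the greedy selection rule, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ContentItem:
    text: str
    priority: float    # hypothetical: higher means more important to convey
    duration_s: float  # hypothetical: estimated time to speak the item, in seconds


def select_utterances(items, grice_value, available_time_s):
    """Choose content items whose total speaking time fits within
    grice_value * available_time_s (an assumed quantity rule)."""
    if not 0.0 <= grice_value <= 1.0:
        raise ValueError("grice_value must be in [0, 1]")
    budget = grice_value * available_time_s
    chosen, used = [], 0.0
    # Greedy: visit items from most to least important and keep each one
    # that still fits in the remaining time budget.
    for item in sorted(items, key=lambda it: it.priority, reverse=True):
        if used + item.duration_s <= budget:
            chosen.append(item)
            used += item.duration_s
    return chosen


if __name__ == "__main__":
    # Hypothetical weather-related content items for illustration only.
    items = [
        ContentItem("Today's weather is sunny.", 0.9, 3.0),
        ContentItem("The high will be 25 degrees.", 0.7, 3.5),
        ContentItem("Pollen levels are moderate.", 0.4, 3.0),
        ContentItem("Tomorrow may bring rain.", 0.6, 2.5),
    ]
    for g in (0.0, 0.5, 1.0):
        texts = [it.text for it in select_utterances(items, g, 12.0)]
        print(g, texts)
```

With 12 s available and a Grice value of 0.5, the sketch keeps the two highest-priority items that fit in the 6 s budget ("Today's weather is sunny." and "Tomorrow may bring rain."). A value of 1.0 lets all four items through, and 0.0 suppresses speech entirely. A priority-first greedy rule is only one plausible way to realize a quantity constraint. A user model that estimates the human's condition could supply the Grice value.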

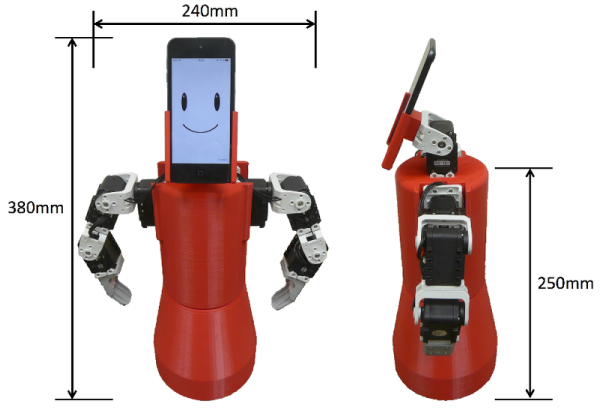

The robot partner iPhonoid-C


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
