Paper:

Natural Language Generation Using Monte Carlo Tree Search

Kaori Kumagai*1, Ichiro Kobayashi*1, Daichi Mochihashi*2, Hideki Asoh*3, Tomoaki Nakamura*4, and Takayuki Nagai*4

*1Advanced Sciences, Graduate School of Humanities and Sciences, Ochanomizu University

2-1-1 Ohtsuka, Bunkyo-ku, Tokyo 112-8610, Japan

*2The Institute of Statistical Mathematics

10-3 Midori-cho, Tachikawa city, Tokyo 190-0014, Japan

*3National Institute of Advanced Industrial Science and Technology (AIST)

1-1-1 Umezono, Tsukuba, Ibaraki 305-8560, Japan

*4The University of Electro-Communications

1-5-1 Chofugaoka, Chofu, Tokyo 182-8585, Japan

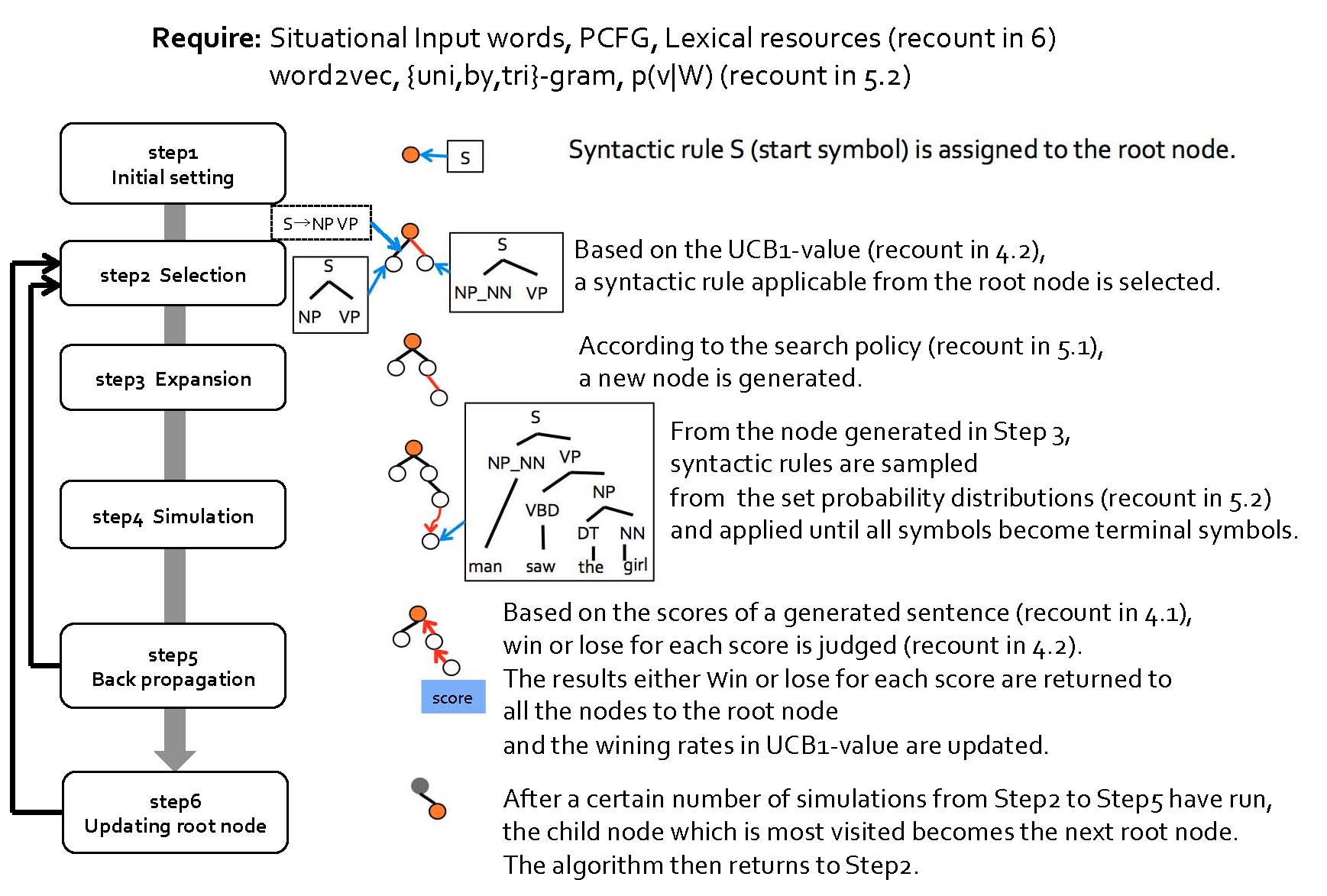

We propose a method of simulation-based natural language generation that accounts for both building a correct syntactic structure and reflecting the given situational information as input for the generated sentence. We employ the Monte Carlo tree search for this nontrivial search problem in simulation, using context-free grammar rules as search operators. We evaluated numerous generation results from two aspects: the appropriateness of sentence contents for the given input information and the sequence of words in a generated sentence. Furthermore, in order to realize an efficient search in simulation, we introduced procedures to unfold syntactic structures from words strongly related to the given situational information, and increased the probability of selecting those related words. Through a numbers of experiments, we confirmed that our method can effectively generate a sentence with various words and phrasings.

MCTS algorithm for NLG

- [1] K. Kumagai, I. Kobayashi, D. Mochihashi, H. Asoh, T. Nakamura, and T. Nagai, “Human-like Natural Language Generation Using Monte Carlo Tree Search,” Proc. of the INLG 2016 Workshop on Computational Creativity in Natural Language Generation, pp. 11-18, 2016.

- [2] L. Kocsis and C. Szepesvari, “Bandit based Monte Carlo planning,” ECML 2006, pp. 282-293, 2006.

- [3] C. Browne, E. Powley, D. Whitehouse, S. Lucas, P. I. Cowling, P. Rohlfshagen, S. Tavener, D. Perez, S. Samothrakis, and S. Colton, “Survey of Monte Carlo Tree Search Methods,” Technical Report 1, 2012.

- [4] E. Reiter and R. Dale, “Building applied natural language generation systems,” Nat. Lang. Eng., Vol.3, No.1, pp. 57-87, 1997.

- [5] A. Koller and M. Stone, “Sentence generation as a planning problem,” Int. Natural Language Generation Workshop, Vol.12, pp. 17-24, 2007.

- [6] D. Bauer and A. Koller, “Sentence generation as planning with probabilistic LTAG,” Proc. of the 10th Int. Workshop on Tree Adjoining Grammar and Related Formalisms, 2010.

- [7] O. Lemon, “Adaptive natural language generation in dialogue using reinforcement learning,” Workshop on the Semantics and Pragmatics of Dialogue (SEMDIAL), pp. 141-148, 2008.

- [8] O. Lemon, “Learning what to say and how to say it: joint optimization of spoken dialogue management and natural language generation,” Computer Speech and Language, Vol.25, No.2, pp. 210-221, 2011.

- [9] V. Rieser and O. Lemon, “Natural Language Generation as Planning under Uncertainty for Spoken Dialogue Systems,” EACL 2009, pp. 683-691, 2009.

- [10] R. S. Sutton and A. G. Barto, “Reinforcement Learning: An Introduction,” MIT Press, 1998.

- [11] N. McKinley and S. Ray, “A Decision-Theoretic Approach to Natural Language Generation,” Proc. of the 52nd Annual Meeting of the Association for Computational Linguistics, Vol.1: Long Papers, pp. 552-561, 2014.

- [12] J. Pfeil and S. Ray, “Scaling a Natural Language Generation System,” Proc. of the 54th Annual Meeting of the Association for Computational Linguistics, Vol.1: Long Papers, pp. 1148-1157, 2016.

- [13] Y. Bengio, R. Ducharme, P. Vincent, and C. Janvin, “A Neural Probabilistic Language Model,” J. of Machine Learning Research, Vol.3, pp. 1137-1155, 2003.

- [14] T.-H. Wen, M. Gašić, D. Kim, N. Mrkšić, P.-H. Su, D. Vandyke, and S. Young, “Stochastic Language Generation in Dialogue using Recurrent Neural Networks with Convolutional Sentence Reranking,” Proc. of the 16th Annual Meeting of the Special Interest Group on Discourse and Dialogue (SIGDIAL), 2015.

- [15] T.-H. Wen, M. Gašić, N. Mrkšić, P.-H. Su, D. Vandyke, and S. Young, “Semantically Conditioned LSTM-based Natural Language Generation for Spoken Dialogue Systems,” Proc. of the 2015 Conf. on Empirical Methods in Natural Language Processing (EMNLP), 2015.

- [16] T.-H. Wen, M. Gašić, N. Mrkšić, L. M. Rojas-Barahona, P.-H. Su, D. Vandyke, and S. Young, “Multi-domain Neural Network Language Generation for Spoken Dialogue Systems,” Proc. of the 2016 Conf. on North American Chapter of the Association for Computational Linguistics (NAACL), 2016.

- [17] Y. Liu, Y. Zhang, W. Che, and B. Qin, “Transition-Based Syntactic Linearization,” NAACL 2015, pp. 113-122, 2015.

- [18] D. Silver, A. Huang, C. J. Maddison, A. Guez, L. Sifre, G. Driessche, J. Schrittwieser, I. Antonoglou, V. Panneershelvam, M. Lanctot, S. Dieleman, D. Grewe, J. Nham, N. Kalchbrenner, I. Sutskever, T. Lillicrap, M. Leach, K. Kavukcuoglu, T. Graepel, and D. Hassabis, “Mastering the game of Go with deep neural networks and tree search,” Nature, Vol.529, pp. 484-489, 2016.

- [19] M. N. Katehakis and A. F. Veinott, “The Multi-Armed Bandit Problem: Decomposition and Computation,” Mathematics of Operations Research, Vol.12, No.2, pp. 262-268, 1987.

- [20] P. Auer, N. Cesa-Bianchi, and P. Fischer, “Finite-time analysis of the multi-armed bandit problem,” Machine Learning, Vol.47, pp. 235-256, 2002.

- [21] M. P. Marcus, B. Santorini, and M. A. Marcinkiewicz, “Building a Large Annotated Corpus of English: The Penn Treebank,” Computational Linguistics, Vol.19, No.2, pp. 313-330, 1993.

- [22] R. Kneser and H. Ney, “Improved backing-off for n-gram language modeling,” ICASSP, Vol.1, pp. 181-184, 1995.

- [23] J. H. Lau, A. Clark, and S. Lappin, “Unsupervised Prediction of Acceptability Judgements,” ACL 2015, Vol.53, pp. 15-1000, 2015.

- [24] D. Blei, A. Ng, and M. Jordan, “Latent Dirichlet Allocation,” J. of Machine Learning Research, Vol.3, pp. 993-1022, 2003.

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 Internationa License.

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 Internationa License.