Paper:

A Development of Robotic Scrub Nurse System - Detection for Surgical Instruments Using Faster Region-Based Convolutional Neural Network –

Akito Nakano† and Kouki Nagamune

Graduate School of Engineering, University of Fukui

3-9-1 Bunkyo, Fukui, Fukui 910-8507, Japan

†Corresponding author

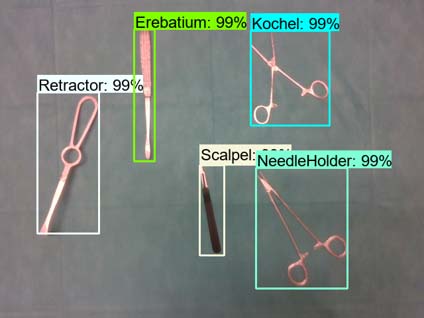

Japan currently faces a shortage of nurses, and a further shortage of 3,000–130,000 nurses is expected. Scrub nurses, who work in the operating room and whose main job is to assist surgeons, are also in short supply. Because the job is difficult, scrub nurses have a high turnover rate. Systems that assist scrub nurses are therefore needed. The purpose of this study was to develop a robotic scrub nurse. As a first step, a detection system for surgical instruments was developed using the “Faster Region-Based Convolutional Neural Network” (Faster R-CNN). In experiments, the system was evaluated on computer graphics (CG) model images and 3D-printed model images, and it showed high accuracy. Consequently, Faster R-CNN can be considered suitable for detecting surgical instruments.
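The abstract does not state how detection accuracy was scored. As an illustration only, the sketch below shows one common convention for object detectors such as Faster R-CNN: a predicted bounding box counts as correct when its class label matches the ground truth and its intersection over union (IoU) with the ground-truth box reaches a threshold. The `Box` type, the `is_correct` helper, the 0.5 threshold, and the instrument label are all assumptions made for this example and are not taken from the paper.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned box in pixels: (x1, y1) top-left, (x2, y2) bottom-right."""
    x1: float
    y1: float
    x2: float
    y2: float


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes; 0.0 when they do not overlap."""
    inter_w = max(0.0, min(a.x2, b.x2) - max(a.x1, b.x1))
    inter_h = max(0.0, min(a.y2, b.y2) - max(a.y1, b.y1))
    inter = inter_w * inter_h
    area_a = (a.x2 - a.x1) * (a.y2 - a.y1)
    area_b = (b.x2 - b.x1) * (b.y2 - b.y1)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_correct(pred_label: str, pred_box: Box,
               true_label: str, true_box: Box,
               threshold: float = 0.5) -> bool:
    """Hypothetical scoring rule: labels match and IoU meets the threshold."""
    return pred_label == true_label and iou(pred_box, true_box) >= threshold


if __name__ == "__main__":
    # "scissors" is a placeholder label, not a class listed in the abstract.
    predicted = Box(0, 0, 10, 10)
    annotated = Box(1, 1, 10, 10)
    print(iou(predicted, annotated))
    print(is_correct("scissors", predicted, "scissors", annotated))
```

Under this convention, accuracy on a test set would be the fraction of annotated instruments for which `is_correct` holds; other protocols, such as mean average precision, are equally plausible.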

Detection system for surgical instruments


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
