Paper:

Enhancement of the Individual Selectness Using Local Spatial Weighting for Immune Cells

Shoya Kusunose*, Yuki Shinomiya*, Takashi Ushiwaka**, Nagamasa Maeda***, and Yukinobu Hoshino*

*Kochi University of Technology

185 Miyanokuchi, Tosayamada, Kami, Kochi 782-8502, Japan

**Kagoshima University

8-35-1 Sakuragaoka, Kagoshima, Kagoshima 890-8544, Japan

***Kochi Medical School

Kohasu, Oko-cho, Nankoku, Kochi 783-8505, Japan

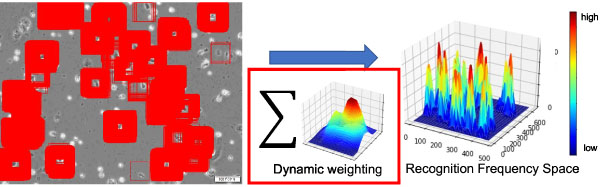

This paper focuses on the analysis of immune cell activity to support medical workers. One of the major issues in the activity analysis of immune cells is that the recognition frequency space (RFS) selects a region containing multiple neighboring cells as a single cell. This study focuses on the locality of immune cell features and weights the RFS with a dynamic weighting method, whereas the literature uses a Gaussian distribution. The analysis compared several well-known methods: final feature maps, class activation mapping (CAM), gradient-weighted class activation mapping (Grad-CAM), Grad-CAM++, and Eigen-CAM. The results show that densely packed immune cells are correctly selected by CAM, Grad-CAM, Grad-CAM++, and Eigen-CAM. These methods are also stable with respect to the threshold used to select tracking targets. A higher threshold makes the selection robust, whereas a lower threshold is useful for efficiently analyzing trends of multiple cells across a whole frame.
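For reference, the standard definitions of three of the compared maps are summarized below, in notation chosen here rather than taken from this paper. $A^k$ denotes the $k$-th of $K$ feature maps (size $u \times v$) of the last convolutional layer and $y^c$ the network's score for class $c$. Any of these maps could, in principle, serve as the per-patch weight used in place of a fixed Gaussian.

```latex
% CAM: requires global average pooling followed by a linear layer with weights w_k^c
M^{c}_{\text{CAM}}(i,j) = \sum_{k=1}^{K} w_k^{c} A^{k}_{ij}

% Grad-CAM: channel weights are spatially averaged gradients, with Z = uv
\alpha_k^{c} = \frac{1}{Z} \sum_{i=1}^{u} \sum_{j=1}^{v} \frac{\partial y^{c}}{\partial A^{k}_{ij}},
\qquad
M^{c}_{\text{Grad-CAM}} = \mathrm{ReLU}\Big( \sum_{k=1}^{K} \alpha_k^{c} A^{k} \Big)

% Eigen-CAM: stack the activations as O (uv x K), factorize O = U \Sigma V^T,
% and project onto the first right singular vector v_1 (no class score needed)
M_{\text{Eigen-CAM}} = \mathbf{O}\,\mathbf{v}_1 \in \mathbb{R}^{uv}, \quad \text{reshaped to } u \times v
```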

Proposed approach: Dynamic weighting, such as activation mapping, is used to weight the RFS instead of a Gaussian distribution. The weighting should suit each patch image, so the weight can be set uniquely and appropriately for each patch.
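The weighting idea above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes the RFS is built by adding a weight map for every patch that a classifier labels as containing a cell, normalizing the sum, and taking each connected region above a threshold as one tracking target. `peaked_weight` is a hypothetical stand-in for a per-patch activation map such as CAM or Grad-CAM (in practice that map would come from the trained network), and the patch list and cell positions are synthetic.

```python
import numpy as np
from collections import deque


def gaussian_weight(size: int, sigma: float) -> np.ndarray:
    """Fixed isotropic Gaussian centred on the patch (baseline weighting), peak = 1."""
    ax = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    w = np.outer(g, g)
    return w / w.max()


def peaked_weight(cells, sigma: float):
    """HYPOTHETICAL stand-in for a per-patch activation map (CAM, Grad-CAM, ...):
    Gaussian peaks at known cell centres that fall inside the patch."""
    def weight_fn(top: int, left: int, size: int) -> np.ndarray:
        yy, xx = np.mgrid[0:size, 0:size]
        w = np.zeros((size, size))
        for cy, cx in cells:
            if top <= cy < top + size and left <= cx < left + size:
                d2 = (yy - (cy - top)) ** 2 + (xx - (cx - left)) ** 2
                w += np.exp(-d2 / (2.0 * sigma ** 2))
        return w
    return weight_fn


def accumulate_rfs(frame_shape, patches, weight_fn) -> np.ndarray:
    """Add a weight map for every patch classified as 'cell', then normalise to [0, 1].

    patches: (top, left, size, is_cell) tuples; each patch must lie inside the frame.
    weight_fn(top, left, size) must return a (size, size) array.
    """
    rfs = np.zeros(frame_shape, dtype=float)
    for top, left, size, is_cell in patches:
        if is_cell:
            rfs[top:top + size, left:left + size] += weight_fn(top, left, size)
    peak = rfs.max()
    return rfs / peak if peak > 0 else rfs


def select_targets(rfs: np.ndarray, threshold: float):
    """Label 4-connected regions with rfs >= threshold; each region is one target."""
    mask = rfs >= threshold
    labels = np.zeros(mask.shape, dtype=int)
    count = 0
    for r, c in zip(*np.nonzero(mask)):
        if labels[r, c]:
            continue  # pixel already belongs to an earlier region
        count += 1
        labels[r, c] = count
        queue = deque([(r, c)])
        while queue:  # breadth-first flood fill of the current region
            y, x = queue.popleft()
            for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                if (0 <= ny < mask.shape[0] and 0 <= nx < mask.shape[1]
                        and mask[ny, nx] and not labels[ny, nx]):
                    labels[ny, nx] = count
                    queue.append((ny, nx))
    return labels, count


if __name__ == "__main__":
    # Two synthetic cells 6 px apart, each seen by one positive 8x8 patch.
    patches = [(0, 0, 8, True), (0, 4, 8, True), (10, 10, 8, False)]
    fixed = accumulate_rfs((20, 20), patches,
                           lambda top, left, size: gaussian_weight(size, 2.0))
    dynamic = accumulate_rfs((20, 20), patches, peaked_weight([(4, 3), (4, 9)], 0.5))
    print(select_targets(fixed, 0.5)[1])    # 1: the two cells merge into one region
    print(select_targets(dynamic, 0.5)[1])  # 2: each cell is selected separately
```

In this toy frame the fixed Gaussian spreads weight across the overlap of the two positive patches, so the thresholded RFS forms a single region, while the per-patch peaked weights keep the two neighboring cells apart. The `threshold` argument of `select_targets` is where the robustness-versus-coverage trade-off described in the abstract would show up.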

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.