Research Paper:

Mobile-YOLO: A Lightweight YOLO for Road Crack Detection on Mobile Devices

Anjun Yu*,**, Yixiang Gao**,†, Yonghua Xiong**, Wei Liu**, and Jinhua She**,***

*Jiangxi Ganyue Expressway Co., Ltd.

No.199 Torch Street, High-tech Zone, Nanchang, Jiangxi 330000, China

**School of Automation, China University of Geosciences

No.388 Lumo Road, Hongshan District, Wuhan, Hubei 430074, China

†Corresponding author

***School of Engineering, Tokyo University of Technology

1404-1 Katakuramachi, Hachioji, Tokyo 192-0982, Japan

Road crack detection is critical for ensuring road traffic safety, extending the service life of roads, and improving the efficiency of road maintenance management. However, when deployed on mobile devices with limited computational resources, the baseline YOLOv8 model faces several challenges: high network complexity, heavy computational demands, and slow inference. To address these issues, this paper proposes Mobile-YOLO, a model tailored for mobile terminals. By incorporating the universal inverted bottleneck module and the multi-query attention mechanism, the model significantly reduces network complexity while improving computational efficiency for mobile deployment, making it well suited to real-time detection in embedded systems and vehicle-mounted patrol devices. Experimental results show that, compared with the baseline YOLOv8, Mobile-YOLO improves detection accuracy by 4.1%, mAP50 by 2.76%, and mAP50-95 by 2.56%, and achieves an inference speed of 113 fps, outperforming other lightweight models. Experiments on the NVIDIA Jetson Nano platform further validate its inference performance and low false positive rate, providing an efficient solution for real-world road crack detection in resource-constrained environments.
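To illustrate why multi-query attention lowers network complexity, the following is a minimal sketch of generic multi-query attention, in which every query head shares a single key projection and a single value projection. It is not the implementation used in Mobile-YOLO; the function names (`multi_query_attention`, `kv_projection_params`), the plain-list matrix layout, and the toy dimensions are all assumptions made for illustration.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def multi_query_attention(x, w_q_heads, w_k, w_v):
    """Generic multi-query attention (illustrative, hypothetical helper).

    x:          tokens x dim input features
    w_q_heads:  one (dim x head_dim) query projection per head
    w_k, w_v:   a single (dim x head_dim) key / value projection
                shared by all heads
    Returns a tokens x (num_heads * head_dim) matrix.
    """
    k = matmul(x, w_k)                      # computed once, reused by every head
    v = matmul(x, w_v)
    k_t = [list(col) for col in zip(*k)]    # transpose of k
    scale = 1.0 / math.sqrt(len(w_k[0]))
    heads = []
    for w_q in w_q_heads:
        q = matmul(x, w_q)
        scores = matmul(q, k_t)
        weights = [softmax([s * scale for s in row]) for row in scores]
        heads.append(matmul(weights, v))
    # Concatenate the per-head outputs along the feature axis.
    return [sum((head[i] for head in heads), []) for i in range(len(x))]


def kv_projection_params(dim, head_dim, num_heads, shared):
    """Weight count of the key and value projections (biases ignored)."""
    per_head = 2 * dim * head_dim
    return per_head if shared else per_head * num_heads
```

Under these assumptions, sharing the key and value projections divides their weight count by the number of heads: with `dim=64`, `head_dim=16`, and four heads, `kv_projection_params` returns 2048 for the shared case against 8192 for separate per-head projections. The query projections and the attention computation itself are unchanged.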

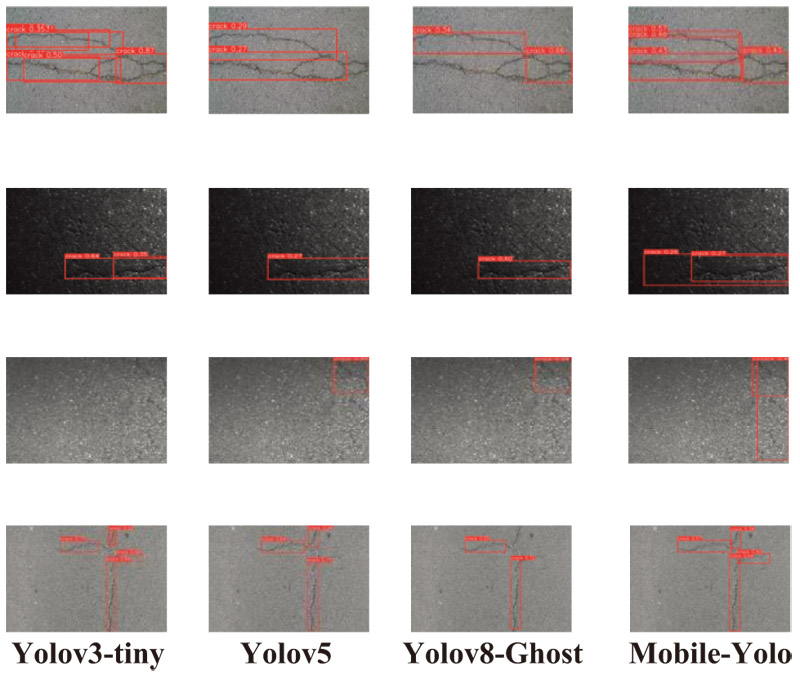

Detection results


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
