Research Paper:

A Method for Recognizing Entities in Power News Texts Based on Dependency Syntactic Parsing

Yun Wu*, Xinru Liu**, Yan Du***, Jieming Yang*, Zhenhong Liu*,†, Kai Yang*, and Ziyi Wang*

*School of Computer Science, Northeast Electric Power University

No.169 Changchun Road, Chuanying District, Jilin, Jilin 132012, China

†Corresponding author

**Shandong University of Finance and Economics

40 Shungeng Road, Shizhong District, Jinan, Shandong 250014, China

***Jilin Meteorological Observation and Protection Center, Jilin Meteorological Service

No.176 Suizhong Road, Lvyuan District, Changchun, Jilin 130000, China

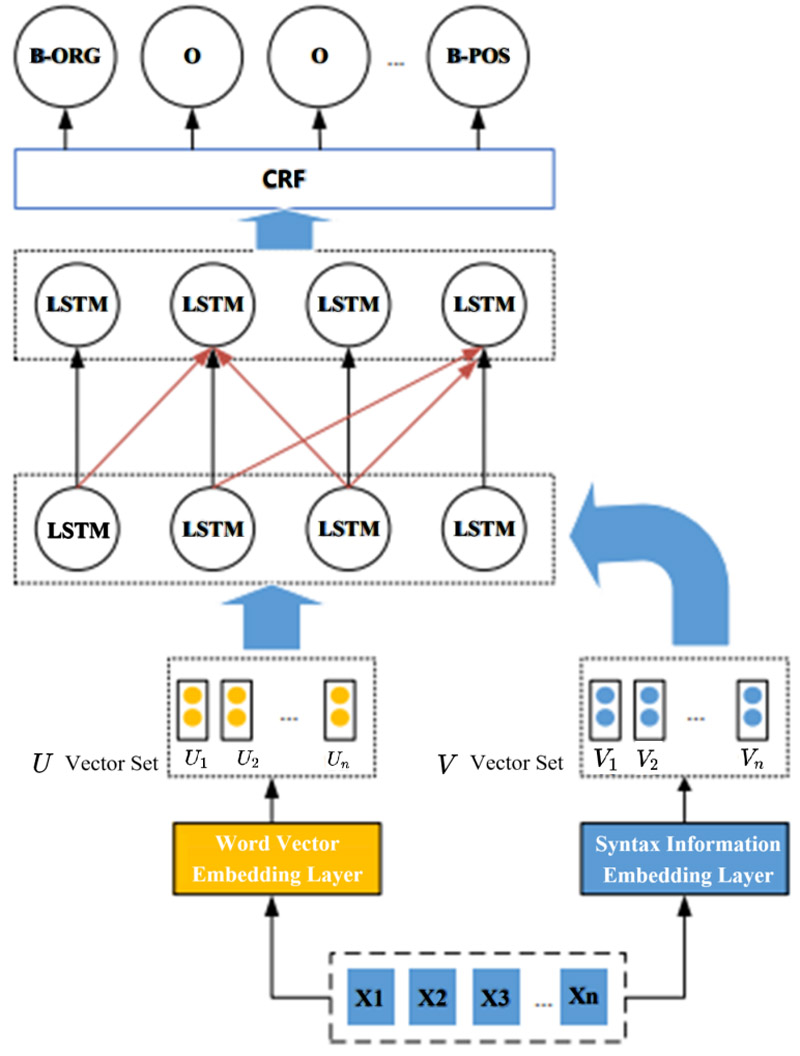

News texts in the power field contain many specialized terms, and new terms appear every year, which makes entities in these texts difficult to identify accurately with general named entity recognition (NER) methods. To address this challenge, this paper proposes an entity recognition model for power texts based on dependency syntactic parsing (SYN-BiLSTM-CRF). The model first generates word vectors for the power text and feeds them into a forward LSTM for feature extraction. In parallel, dependency syntactic parsing is performed on the power text, and the resulting syntactic information vectors are fused with the output of the forward LSTM before being fed into a backward LSTM. Incorporating these additional syntactic features enhances the model’s ability to learn inter-word dependency relations. Finally, a conditional random field (CRF) layer produces the predicted NER labels. Experiments show that the proposed SYN-BiLSTM-CRF model achieves an F1-score of 85.36% on power-related texts, 2.78 percentage points higher than the baseline BiLSTM-CRF model (82.58%). It also attains a recall of 89.06%, exceeding the recall of the BERT model (87.59%). These results indicate that the proposed method improves entity recognition accuracy in this specialized domain.
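As a quick check on the reported figures, the gains can be written out explicitly. The absolute differences follow directly from the numbers in the abstract; the relative F1 gain is derived here for illustration and is not a figure stated by the authors.

$$
\Delta F_1 = 85.36 - 82.58 = 2.78 \ \text{points}, \qquad \frac{\Delta F_1}{F_1^{\text{baseline}}} = \frac{2.78}{82.58} \approx 3.37\%
$$

$$
\Delta R = 89.06 - 87.59 = 1.47 \ \text{points}
$$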

SYN-BiLSTM-CRF model
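The data flow described in the abstract (word vectors, forward LSTM, fusion with syntactic vectors, backward LSTM, CRF decoding) can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: fusion is assumed to be concatenation, the LSTM cell is hand-written without biases, all weights are random and untrained, CRF inference is represented by plain Viterbi decoding, and every name, dimension, and tag label (`LSTMCell`, `syn_bilstm_emissions`, `TAGS`, and so on) is hypothetical.

```python
import math
import random

random.seed(0)

def rand_matrix(rows, cols):
    """Random matrix used as a stand-in for learned weights or input vectors."""
    return [[random.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

class LSTMCell:
    """Minimal LSTM cell (no biases, untrained); illustrates shapes only."""
    def __init__(self, input_size, hidden_size):
        self.hidden_size = hidden_size
        # One weight matrix per gate, applied to the concatenated [x; h] vector.
        self.w = {g: rand_matrix(hidden_size, input_size + hidden_size) for g in "ifog"}

    def step(self, x, h, c):
        xh = x + h  # list concatenation
        i = [sigmoid(z) for z in matvec(self.w["i"], xh)]    # input gate
        f = [sigmoid(z) for z in matvec(self.w["f"], xh)]    # forget gate
        o = [sigmoid(z) for z in matvec(self.w["o"], xh)]    # output gate
        g = [math.tanh(z) for z in matvec(self.w["g"], xh)]  # candidate state
        c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
        h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
        return h_new, c_new

    def run(self, inputs):
        h = [0.0] * self.hidden_size
        c = [0.0] * self.hidden_size
        outputs = []
        for x in inputs:
            h, c = self.step(x, h, c)
            outputs.append(h)
        return outputs

def syn_bilstm_emissions(word_vecs, syn_vecs, fwd, bwd, proj):
    fwd_out = fwd.run(word_vecs)                        # 1) forward LSTM over word vectors
    fused = [h + s for h, s in zip(fwd_out, syn_vecs)]  # 2) fusion (assumed: concatenation)
    bwd_out = bwd.run(fused[::-1])[::-1]                # 3) backward LSTM, right to left
    return [matvec(proj, h) for h in bwd_out]           # 4) per-tag emission scores

def viterbi(emissions, transitions):
    """Highest-scoring tag path given emission and tag-transition scores."""
    n_tags = len(transitions)
    score = list(emissions[0])
    back = []
    for emit in emissions[1:]:
        new_score, ptr = [], []
        for j in range(n_tags):
            best = max(range(n_tags), key=lambda i: score[i] + transitions[i][j])
            ptr.append(best)
            new_score.append(score[best] + transitions[best][j] + emit[j])
        score = new_score
        back.append(ptr)
    tag = max(range(n_tags), key=lambda j: score[j])
    path = [tag]
    for ptr in reversed(back):
        tag = ptr[tag]
        path.append(tag)
    return path[::-1]

# Hypothetical tag set and dimensions, chosen only for this sketch.
TAGS = ["O", "B-ENT", "I-ENT"]
EMB, SYN, HID = 4, 3, 5
tokens = ["tok1", "tok2", "tok3", "tok4"]
word_vecs = rand_matrix(len(tokens), EMB)  # stand-in for word vectors
syn_vecs = rand_matrix(len(tokens), SYN)   # stand-in for dependency-derived vectors
fwd = LSTMCell(EMB, HID)
bwd = LSTMCell(HID + SYN, HID)
proj = rand_matrix(len(TAGS), HID)
transitions = rand_matrix(len(TAGS), len(TAGS))

emissions = syn_bilstm_emissions(word_vecs, syn_vecs, fwd, bwd, proj)
pred = [TAGS[i] for i in viterbi(emissions, transitions)]
print(pred)
```

The point of the sketch is the ordering: the backward LSTM sees the forward states together with the syntactic vectors, so its input width is `HID + SYN` rather than `EMB`. In a trained model the random matrices would be learned parameters, and the syntactic vectors would come from a dependency parser rather than random numbers.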

- [1] Y. Liang, D. H. Xu, and Y. Bai, “Optimization of Combined Heat and Power System Based on Organic Rankine Cycle,” J. of Northeast Electric Power University, Vol.42, No.1, pp. 43-48, 2022 (in Chinese). https://doi.org/10.19718/j.issn.1005-2992.2022-01-0043-06

- [2] C. Jiang, Y. Wang, J. H. Hu, J. Q. Xu, M. Chen, Y. W. Wang, and G. M. Ma, “Power Entity Information Recognition Based on Deep Learning,” Power System Technology, Vol.45, No.6, pp. 2141-2149, 2021 (in Chinese). https://doi.org/10.13335/j.1000-3673.pst.2020.1678

- [3] R. S. Yang, X. Y. Zhang, and X. F. Lu, “Power Enterprise Material Business Process Based on Automatic Data Flow Research on Digital Management System,” J. of Northeast Electric Power University, Vol.41, No.6, pp. 100-104, 2021 (in Chinese). https://doi.org/10.19718/j.issn.1005-2992.2021-06-0100-05

- [4] Z. C. Yang and Y. Sun, “Preparation of Liquid Splitting Media And Their Impaction on the PV/T Systems,” J. of Northeast Electric Power University, Vol.44, No.2, pp. 35-41, 2024 (in Chinese). https://doi.org/10.19718/j.issn.1005-2992.2024-02-0035-07

- [5] Z. K. Yin, Z. W. Lin, G. H. Lv, and D. Z. Li, “Research on Fault Diagnosis and Health State Prediction of Wind Turbine Variable Pitch System Based on Data Drive,” J. of Northeast Electric Power University, Vol.43, No.5, pp. 1-11+17, 2023 (in Chinese). https://doi.org/10.19718/j.issn.1005-2992.2023-05-0001-12

- [6] Z. Huang, W. Xu, and K. Yu, “Bidirectional LSTM-CRF Models for Sequence Tagging,” arXiv preprint, arXiv:1508.01991, 2015. https://doi.org/10.48550/arXiv.1508.01991

- [7] G. Lample, M. Ballesteros, S. Subramanian, K. Kawakami, and C. Dyer, “Neural Architectures for Named Entity Recognition,” Proc. of the 2016 Conf. of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pp. 260-270, 2016. https://doi.org/10.18653/v1/N16-1030

- [8] J. Chiu and E. Nichols, “Named Entity Recognition with Bidirectional LSTM-CNNs,” Trans. of the Association for Computational Linguistics, Vol.4, pp. 357-370, 2016. https://doi.org/10.1162/tacl_a_00104

- [9] P. H. Li, R. P. Dong, Y. S. Wang, J. C. Chou, and W. Y. Ma, “Leveraging Linguistic Structures for Named Entity Recognition with Bidirectional Recursive Neural Networks,” Proc. of the 2017 Conf. on Empirical Methods in Natural Language Processing, pp. 2664-2669, 2017. https://doi.org/10.18653/v1/D17-1282

- [10] R. Ni, H. Shibata, and Y. Takama, “Entity and Entity Type Composition Representation Learning for Knowledge Graph Completion,” J. Adv. Comput. Intell. Intell. Inform., Vol.27, No.6, pp. 1151-1158, 2023. https://doi.org/10.20965/jaciii.2023.p1151

- [11] J. Devlin, M. W. Chang, K. Lee, and K. Toutanova, “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding,” Proc. of the 2019 Conf. of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Vol.1, pp. 4171-4186, 2019. https://doi.org/10.18653/v1/N19-1423

- [12] N. M. Gardazi, A. Daud, M. K. Malik, A. Bukhari, T. Alsahfi, and B. Alshemaimri, “BERT applications in natural language processing: A review,” Artificial Intelligence Review, Vol.58, Article No.166, 2025. https://doi.org/10.1007/s10462-025-11162-5

- [13] Y. Chang, L. Kong, K. Jia, and Q. Meng, “Chinese named entity recognition method based on BERT,” 2021 IEEE Int. Conf. on Data Science and Computer Application (ICDSCA), pp. 294-299, 2021. https://doi.org/10.1109/ICDSCA53499.2021.9650256

- [14] S. Shen, Z. Dong, J. Ye, L. Ma, Z. Yao, A. Gholami, M. W. Mahoney, and K. Keutzer, “Q-BERT: Hessian Based Ultra Low Precision Quantization of BERT,” 34th AAAI Conf. on Artificial Intelligence (AAAI 2020), pp. 8815-8824, 2020. https://doi.org/10.1609/aaai.v34i05.6409

- [15] V. Sanh, L. Debut, J. Chaumond, and T. Wolf, “DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter,” arXiv preprint, arXiv:1910.01108, 2019. https://doi.org/10.48550/arXiv.1910.01108

- [16] O. Levy and Y. Goldberg, “Dependency-Based Word Embeddings,” Proc. of the 52nd Annual Meeting of the Association for Computational Linguistics, Vol.2, pp. 302-308, 2014. https://doi.org/10.3115/v1/P14-2050

- [17] J. Bastings, I. Titov, W. Aziz, D. Marcheggiani, and K. Sima’an, “Graph Convolutional Encoders for Syntax-aware Neural Machine Translation,” Proc. of the 2017 Conf. on Empirical Methods in Natural Language Processing, pp. 1957-1967, 2017. https://doi.org/10.18653/v1/D17-1209

- [18] L. Yao, C. Mao, and Y. Luo, “Graph Convolutional Networks for Text Classification,” AAAI Conf. on Artificial Intelligence, pp. 7370-7377, 2019. https://doi.org/10.1609/aaai.v33i01.33017370

- [19] Z. Jie and W. Lu, “Dependency-Guided LSTM-CRF for Named Entity Recognition,” Proc. of the 2019 Conf. on Empirical Methods in Natural Language Processing and the 9th Int. Joint Conf. on Natural Language Processing, pp. 3862-3872, 2019. https://doi.org/10.18653/v1/D19-1399

- [20] M. Sun, Q. Yang, H. Wang, M. Pasquine, and I. A. Hameed, “Learning the Morphological and Syntactic Grammars for Named Entity Recognition,” Inf., Vol.13, No.2, Article No.49, 2022. https://doi.org/10.3390/info13020049

- [21] K. Chen and Z. Chen, “Entity Relation Extraction Based on Shortest Dependency Path and BERT,” J. of Southwest China Normal University (Natural Science Edition), Vol.46, No.11, pp. 56-66, 2021 (in Chinese). https://doi.org/10.13718/j.cnki.xsxb.2021.11.008

- [22] X. S. Zhang, “Research on Named Entity Recognition integrated Dependency Syntax Information,” Hebei Normal University, M.A. Thesis, 2021 (in Chinese). https://doi.org/10.27110/d.cnki.ghsfu.2021.000460

- [23] Y. M. Zhang, Z. Y. Zhu, E. T. Liu, and X. Y. Zhang, “Review Text Analysis Based on Long Short-term Memory Neural Network,” Scientific and Technological Innovation, pp. 183-184, 2020 (in Chinese).

- [24] Y. Nie, Y. Tian, Y. Song, X. Ao, and X. Wan, “Improving Named Entity Recognition with Attentive Ensemble of Syntactic Information,” Findings of the Association for Computational Linguistics: EMNLP 2020, pp. 4231-4245, 2020. https://doi.org/10.18653/v1/2020.findings-emnlp.378

- [25] Z. M. Wang, L. Sun, L. G. Sun, and R. T. Yuan, “Optimization Scheduling of CCHP Micro-Energy Network Based on Phase Change Energy Storage Thermal Resistance Model,” J. of Northeast Electric Power University, Vol.42, No.1, pp. 96-103, 2022 (in Chinese). https://doi.org/10.19718/j.issn.1005-2992.2022-01-0096-08

- [26] T. Mikolov, K. Chen, G. Corrado, and J. Dean, “Efficient Estimation of Word Representations in Vector Space,” arXiv preprint, arXiv:1301.3781, 2013. https://doi.org/10.48550/arXiv.1301.3781

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.