
Autonomous Motion Control for Exploration Robots on Rough Terrain Using Enhanced DDPG Deep Reinforcement Learning

Zijie Wang, Jiaheng Lu, and Yonghoon Ji†

Graduate School of Advanced Science and Technology, Japan Advanced Institute of Science and Technology

1-1 Asahidai, Nomi, Ishikawa 923-1292, Japan

†Corresponding author

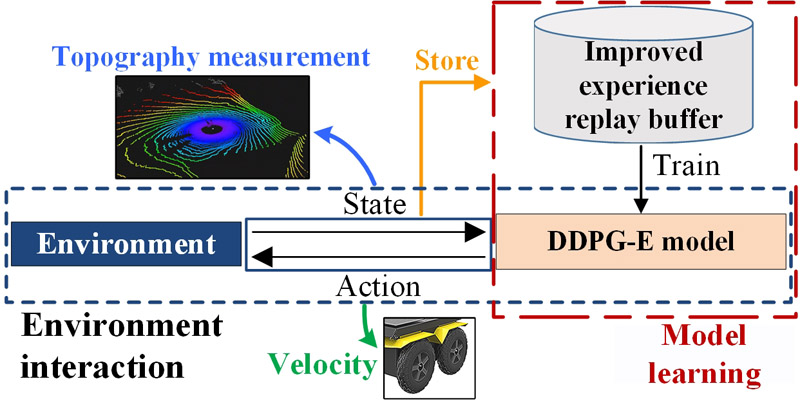

Deploying exploration robots for search and rescue, rather than relying exclusively on human-led efforts, reduces the risk of property damage and casualties from secondary disasters in complex environments. However, the effectiveness of these robots depends on environmental factors such as terrain flatness and the presence of obstacles. To address these challenges, we propose an autonomous motion control approach using a deep deterministic policy gradient-based experience replay method (DDPG-E), which enables exploration robots to navigate autonomously and safely in complex environments. The control module is built with deep reinforcement learning, so the exploration robot generates optimal motion control commands from environmental information while taking its six-degrees-of-freedom (6-DoF) pose into account. Experiments indicate that our approach not only navigates complex environments with a high rate of collision avoidance but also achieves higher accuracy and better generalization than previous methods.
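The abstract does not spell out how DDPG-E changes the replay mechanism, so the sketch below shows only the standard DDPG ingredients it builds on: a fixed-size replay buffer sampled uniformly, the critic's regression target computed with target networks, and the soft (Polyak) update of those target networks. It is not the authors' implementation. Networks are stood in for by plain Python callables and flat weight lists, and the names `ReplayBuffer`, `critic_targets`, `soft_update`, the state layout, and the defaults `gamma=0.99` and `tau=0.005` are illustrative assumptions.

```python
import random
from collections import deque
from typing import Callable, List, NamedTuple, Sequence, Tuple

State = Tuple[float, ...]   # assumed layout: e.g. a 6-DoF pose plus other observations
Action = Tuple[float, ...]  # assumed layout: e.g. continuous velocity commands


class Transition(NamedTuple):
    state: State
    action: Action
    reward: float
    next_state: State
    done: bool  # True when the episode ended (e.g. collision or goal reached)


class ReplayBuffer:
    """Fixed-capacity FIFO buffer with uniform sampling, as in standard DDPG."""

    def __init__(self, capacity: int, seed: int = 0) -> None:
        self._data: deque = deque(maxlen=capacity)  # oldest transitions are evicted first
        self._rng = random.Random(seed)

    def __len__(self) -> int:
        return len(self._data)

    def push(self, transition: Transition) -> None:
        self._data.append(transition)

    def sample(self, batch_size: int) -> List[Transition]:
        # Sampling uniformly from past experience breaks the temporal
        # correlation between consecutive transitions.
        return self._rng.sample(list(self._data), batch_size)


def critic_targets(
    batch: Sequence[Transition],
    target_actor: Callable[[State], Action],
    target_critic: Callable[[State, Action], float],
    gamma: float = 0.99,
) -> List[float]:
    """y = r + gamma * Q'(s', mu'(s')), with no bootstrap term at terminal states."""
    targets = []
    for t in batch:
        bootstrap = 0.0 if t.done else target_critic(t.next_state, target_actor(t.next_state))
        targets.append(t.reward + gamma * bootstrap)
    return targets


def soft_update(target: Sequence[float], online: Sequence[float], tau: float = 0.005) -> List[float]:
    """Polyak averaging, element-wise: theta' <- tau * theta + (1 - tau) * theta'."""
    return [tau * w + (1.0 - tau) * w_t for w, w_t in zip(online, target)]
```

In use, each control step would push one `Transition`, and each training step would sample a minibatch, regress the critic toward `critic_targets(...)`, move the actor along the critic's action gradient, and then apply `soft_update` to both target networks. The gradient steps are omitted because they depend on the network library. Zeroing the bootstrap term when `done` is set keeps value from propagating past a terminal event such as a collision, and a small `tau` makes the target networks trail the online networks slowly, which stabilizes the critic's regression target.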

Autonomous motion control based on DDPG-E


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
