Research Paper:

An Analysis of Viewing Intentions for Promotional Videos Using Fuzzy c-Means Clustering: A Comparative Study Between Japan and Singapore

Naruki Shirahama*1,†, Naofumi Nakaya*2, Kenji Moriya*3, Kazuhiro Koshi*4, Keiji Matsumoto*5, and Satoshi Watanabe*6

*1Shimonoseki City University

2-1-1 Daigaku-cho, Shimonoseki, Yamaguchi 751-8510, Japan

†Corresponding author

*2Juntendo University

Hinode, Urayasu, Chiba 279-0013, Japan

*3National Institute of Technology (KOSEN), Hakodate College

14-1 Tokura, Hakodate, Hokkaido 042-8501, Japan

*4National Institute of Technology (KOSEN), Kumamoto College

2659-2 Suya, Koshi, Kumamoto 861-1102, Japan

*5National Institute of Technology (KOSEN), Kitakyushu College

5-20-1 Shii, Kokuraminami, Kitakyushu, Fukuoka 802-0985, Japan

*6Shizuoka Institute of Science and Technology

2200-2 Toyosawa, Fukuroi, Shizuoka 437-8555, Japan

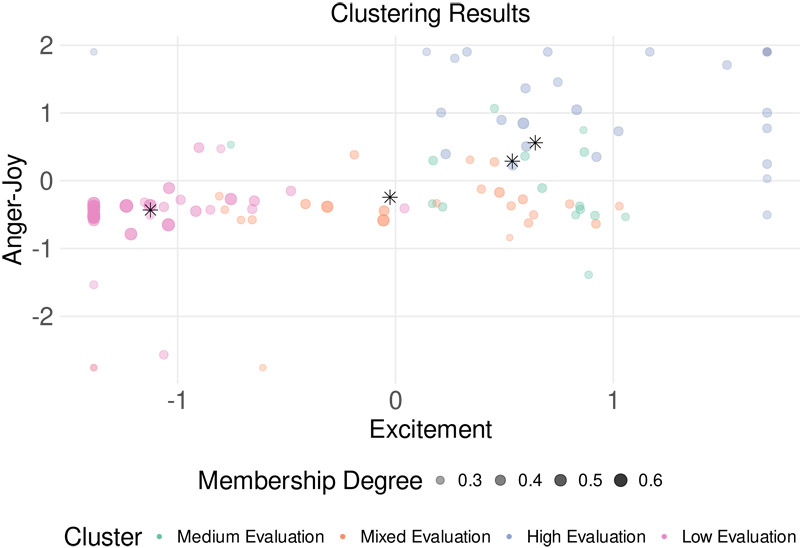

This study systematically investigated the relationship between viewers’ emotional responses and their intention to view animated promotional videos, using a visual analog scale (VAS) to measure responses and fuzzy c-means clustering (FCM) to analyze them. FCM analysis of survey data collected from students in Japan (n=71) and Singapore (n=27) revealed four distinct viewer clusters, a “high evaluation group,” a “medium evaluation group,” a “mixed group,” and a “low evaluation group,” each exhibiting a characteristic pattern of emotional responses. Multiple regression analysis showed that joy (β=0.503) and excitement (β=0.276) had significant positive effects on viewing intention, with the model accounting for 54% of the variance in viewing intention (adjusted R²=0.524). Statistically significant differences (p<0.05) were observed between the two cultural groups, particularly in joy responses, with Singaporean students expressing greater appreciation. These findings can inform promotional strategies for international video distribution platforms by highlighting the importance of eliciting positive emotional responses and of accounting for cultural differences in audience segmentation and targeting. A limitation of this study is its relatively small sample size, which may not fully represent the broader populations of Japan and Singapore. Future research should validate these findings with larger and more diverse samples to establish their generalizability.
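The abstract names fuzzy c-means (FCM) as the clustering step. The following is a minimal, generic sketch of the standard Bezdek FCM iteration in plain Python, not the authors’ implementation: the function name `fcm`, the fuzzifier m=2, the tolerance, and the random initialization are all assumptions, and the input points merely stand in for per-participant VAS emotion scores. Unlike hard clustering, every participant receives a membership degree in every cluster, and each participant’s memberships sum to 1.

```python
import math
import random


def fcm(points, c, m=2.0, max_iter=300, tol=1e-6, seed=0):
    """Standard fuzzy c-means (Bezdek). Returns (centers, memberships).

    points: list of equal-length numeric sequences (e.g. hypothetical VAS scores)
    c: number of clusters; m: fuzzifier (> 1), assumed here to be 2.
    """
    rng = random.Random(seed)
    n, dim = len(points), len(points[0])

    # Random initial memberships; each row is normalized to sum to 1.
    u = []
    for _ in range(n):
        row = [rng.random() for _ in range(c)]
        s = sum(row)
        u.append([x / s for x in row])

    centers = []
    power = 2.0 / (m - 1.0)
    for _ in range(max_iter):
        # Center update: mean of all points weighted by u_ij^m.
        centers = []
        for j in range(c):
            w = [u[i][j] ** m for i in range(n)]
            total = sum(w)
            centers.append([sum(w[i] * points[i][d] for i in range(n)) / total
                            for d in range(dim)])

        # Membership update: u_ij = 1 / sum_k (d_ij / d_ik)^(2/(m-1)).
        new_u = []
        for i in range(n):
            dists = [math.dist(points[i], v) for v in centers]
            if min(dists) == 0.0:
                # Point sits exactly on a center: give it crisp membership there.
                zeros = [j for j, d in enumerate(dists) if d == 0.0]
                row = [1.0 / len(zeros) if j in zeros else 0.0 for j in range(c)]
            else:
                row = [1.0 / sum((dists[j] / dists[k]) ** power for k in range(c))
                       for j in range(c)]
            new_u.append(row)

        # Stop when no membership changes by more than tol.
        shift = max(abs(new_u[i][j] - u[i][j]) for i in range(n) for j in range(c))
        u = new_u
        if shift < tol:
            break
    return centers, u
```

Calling `fcm(points, c=4)` would mirror the four clusters reported above. A participant can be labeled by the cluster with the largest membership, while the full membership vector shows how strongly that participant straddles clusters, which is one way a “mixed group” can be characterized.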

FCM viewer classification
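The abstract reports standardized regression coefficients (β) for joy and excitement together with the share of variance explained (R²) and adjusted R². As a sketch only, assuming ordinary least squares with exactly two predictors (the paper’s full model may include further emotions, which this section does not state), the standardized coefficients follow from pairwise Pearson correlations, and adjusted R² = 1 − (1 − R²)(n − 1)/(n − p − 1) penalizes R² for the number of predictors p relative to the sample size n. The function names below are hypothetical.

```python
import math


def pearson(a, b):
    """Pearson correlation of two equal-length, non-constant sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


def standardized_betas(x1, x2, y):
    """Standardized OLS coefficients and R^2 for the model y ~ x1 + x2."""
    r1y, r2y, r12 = pearson(x1, y), pearson(x2, y), pearson(x1, x2)
    denom = 1.0 - r12 * r12  # zero only if the predictors are perfectly collinear
    b1 = (r1y - r12 * r2y) / denom
    b2 = (r2y - r12 * r1y) / denom
    r2 = b1 * r1y + b2 * r2y  # R^2 expressed through standardized betas
    return b1, b2, r2


def adjusted_r2(r2, n, p):
    """Adjusted R^2 for n observations and p predictors."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)
```

The correction grows as p increases or n shrinks, which is why an adjusted value such as 0.524 sits slightly below the 54% of variance explained.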

- [1] N. Shirahama, S. Watanabe, K. Moriya, K. Koshi, and K. Matsumoto, “A new method of subjective evaluation using visual analog scale for small sample data analysis,” J. of Information Processing, Vol.29, pp. 424-433, 2021. https://doi.org/10.2197/ipsjjip.29.424

- [2] N. Shirahama, K. Murakami, S. Watanabe, N. Nakaya, and Y. Mori, “Subjective evaluation experiment of grayscale color to examine VAS measurement method,” Proc. of the 7th IIAE Int. Conf. on Intelligent Systems and Image Processing 2019 (ICISIP2019), pp. 147-153, 2019. https://doi.org/10.12792/icisip2019.027

- [3] N. Shirahama, S. Kondo, K. Matsumoto, K. Moriya, N. Nakaya, K. Koshi, and S. Watanabe, “Quantitative analysis of conversational response nuances using visual analog scale, data visualization, and clustering,” 2024 IEEE/ACIS 22nd Int. Conf. on Software Engineering Research, Management and Applications (SERA), pp. 362-367, 2024. https://doi.org/10.1109/SERA61261.2024.10685555

- [4] N. Shirahama, N. Nakaya, S. Watanabe, K. Moriya, K. Matsumoto, and K. Koshi, “Assessing emotional intelligence in AI: Visual analogue scale vs. Likert scale in short story comprehension,” J. of the Institute of Industrial Applications Engineers, Vol.13, pp. 21-31, 2025. https://doi.org/10.12792/jiiae.13.21

- [5] N. Shirahama, N. Nakaya, and S. Watanabe, “Comparative study of visual analogue scale and Likert scale using chat generation AI,” Proc. of the 11th Int. Conf. on Intelligent Systems and Image Processing 2024, pp. 195-202, 2024. https://doi.org/10.12792/icisip2024.038

- [6] N. Shirahama, N. Nakaya, K. Koshi, K. Matsumoto, K. Moriya, and S. Watanabe, “Proposal for quantification and analysis method of nuances in conversation responses using visual analog scale,” ICIC Express Letters, Part B: Applications, Vol.15, pp. 71-81, 2024. https://doi.org/10.24507/icicelb.15.01.71

- [7] R. Deng and Y. Gao, “A review of eye tracking research on video-based learning,” Education and Information Technologies, Vol.28, pp. 7671-7702, 2023. https://doi.org/10.1007/s10639-022-11486-7

- [8] M. A. García-Pérez and R. Alcalá-Quintana, “Accuracy and precision of responses to visual analog scales: Inter- and intra-individual variability,” Behavior Research Methods, Vol.55, pp. 4369-4381, 2023. https://doi.org/10.3758/s13428-022-02021-0

- [9] T. Kuhlmann, M. Dantlgraber, and U.-D. Reips, “Investigating measurement equivalence of visual analogue scales and Likert-type scales in internet-based personality questionnaires,” Behavior Research Methods, Vol.49, pp. 2173-2181, 2017. https://doi.org/10.3758/s13428-016-0850-x

- [10] S. Chan, N. Imanishi, S. Watanabe, and N. Shirahama, “A proposal of an experimental method for quantifying impressions using visual analog scale,” Proc. of the 9th IIAE Int. Conf. on Intelligent Systems and Image Processing 2022, pp. 46-52, 2022. https://doi.org/10.12792/icisip2022.010

- [11] M.-B. Naghi, L. Kovács, and L. Szilágyi, “A review on advanced c-means clustering models based on fuzzy logic,” 2023 IEEE 21st World Symp. on Applied Machine Intelligence and Informatics (SAMI), pp. 293-298, 2023. https://doi.org/10.1109/SAMI58000.2023.10044530

- [12] C. Wu and X. Qi, “Reconstruction-aware kernelized fuzzy clustering framework incorporating local information for image segmentation,” Neural Processing Letters, Vol.56, Article No.123, 2024. https://doi.org/10.1007/s11063-024-11450-1

- [13] F. Zhao, Y. Yang, H. Liu, and C. Wang, “A robust multi-view knowledge transfer-based rough fuzzy c-means clustering algorithm,” Complex & Intelligent Systems, Vol.10, pp. 5331-5358, 2024. https://doi.org/10.1007/s40747-024-01431-1

- [14] P. Hajek and V. Olej, “Hierarchical intuitionistic TSK fuzzy system for Bitcoin price forecasting,” 2023 IEEE Int. Conf. on Fuzzy Systems (FUZZ), 2023. https://doi.org/10.1109/FUZZ52849.2023.10309793

- [15] D. Wijaya, H. Murfi, and G. Ardaneswari, “Topic-level sentiment analysis for user reviews in gasoline subsidy application,” 2024 11th IEEE Swiss Conf. on Data Science (SDS), pp. 221-224, 2024. https://doi.org/10.1109/SDS60720.2024.00038

- [16] R. A. Fitrianto, A. S. Editya, M. M. Alamin, A. L. Pramana, and A. K. Alhaq, “Classification of Indonesian sarcasm tweets on X platform using deep learning,” 2024 7th Int. Conf. on Informatics and Computational Sciences (ICICoS), pp. 388-393, 2024. https://doi.org/10.1109/ICICoS62600.2024.10636904

- [17] H. Nishikori, S. Lee, and T. Hayashi, “Analysis of the relationship between colors and impression on Studio Ghibli movie posters,” 2024 Joint 13th Int. Conf. on Soft Computing and Intelligent Systems and 25th Int. Symp. on Advanced Intelligent Systems (SCIS&ISIS), 2024. https://doi.org/10.1109/SCISISIS61014.2024.10760063

- [18] D. Susilo and Harliantara, “The digital promotion of Japanese and Korean movie in OTT platform by Netflix,” Indonesian J. of Business Analytics, Vol.3, pp. 1979-1994, 2023. https://doi.org/10.55927/ijba.v3i5.6418

- [19] S. Kumar and T. Sharma, “Impact of marketing strategies on consumer buying behaviour with specific reference to movies as a medium,” Int. Research J. on Advanced Engineering and Management (IRJAEM), Vol.2, pp. 248-255, 2024. https://doi.org/10.47392/IRJAEM.2024.0038

- [20] T. Makioka, S. Okamoto, and I. Tara, “Difference in skin conductance dynamics to horror and family bond emotional movies,” 2022 IEEE 11th Global Conf. on Consumer Electronics (GCCE), pp. 444-446, 2022. https://doi.org/10.1109/GCCE56475.2022.10014187

- [21] S. Fremerey, R. F. U. Zaman, T. Ashraf, R. R. R. Rao, S. Göring, and A. Raake, “Towards evaluation of immersion, visual comfort and exploration behaviour for non-stereoscopic and stereoscopic 360° videos,” 2023 IEEE Int. Symp. on Multimedia (ISM), pp. 131-138, 2023. https://doi.org/10.1109/ISM59092.2023.00025

- [22] L. Song, “Research on the application of computer 3D technology in the creation of films adapted from literary works,” 2022 IEEE Asia-Pacific Conf. on Image Processing, Electronics and Computers (IPEC), pp. 1461-1465, 2022. https://doi.org/10.1109/IPEC54454.2022.9777629

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
