Research Paper:

BrainLM: Enhancing Brain Encoding and Decoding Capabilities with Applications in Multilingual Learning

Ying Luo

and Ichiro Kobayashi

Graduate School of Humanities and Sciences, Ochanomizu University

2-1-1 Ohtsuka, Bunkyo-ku, Tokyo 112-8610, Japan

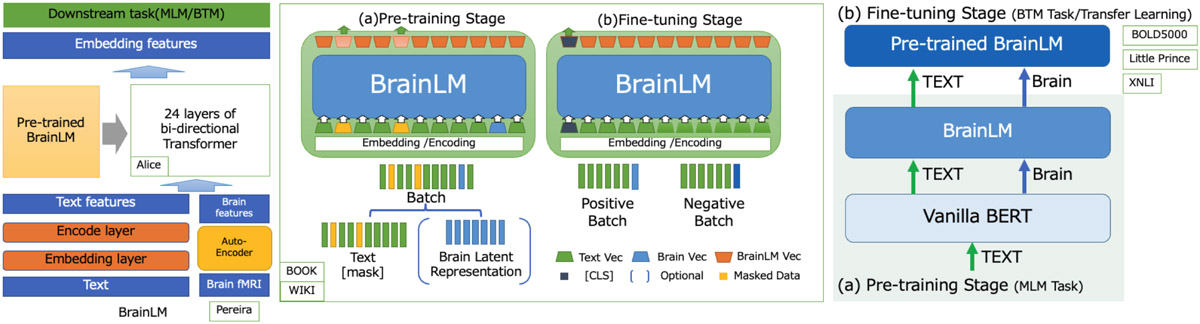

With the rapid advancement of large language models in natural language processing (NLP), many studies have explored their role in brain encoding and decoding. In this study, we developed BrainLM, a pre-trained multimodal model that incorporates brain activity data paired with the text stimuli that evoked it. We demonstrated its accuracy in brain encoding and decoding across multiple NLP tasks. Our main findings are as follows: we developed a model capable of both brain encoding and decoding, validated its reliability through bidirectional experiments, and outperformed 20 state-of-the-art models on brain encoding tasks. Additionally, we designed an autoencoder module to extract brain features. We further extended BrainLM to new datasets and explored multilingual tasks using transfer learning, which improved the generalization ability of the model. Notably, BrainLM achieved 51.75% accuracy in binary classification tasks and increased the correlation coefficient by 3%–15% in brain prediction tasks. This study broadens the applications of BrainLM and sheds light on the complex interactions between brain regions and language models across different linguistic environments.

BrainLM: a framework for brain-to-text
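The abstract reports brain encoding gains as increases in a correlation coefficient but does not state how that coefficient is computed. A common convention in brain encoding work is to correlate predicted and measured responses voxel by voxel and average the results. The sketch below assumes that convention, and also assumes that the "3%–15%" figure is a relative change over a baseline model. The function names (`pearson_r`, `mean_voxel_correlation`, `relative_gain`) and the list-of-time-series data layout are hypothetical illustrations, not BrainLM's actual code.

```python
import math
from statistics import fmean


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have equal length >= 2")
    mx, my = fmean(x), fmean(y)
    dx = [a - mx for a in x]
    dy = [b - my for b in y]
    num = sum(a * b for a, b in zip(dx, dy))
    den = math.sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))
    # Correlation is undefined for a constant series; report 0.0 by convention.
    return num / den if den > 0 else 0.0


def mean_voxel_correlation(predicted, observed):
    """Average per-voxel Pearson r.

    Hypothetical layout: predicted[v] and observed[v] are the time series
    (one value per stimulus or TR) for voxel v.
    """
    if len(predicted) != len(observed) or not observed:
        raise ValueError("need the same, non-zero number of voxels")
    return fmean(pearson_r(p, o) for p, o in zip(predicted, observed))


def relative_gain(model_r, baseline_r):
    """Percent change of model_r over baseline_r (assumed reading of '3%-15%')."""
    if baseline_r == 0:
        raise ValueError("baseline correlation must be non-zero")
    return 100.0 * (model_r - baseline_r) / abs(baseline_r)


if __name__ == "__main__":
    # Toy data for two voxels over four time points (illustrative only).
    observed = [[1.0, 2.0, 3.0, 4.0], [2.0, 1.0, 0.0, 1.0]]
    baseline = [[1.0, 3.0, 2.0, 4.0], [1.0, 1.0, 0.0, 2.0]]
    model = [[1.1, 2.0, 3.1, 3.9], [2.0, 1.2, 0.1, 0.9]]
    r_base = mean_voxel_correlation(baseline, observed)
    r_model = mean_voxel_correlation(model, observed)
    print(f"baseline r = {r_base:.3f}, model r = {r_model:.3f}")
    print(f"relative gain = {relative_gain(r_model, r_base):.1f}%")
```

Because a relative gain can look large when the baseline correlation is small, whether a reported improvement is relative or in absolute correlation points matters when comparing encoding results across studies.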

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
