Research Paper:

A Text-Based Suicide Detection Model Using Hybrid Prompt Tuning in Few-Shot Scenarios

Yiwen He, Lulu Ji, Ruipeng Qian, and Wentao Gu†

Research Institute of Econometrics and Statistics, Zhejiang Gongshang University

18 Xuezheng Street, Xiasha Education Park, Hangzhou, Zhejiang 310018, China

†Corresponding author

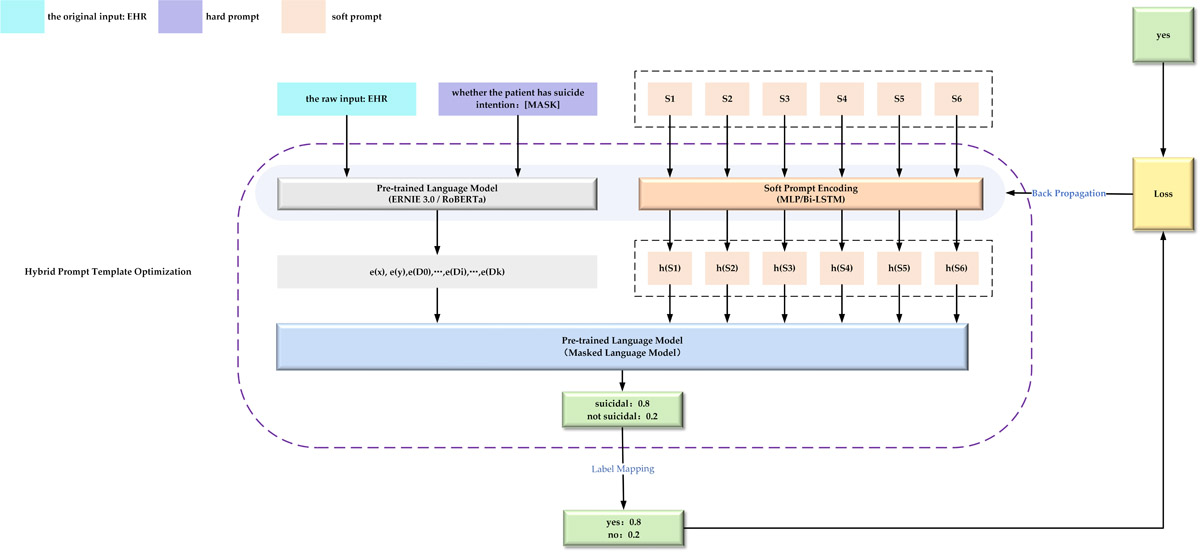

Suicide is more prevalent among individuals with psychiatric disorders, which underscores the importance of identifying warning signs early enough for intervention. Common text-based suicide detection models often require large amounts of labeled data and are therefore prone to overfitting on small datasets. To address this problem, we propose a prompt-based suicide detection model for low-resource settings that follows the “pre-train, prompt, predict” paradigm, named E3.0-HP-SDM (ERNIE 3.0 Hybrid Prompt-Suicide Detection Model). As the pre-trained language model (PLM) of E3.0-HP-SDM, we selected ERNIE 3.0, which is known for its knowledge enhancement capabilities. We also developed a hybrid prompt template that integrates a set of tunable soft prompts into a suicide-specific hard prompt template. This template reformulates the original input into a format with unfilled slots, designed to guide the PLM in applying its knowledge-masked language model to infer suicidal intention. On the same data, E3.0-HP-SDM outperforms not only other models within the same paradigm but also frequently cited baseline combination models that follow the third paradigm of natural language processing, the “pre-train, fine-tune” paradigm, achieving an accuracy of 87.6% and an AUC of 85.2%.
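The hybrid prompt and slot-filling steps described above can be sketched as follows. This is a minimal, framework-free illustration under stated assumptions, not the authors' implementation: the hard template wording, the `[P0]`, `[P1]`, ... soft-prompt placeholders (prepended here, although they could also be interleaved with the hard template), the yes/no label words in `VERBALIZER`, and all function names are hypothetical. A real system would obtain the `[MASK]`-slot scores from ERNIE 3.0 and learn an embedding for each soft token; here the scores are passed in by hand. The block also shows how accuracy and AUC, the two reported metrics, are conventionally computed from predicted probabilities.

```python
import math
from typing import Dict, List, Sequence

# HYPOTHETICAL hard template: the paper's actual suicide-related wording is not given here.
HARD_TEMPLATE = "{text} Suicidal intention: [MASK]."

# HYPOTHETICAL verbalizer mapping each class to label words the PLM may predict at [MASK].
VERBALIZER: Dict[int, List[str]] = {1: ["yes"], 0: ["no"]}


def build_hybrid_prompt(text: str, n_soft: int = 4) -> str:
    """Prepend n_soft soft-prompt placeholders to the hard template.

    In prompt tuning, each [Pi] would be bound to a trainable embedding rather
    than a vocabulary token; here they are plain string placeholders.
    """
    soft = " ".join(f"[P{i}]" for i in range(n_soft))
    return f"{soft} {HARD_TEMPLATE.format(text=text)}"


def verbalize(mask_logits: Dict[str, float]) -> float:
    """Map the PLM's [MASK]-slot scores for label words to P(label = 1)."""
    # Average the scores of each class's label words.
    class_scores = {
        label: sum(mask_logits[w] for w in words) / len(words)
        for label, words in VERBALIZER.items()
    }
    # Softmax over the class scores, shifted by the max for numerical stability.
    top = max(class_scores.values())
    exps = {label: math.exp(s - top) for label, s in class_scores.items()}
    return exps[1] / sum(exps.values())


def accuracy(y_true: Sequence[int], probs: Sequence[float], threshold: float = 0.5) -> float:
    """Fraction of examples whose thresholded prediction matches the label."""
    preds = [1 if p >= threshold else 0 for p in probs]
    return sum(p == y for p, y in zip(preds, y_true)) / len(y_true)


def roc_auc(y_true: Sequence[int], probs: Sequence[float]) -> float:
    """ROC AUC as the chance a random positive outscores a random negative (ties count 0.5)."""
    pos = [p for p, y in zip(probs, y_true) if y == 1]
    neg = [p for p, y in zip(probs, y_true) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    print(build_hybrid_prompt("I feel hopeless lately.", n_soft=2))
    # [P0] [P1] I feel hopeless lately. Suicidal intention: [MASK].
    print(round(verbalize({"yes": 2.0, "no": 0.0}), 3))  # 0.881
    labels, scores = [1, 0, 1, 0], [0.9, 0.2, 0.4, 0.6]
    print(accuracy(labels, scores), roc_auc(labels, scores))  # 0.5 0.75
```

Normalizing only over the verbalizer's label words, rather than the whole vocabulary, is one common design choice for turning a masked-language-model prediction into a class probability. The pairwise definition of AUC used here (the Mann-Whitney statistic) equals the area under the ROC curve.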

The optimization process of a hybrid prompt-based detection model

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.