Research Paper:

High-Precision Feature Point Matching and Stereo-Depth Estimation Using Rotation-Invariant CNN

Makoto Anazawa*, Hajime Nobuhara*, and Nozomu Ohta**

*University of Tsukuba

1-1-1 Tenoudai, Tsukuba, Ibaraki 305-8573, Japan

**Institute of Agricultural Machinery, National Agriculture and Food Research Organization

1-31-1 Kannondai, Tsukuba, Ibaraki 305-8517, Japan

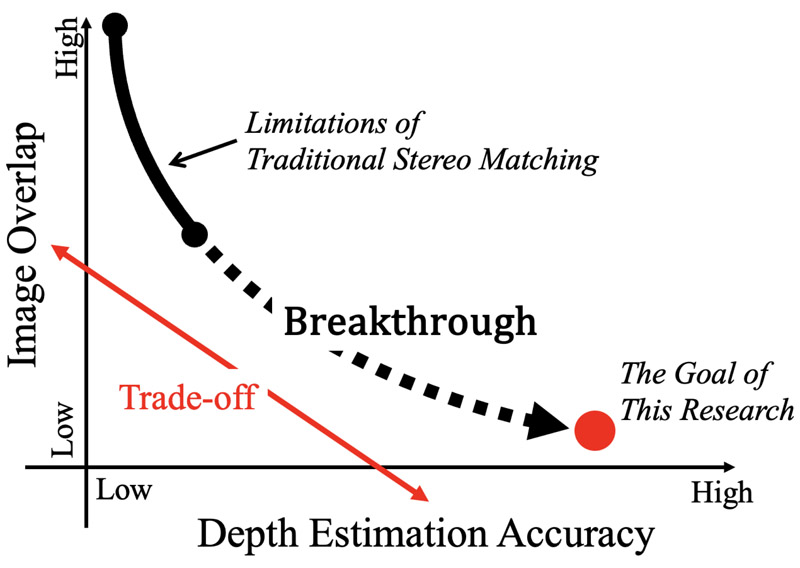

Stereo-matching has become essential in various industrial applications, including robotics, autonomous driving, and drone-based surveying. In drone-based depth estimation, images are captured from two different positions, and corresponding points between them are determined through stereo-matching. A longer distance between the two positions (the baseline) improves triangulation accuracy but makes stereo-matching more difficult because the image overlap decreases. Previous methods are limited by this trade-off: they require at least 50% image overlap and still achieve only centimeter-level accuracy. We therefore propose stereo viewing with feature point matching, which matches points directly between the images. Our approach uses a novel rotation-invariant convolutional neural network (CNN) that extracts features more effectively than previous CNN-based models when the subject undergoes angular changes. On the HPatches dataset, our method improved feature point matching accuracy by up to 0.9%. In a practical stereo imaging setting, it achieved a height estimation error of approximately 1.2 mm and a height resolution of approximately 2.6 mm on image pairs with approximately 25% overlap under varying conditions. This performance confirms that the proposed approach effectively resolves the trade-off inherent to traditional stereo-matching techniques, particularly in the challenging low-overlap scenarios that previous methods could not handle. Consequently, this study substantially broadens the applicability and versatility of stereo-depth estimation.

Advancing stereo-matching methods
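The baseline/overlap trade-off described in the abstract can be illustrated with the textbook relations for an ideal rectified stereo pair; these relations are standard geometry, not the authors' method. Depth is Z = f·B/d for focal length f (pixels), baseline B and disparity d, and a disparity error dd propagates to a depth error of roughly dZ ≈ Z²·dd/(f·B). The Python sketch below assumes two nadir-looking pinhole images displaced along the image x-axis; the function names and the camera parameters (20 m altitude, 6000 px image width, 4000 px focal length) are hypothetical and do not describe the drone setup used in the paper.

```python
"""Illustrative rectified-stereo trade-off: baseline vs. overlap vs. depth resolution.

All camera parameters are hypothetical; they are not the paper's setup.
"""


def footprint_width_m(altitude_m: float, image_width_px: int, focal_px: float) -> float:
    # Ground width covered by a nadir-pointing pinhole camera (similar triangles).
    return altitude_m * image_width_px / focal_px


def overlap_fraction(baseline_m: float, altitude_m: float,
                     image_width_px: int, focal_px: float) -> float:
    # Two nadir shots displaced by baseline_m along the image x-axis share
    # (footprint - baseline) metres of ground; clamp at zero when they do not overlap.
    width = footprint_width_m(altitude_m, image_width_px, focal_px)
    return max(0.0, 1.0 - baseline_m / width)


def depth_from_disparity_m(disparity_px: float, baseline_m: float, focal_px: float) -> float:
    # Triangulation for a rectified pair: Z = f * B / d.
    return focal_px * baseline_m / disparity_px


def depth_resolution_m(depth_m: float, baseline_m: float, focal_px: float,
                       disparity_error_px: float = 1.0) -> float:
    # First-order error propagation of Z = f B / d: |dZ| = Z^2 / (f B) * |dd|.
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


if __name__ == "__main__":
    altitude, width_px, focal = 20.0, 6000, 4000.0  # hypothetical drone camera
    for target_overlap in (0.75, 0.50, 0.25):
        # Baseline that yields the requested overlap at this altitude.
        baseline = (1.0 - target_overlap) * footprint_width_m(altitude, width_px, focal)
        res_mm = 1000.0 * depth_resolution_m(altitude, baseline, focal)
        print(f"overlap {target_overlap:.0%}: baseline {baseline:.1f} m, "
              f"depth resolution ~{res_mm:.1f} mm per pixel of disparity error")
```

Running it shows the depth resolution improving as the overlap drops from 75% to 25%, which is the trade-off that motivates matching under low overlap. Real drone imagery adds lens distortion, non-parallel viewpoints, and matching errors that this idealization ignores.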

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.