Paper:

Wide-Range and Real-Time 3D Tracking of a Moving Object by Dynamic Compensation Based on High-Speed Visual Feedback

Xuebin Zhu*,†, Kenichi Murakami**, and Yuji Yamakawa***

*Graduate School of Engineering, The University of Tokyo

4-6-1 Komaba, Meguro-ku, Tokyo 153-8505, Japan

†Corresponding author

**Institute of Industrial Science, The University of Tokyo

4-6-1 Komaba, Meguro-ku, Tokyo 153-8505, Japan

***Interfaculty Initiative in Information Studies, The University of Tokyo

4-6-1 Komaba, Meguro-ku, Tokyo 153-8505, Japan

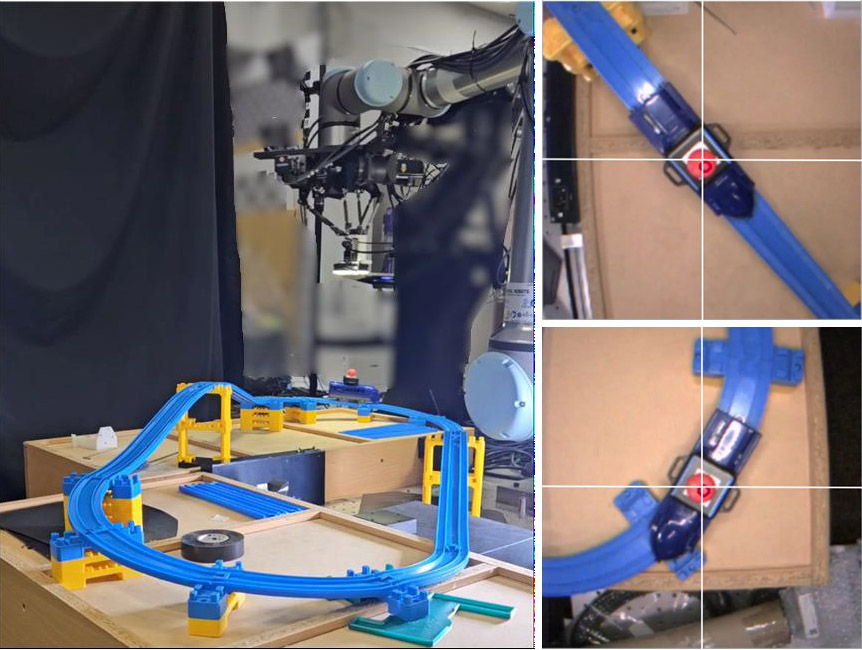

To apply dynamic compensation to increase productivity and realize high-speed manipulation and manufacturing, a wide-range 3D tracking system is developed that combines a high-speed 3D compensation module (also called a delta robot because of its structure) with a robotic arm. During tracking, the cameras capture 1000 images per second, and the position of the tracked object in the real-world coordinate system of the robotic-arm base is calculated from the collected pixel data and the camera calibration parameters. Corresponding commands are then generated for the robotic arm and the delta robot, using encoder feedback from both, so that they maintain their positions relative to the tracked object. The cameras provide 1000-Hz visual feedback, and the high-speed compensation module responds to movement at 1000 Hz with high accuracy. The robotic arm extends this accuracy over a wide range. Successful tracking along the x- and y-axes is achieved. Even when the object moves faster, the tracking accuracy remains high along the x- and z-axes. This study demonstrates the feasibility of using a delta robot to achieve wide-range object tracking, which may contribute to higher-speed manipulation by robotic arms and thereby increase productivity.

Developed system and tracking results
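The coarse/fine division of labor described in the abstract can be illustrated with a minimal sketch. It is not the authors' controller: the function `split_command`, the `Limits` values (fine-stage stroke, arm step per cycle), and the idealized assumption that both stages reach their commands within one 1-ms cycle are all hypothetical, chosen only to show how a fast, short-stroke stage can absorb the error that a slower, wide-range arm has not yet covered.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


def add(a: Vec3, b: Vec3) -> Vec3:
    return tuple(x + y for x, y in zip(a, b))


def sub(a: Vec3, b: Vec3) -> Vec3:
    return tuple(x - y for x, y in zip(a, b))


def clamp_vec(v: Vec3, limit: float) -> Vec3:
    # Clamp every component to [-limit, +limit].
    return tuple(max(-limit, min(limit, x)) for x in v)


@dataclass
class Limits:
    # Both values are illustrative assumptions, not taken from the paper.
    delta_stroke: float = 0.02   # fine-stage range per axis [m]
    arm_step: float = 0.0002     # max arm motion per 1-ms cycle [m]


def split_command(p_obj: Vec3, offset: Vec3, p_arm: Vec3, limits: Limits):
    """Return (arm_cmd, delta_cmd) for one control cycle.

    p_obj  : object position in the arm base frame (from the cameras)
    offset : desired tool position relative to the object
    p_arm  : arm end position in the base frame (from arm encoders)
    """
    target = add(p_obj, offset)
    residual = sub(target, p_arm)
    # Fine stage: cover the residual, limited by its stroke.
    delta_cmd = clamp_vec(residual, limits.delta_stroke)
    # Coarse stage: step toward the target, limited by its speed.
    arm_cmd = add(p_arm, clamp_vec(residual, limits.arm_step))
    return arm_cmd, delta_cmd


def simulate(steps: int, speed: float = 0.3, dt: float = 0.001) -> float:
    """Worst per-axis tool error [m] while the object moves along x."""
    limits = Limits()
    offset = (0.0, 0.0, 0.1)
    p_arm = (0.0, 0.0, 0.1)  # start aligned with the target
    worst = 0.0
    for k in range(steps):
        p_obj = (speed * dt * k, 0.0, 0.0)
        arm_cmd, delta_cmd = split_command(p_obj, offset, p_arm, limits)
        tool = add(p_arm, delta_cmd)  # tool = arm end + fine-stage offset
        err = max(abs(e) for e in sub(add(p_obj, offset), tool))
        worst = max(worst, err)
        p_arm = arm_cmd  # idealized: arm reaches its command each cycle
    return worst
```

With these assumed limits the object outruns the arm by 0.1 mm per cycle, so the fine stage keeps the tool on target only until its stroke saturates; `simulate(100)` stays within the stroke, while `simulate(1000)` does not. In a real system the arm must therefore be fast enough, on average, to keep the residual inside the fine stage's range.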

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.