Paper:

Pose Estimation Focusing on One Object Based on Grasping Quality in Bin Picking

Ryotaro Yoshida and Tsuyoshi Tasaki

Meijo University

1-501 Shiogamaguchi, Tempaku-ku, Nagoya, Aichi 468-8502, Japan

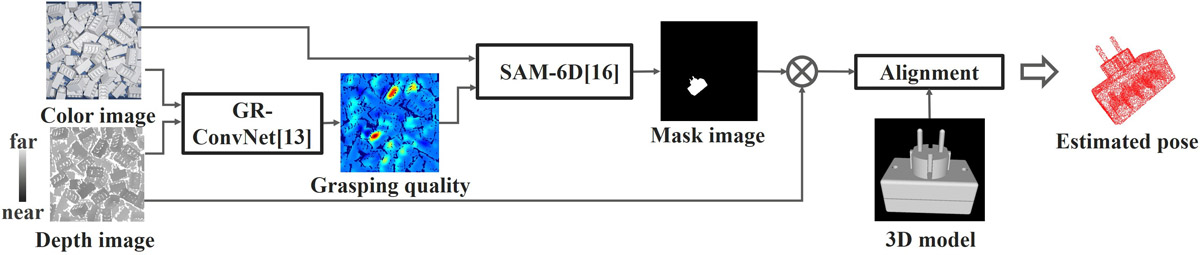

Labor shortages are becoming a serious problem at manufacturing sites, creating demand for automated bin picking. Automating bin picking requires estimating the pose of the objects to be grasped. Conventional methods estimate the poses of multiple objects at once. This is difficult because it demands accurate pose estimation even for objects that are overlapped by others. This study builds on the fact that a robot can grasp only one object at a time. We developed a method that first selects an easily graspable object and then concentrates pose estimation on that single object. On the Siléane dataset, the pose estimation accuracy was 86.1%, which is 17.5 percentage points higher than that of the conventional method, PPR-Net.

Pose estimation focusing on one object
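The select-then-estimate idea described in the abstract can be illustrated with a minimal sketch. It assumes that a per-pixel grasp-quality map, an instance label image, and an organized point cloud are already available; how the paper actually obtains these, and which pose estimator it applies afterwards, is not stated in this abstract. All function and variable names (`select_most_graspable`, `crop_points`, `quality`, `labels`, `points`) are hypothetical, and scoring each object by its mean quality is an assumption made for illustration only.

```python
import numpy as np

def select_most_graspable(quality, labels):
    """Return (instance id, score) of the object with the highest mean
    grasp quality. Label 0 is treated as background (assumption)."""
    ids = np.unique(labels)
    ids = ids[ids != 0]
    if ids.size == 0:
        raise ValueError("no object instances in the label image")
    # Hypothetical scoring rule: average the quality map over each mask.
    scores = np.array([quality[labels == i].mean() for i in ids])
    best = int(np.argmax(scores))
    return int(ids[best]), float(scores[best])

def crop_points(points, labels, instance_id):
    """Keep only the 3D points that belong to the selected instance."""
    return points[labels == instance_id]

# Toy scene: two objects on a 4x4 grid, object 2 is easier to grasp.
labels = np.zeros((4, 4), dtype=int)
labels[:2, :2] = 1
labels[2:, 2:] = 2
quality = np.full((4, 4), 0.1)
quality[labels == 1] = 0.4
quality[labels == 2] = 0.9
points = np.random.default_rng(0).random((4, 4, 3))  # organized cloud, H x W x 3

best_id, best_score = select_most_graspable(quality, labels)
target_points = crop_points(points, labels, best_id)
# target_points would then be handed to a single-object pose estimator.
```

Restricting the point cloud to one selected object means the downstream pose estimator never has to handle heavily occluded instances, which is the motivation the abstract gives for focusing on a single object.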

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
