Paper:

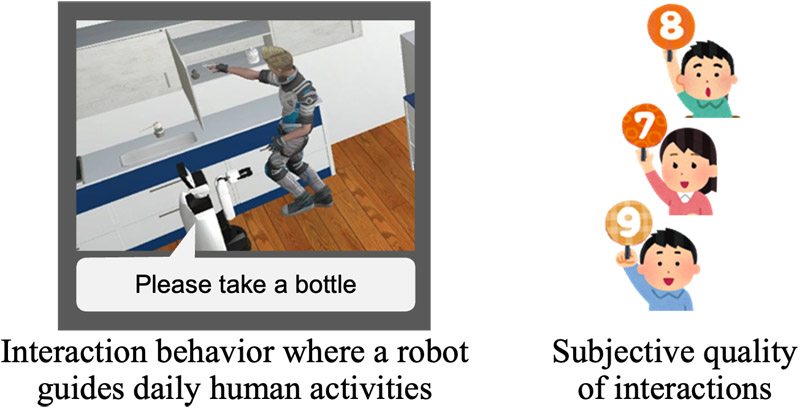

Interaction Quality Evaluation in Guiding Human Daily Life Behavior

Yoshiaki Mizuchi and Tetsunari Inamura

Tamagawa University

6-1-1 Tamagawagakuen, Machida, Tokyo 194-8610, Japan

Interactive service robots must communicate effectively with users when teaching work procedures and when seeking assistance after errors occur. Evaluating interaction quality is crucial for developing and enhancing the interaction capabilities of service robots. However, evaluating interaction quality in daily life scenarios is highly challenging, primarily because of its subjective nature. In this study, we present a series of case studies on evaluating interaction quality in the context of guiding daily life activities. Based on the results of these case studies, we discuss the remaining challenges and provide insights into developing reasonable and efficient methods for evaluating the interaction quality of robots in scenarios where they guide humans.

Subjective evaluation of interaction quality

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.