Paper:

6DoF Graspability Evaluation on a Single Depth Map

Yukiyasu Domae

National Institute of Advanced Industrial Science and Technology

2-3-26 Aomi, Koto-ku, Tokyo 135-0064, Japan

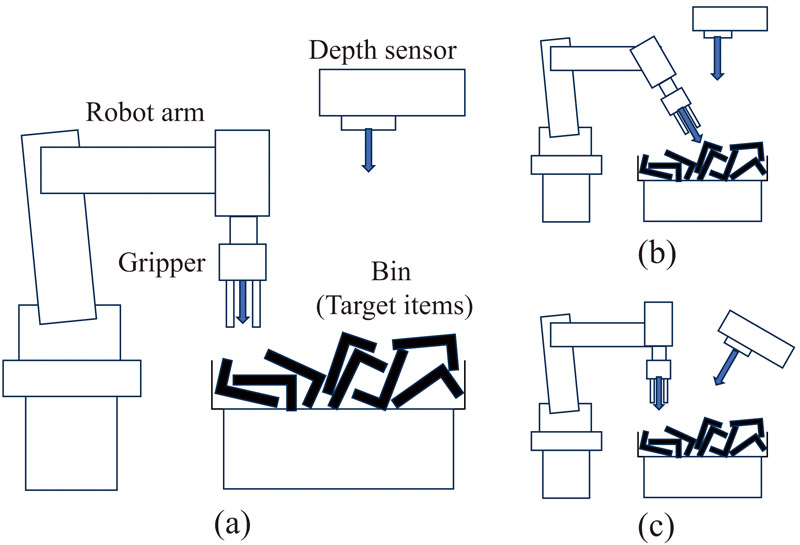

Robotic picking of diverse objects remains a critical challenge in robotic automation research. For vision-based picking, methods based on large-scale pre-training or on object shape models have been proposed. In many real industrial settings, however, preparing the required pre-trained models or object shape models is difficult. Fast graspability evaluation (FGE) is one approach that requires neither: it efficiently detects grasp positions by convolving depth images with a cross-sectional model of the robot hand. FGE has a limitation, however: the grasp direction must align with the sensor’s line of sight, so it can detect grasp positions with at most 4 degrees of freedom (DoF). In this paper, we extend FGE to 6DoF grasp position detection by computing the two additional DoFs of the grasp posture from the surface normal directions around the object’s grasp region, which are obtained from the depth image. In picking experiments with three types of actual industrial parts, the proposed method improved the grasp success rate by an average of 10% compared with FGE.
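The two ideas in the abstract can be illustrated with a minimal sketch. This is not the author's implementation: the function names, the binary `contact_mask` and `collision_mask` hand templates, the single `insert_depth` plane, and the `pixel_size` parameter are all hypothetical, and an orthographic depth map (distance from the sensor, in the same units as `pixel_size`) is assumed. The first function scores grasp centres for one hand orientation by correlating an occupancy image with the hand templates; the second averages depth gradients around a grasp centre to obtain the two extra angles of the approach direction.

```python
import numpy as np


def correlate_same(image, kernel):
    """Zero-padded 2D cross-correlation; output has the shape of `image`.

    `kernel` must have odd height and width.
    """
    kh, kw = kernel.shape
    padded = np.pad(image, ((kh // 2, kh // 2), (kw // 2, kw // 2)))
    windows = np.lib.stride_tricks.sliding_window_view(padded, (kh, kw))
    return np.einsum("ijkl,kl->ij", windows, kernel)


def graspability(depth, contact_mask, collision_mask, insert_depth):
    """Score each pixel as a grasp centre for one hand orientation.

    Assumption: `depth` is distance from the sensor, so pixels with
    depth < insert_depth hold material above the finger-tip plane.
    """
    occupied = (depth < insert_depth).astype(float)
    contact = correlate_same(occupied, contact_mask.astype(float))
    collision = correlate_same(occupied, collision_mask.astype(float))
    # Keep positions where the fingers hit nothing; score by enclosed material.
    return np.where(collision == 0, contact / contact_mask.sum(), 0.0)


def approach_from_normals(depth, center, half_window, pixel_size):
    """Return (tilt, azimuth) in radians of the averaged inward surface normal."""
    d_row, d_col = np.gradient(depth, pixel_size)
    r, c = center
    rows = slice(max(r - half_window, 0), r + half_window + 1)
    cols = slice(max(c - half_window, 0), c + half_window + 1)
    # For the surface z = depth(x, y), the inward normal is
    # proportional to (-dz/dx, -dz/dy, 1).
    a = np.array([-d_col[rows, cols].mean(), -d_row[rows, cols].mean(), 1.0])
    a /= np.linalg.norm(a)
    tilt = np.arccos(a[2])            # angle from the sensor's line of sight
    azimuth = np.arctan2(a[1], a[0])  # direction of the tilt in the image plane
    return tilt, azimuth
```

In this sketch a tilt of zero reproduces the line-of-sight grasp that FGE is limited to, while a non-zero tilt and its azimuth supply the two additional DoFs. A fuller pipeline would presumably repeat the scoring over several hand rotations and insertion depths; those details are not given in the abstract and are left out here.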

Constraints of fast graspability evaluation

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
