Paper:

A Method of Detection and Identification for Axillary Buds

Manabu Kawaguchi and Naoyuki Takesue

Tokyo Metropolitan University

6-6 Asahigaoka, Hino, Tokyo 191-0065, Japan

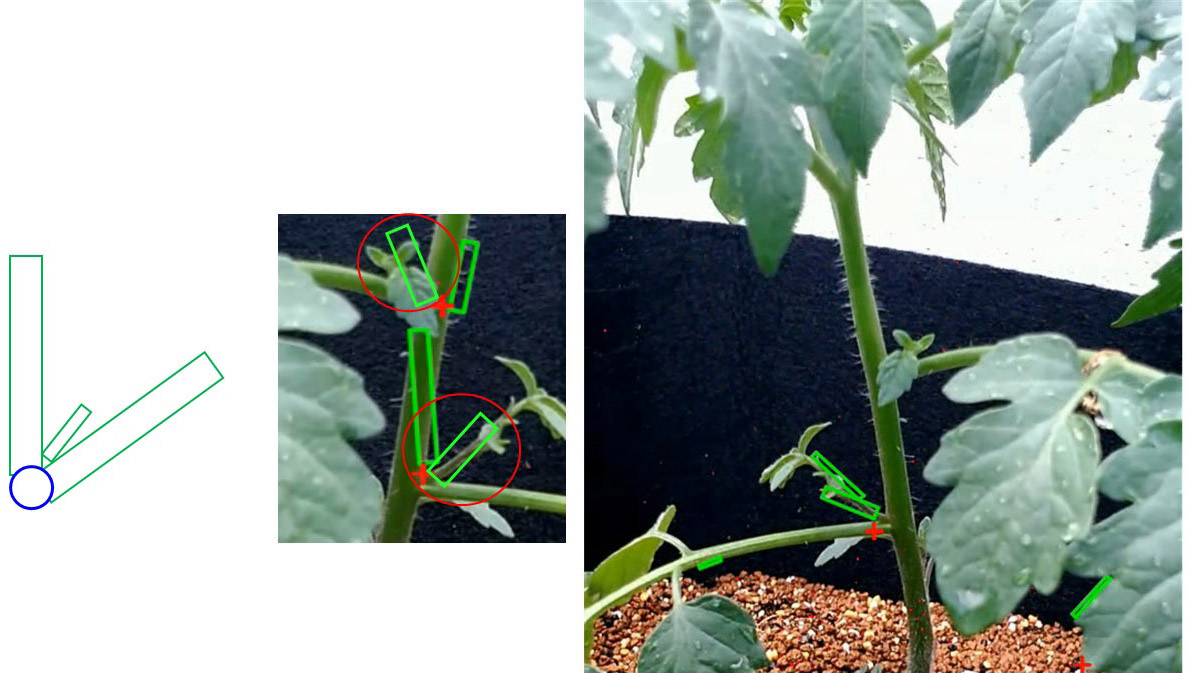

From sowing and planting to harvest, outdoor crops are directly exposed to the natural environment, including wind, rain, frost, and sunlight. Under these conditions, the growth state, shape, and flexibility of vegetables change daily. We aim to develop an agricultural work-support robot that automates monitoring, cultivation, disease detection, and treatment. In recent years, many researchers and venture companies have developed agricultural harvesting robots. In this study, instead of intensive harvesting operations, we focus on the daily farm operations performed from the beginning of cultivation until immediately before harvest. Gripping and cutting are regarded as basic functions common to several of these routine agricultural tasks. To find the target objects in a camera image with a low computational load, this study focuses on branch points, so that stems, lateral branches, and axillary buds can be detected and identified even while they sway in the wind. A branch point is a characteristic part that remains close to the working position even when the wind blows. We therefore propose a method that detects the target branch points simultaneously and divides each branch point into the main stem, lateral branch, and axillary bud. The effectiveness of the method is demonstrated through experimental evaluations on three types of vegetables, whether or not their stems are swaying.

Detection of branch points and identification of each axillary bud

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
