Paper:

Effect of Viewpoint Change on Robot Hand Operation by Gesture- and Button-Based Methods

Qiang Yao, Tatsuro Terakawa, Masaharu Komori, Hirotaka Fujita, and Ikko Yasuda

Department of Mechanical Engineering and Science, Kyoto University

Kyoto daigaku-katsura, Nishikyo-ku, Kyoto 615-8540, Japan

Teaching pendants with multiple buttons are commonly employed to control working robots; however, such devices are not easy to operate. As an alternative, gesture-based manipulation methods using the operator’s upper limb movements have been studied as a way to operate the robots intuitively. Previous studies involving these methods have generally failed to consider the changing viewpoint of the operator relative to the robot, which may adversely affect operability. This study proposes a novel evaluation method and applies it in a series of experiments to compare the influence of viewpoint change on the operability of the gesture- and button-based operation methods. Experimental results indicate that the operability of the gesture-based method is superior to that of the button-based method for all viewpoint angles, due mainly to shorter non-operating times. An investigation of trial-and-error operation indicates that viewpoint change makes positional operation with the button-based method more difficult but has a relatively minor influence on postural operation.

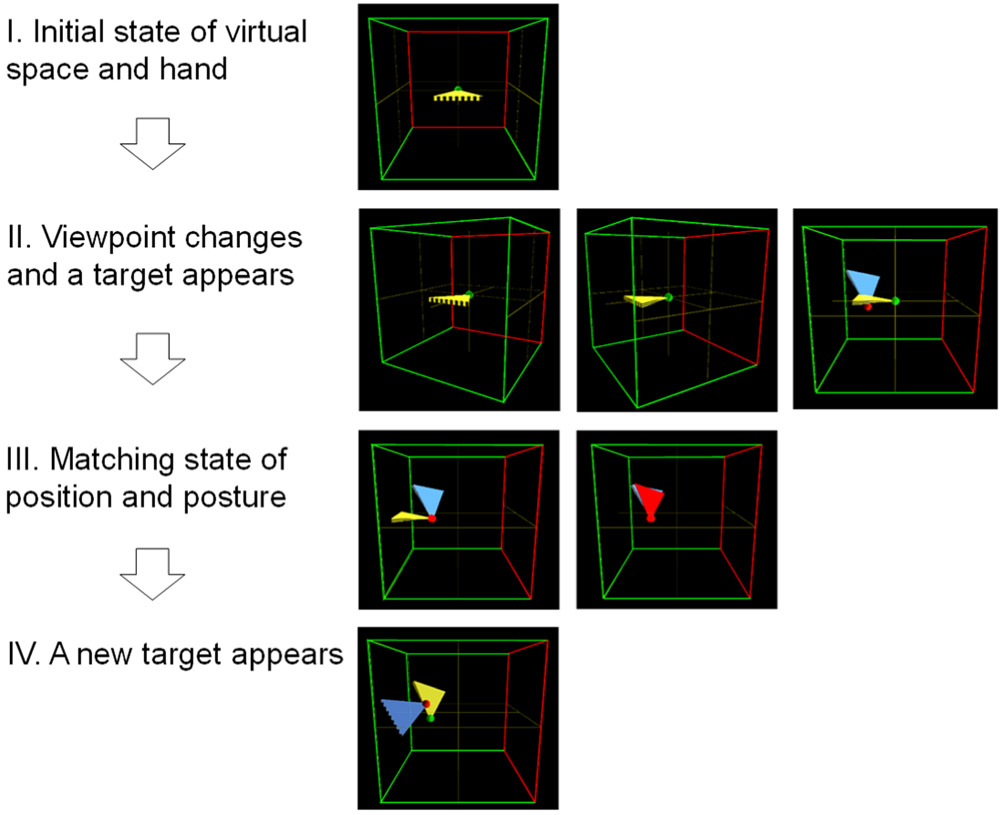

Process of the evaluation game

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
