Paper:

Development of a Multi-User Remote Video Monitoring System Using a Single Mirror-Drive Pan-Tilt Mechanism

Ananta Adhi Wardana, Shaopeng Hu, Kohei Shimasaki, and Idaku Ishii

Smart Robotics Laboratory, Graduate School of Advanced Science and Engineering, Hiroshima University

1-4-1 Kagamiyama, Higashi-Hiroshima, Hiroshima 739-8527, Japan

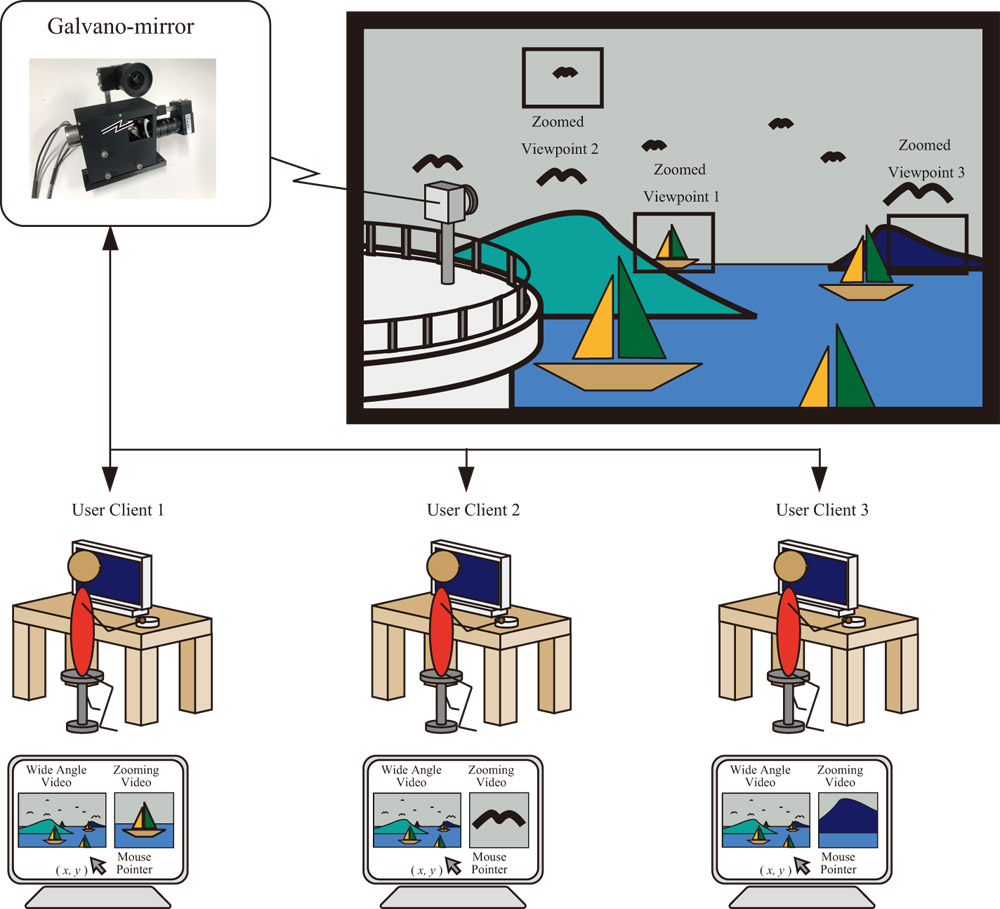

In this paper, we develop a video monitoring system concept based on a single mirror-drive pan-tilt mechanism. The system provides a wide-angle video and multiple zoomed videos, and the viewing angle of each zoomed video can be controlled independently. Several users can access the system simultaneously by connecting their personal computers (PCs) to a server PC over the network, and each user can change the viewing angle of their respective zoomed video. The system is suitable for remote observation decks for sightseeing. It is composed of two high-speed cameras, one with a wide-angle lens and one with a zoom lens, and a high-speed mirror-drive pan-tilt mechanism. The system implements convolutional neural network (CNN)-based object detection to help each user client identify objects appearing in the wide-angle and zoomed videos. We demonstrate that the proposed system can provide wide-angle and zoomed videos with CNN-based object detection to four clients, with each client receiving a zoomed video at 30 frames per second.
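Serving four clients at 30 fps each from one zoomed camera and one mirror suggests time-division multiplexing. This is an assumption for illustration, not a mechanism the abstract spells out: the mirror visits each client's requested viewpoint in turn, the zoomed camera runs at N × 30 fps, and its frames are split back into per-client streams. The minimal Python sketch below shows only that bookkeeping. `ClientView`, `required_camera_fps`, `schedule`, and `demultiplex` are hypothetical names, and mirror control, image capture, and CNN inference are omitted.

```python
from dataclasses import dataclass
from typing import Dict, Iterator, List, Sequence, Tuple


@dataclass
class ClientView:
    """Hypothetical per-client state: the viewing angle (degrees) a user requested."""
    client_id: int
    pan_deg: float
    tilt_deg: float


def required_camera_fps(num_clients: int, fps_per_client: float) -> float:
    """Frame rate the zoomed camera must sustain if each client owns one time slot (assumed TDM)."""
    return num_clients * fps_per_client


def schedule(clients: Sequence[ClientView], num_frames: int) -> Iterator[Tuple[int, ClientView]]:
    """Round-robin slots: frame k is captured with the mirror aimed at client k mod N."""
    n = len(clients)
    for k in range(num_frames):
        yield k, clients[k % n]


def demultiplex(frames: Sequence[object], clients: Sequence[ClientView]) -> Dict[int, List[object]]:
    """Split one interleaved frame sequence back into one stream per client."""
    streams: Dict[int, List[object]] = {c.client_id: [] for c in clients}
    for (_, client), frame in zip(schedule(clients, len(frames)), frames):
        streams[client.client_id].append(frame)
    return streams


if __name__ == "__main__":
    clients = [ClientView(i, pan_deg=10.0 * i, tilt_deg=-5.0) for i in range(4)]
    fps = required_camera_fps(len(clients), 30.0)
    print(fps)                     # 120.0
    print(round(1000.0 / fps, 2))  # 8.33 ms per slot, shared by mirror settling and exposure
    for k, c in schedule(clients, 4):
        # A real system would command the mirror to (c.pan_deg, c.tilt_deg) here (hypothetical step).
        print(k, c.client_id, c.pan_deg, c.tilt_deg)
    frames = [f"frame{k}" for k in range(8)]
    print(demultiplex(frames, clients)[0])  # ['frame0', 'frame4']
```

Round-robin gives every client the same frame rate and a uniform frame interval (every N-th camera frame). This is a choice made for the sketch. The abstract does not specify how slots are allocated among clients.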

System concept

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.