Paper:

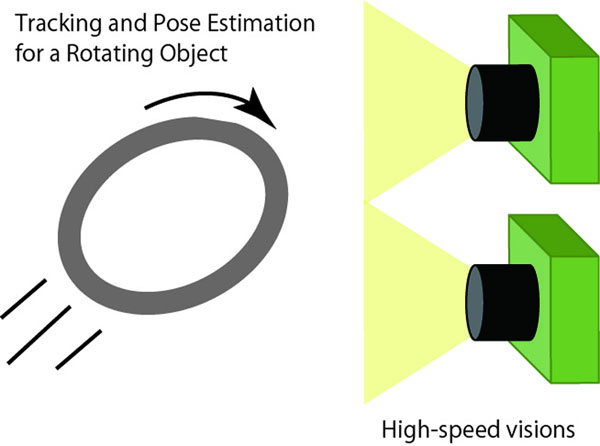

Real-Time Marker-Based Tracking and Pose Estimation for a Rotating Object Using High-Speed Vision

Xiao Liang*, Masahiro Hirano**, and Yuji Yamakawa***

*Department of Mechanical Engineering, The University of Tokyo

4-6-1 Komaba, Meguro-ku, Tokyo 153-8505, Japan

**Institute of Industrial Science, The University of Tokyo

4-6-1 Komaba, Meguro-ku, Tokyo 153-8505, Japan

***Interfaculty Initiative in Information Studies, The University of Tokyo

4-6-1 Komaba, Meguro-ku, Tokyo 153-8505, Japan

Object tracking and pose estimation have always been challenging tasks in robotics, particularly for rotating objects, which move quickly and undergo complex pose variations. In this study, we introduce a marker-based tracking and pose estimation method for rotating objects using a high-speed vision system. The method obtains pose information at frequencies greater than 500 Hz and can still estimate the pose when some of the markers are lost during tracking. A robot catching experiment shows that the accuracy and update frequency of the system are sufficient for high-speed tracking tasks.

Pose estimation for a rotating object


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.