Paper:

Visual SLAM Framework Based on Segmentation with the Improvement of Loop Closure Detection in Dynamic Environments

Leyuan Sun*,**,***, Rohan P. Singh*,**,***, and Fumio Kanehiro*,**,***

*Department of Intelligent and Mechanical Interaction Systems, Graduate School of Science and Technology, University of Tsukuba

1-1-1 Tennodai, Tsukuba, Ibaraki 305-8577, Japan

**CNRS-AIST JRL (Joint Robotics Laboratory), International Research Laboratory (IRL)

1-1-1 Umezono, Tsukuba, Ibaraki 305-8560, Japan

***National Institute of Advanced Industrial Science and Technology (AIST)

1-1-1 Umezono, Tsukuba, Ibaraki 305-8560, Japan

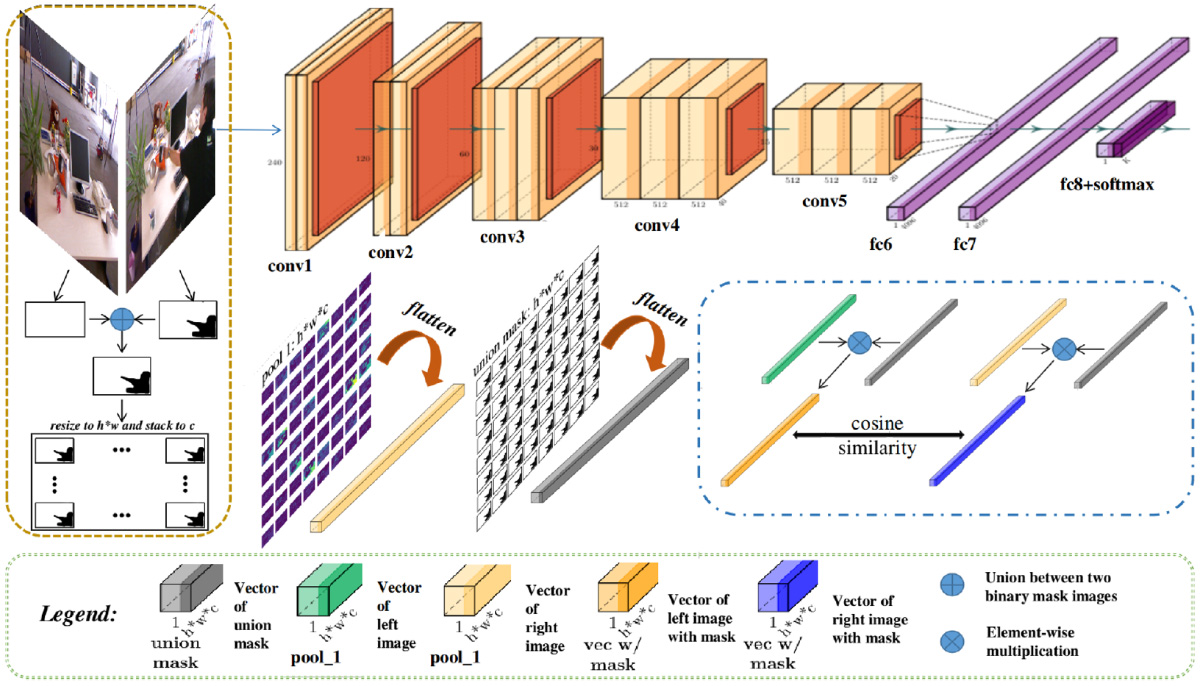

Most simultaneous localization and mapping (SLAM) systems assume a static environment. When SLAM is used in dynamic environments, the accuracy of every part of the SLAM system degrades. We call this problem dynamic SLAM. In this study, we propose solutions to three main problems in dynamic SLAM: camera tracking, three-dimensional map reconstruction, and loop closure detection. For object segmentation, we propose a geometry-based method, a deep learning-based method, and a combination of the two. We use the segmentation results to generate a mask. With this mask, we filter out keypoints in dynamic areas, which cause errors in visual odometry. We also discard the features that the CNN extracts from dynamic areas, which improves loop closure detection. We then validate the proposed loop closure detection method using precision-recall curves and confirm the framework's performance on multiple datasets. We use the absolute trajectory error and the relative pose error as metrics to compare the accuracy of the proposed SLAM framework with that of state-of-the-art methods. These findings could improve the robustness of SLAM when mobile robots work alongside humans. In addition, the object-based point cloud produced as a byproduct may be useful for other robotics tasks.
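
The two evaluation-side ideas in the abstract can be sketched in a few lines: removing keypoints that fall inside a segmentation mask, and computing the absolute trajectory error. This is a minimal illustration under stated assumptions, not the authors' implementation. The helper names `filter_dynamic_keypoints` and `ate_rmse` are hypothetical. We also assume that keypoints are (u, v) pixel coordinates and that segmentation yields a boolean mask `dynamic_mask[v][u]` that is True on dynamic objects. The ATE function computes the usual RMSE form. It assumes the estimated and ground-truth positions are already time-associated and aligned; the TUM RGB-D benchmark tools perform a rigid alignment before this step.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical representations chosen for illustration only.
Keypoint = Tuple[float, float]         # (u, v) pixel coordinates
Position = Tuple[float, float, float]  # (x, y, z) camera position


def filter_dynamic_keypoints(
    keypoints: Sequence[Keypoint],
    dynamic_mask: Sequence[Sequence[bool]],
) -> List[Keypoint]:
    """Return only the keypoints that land on static pixels.

    dynamic_mask[v][u] is True where segmentation labelled a dynamic object
    (an assumed convention). Keypoints outside the image are also dropped.
    """
    height = len(dynamic_mask)
    width = len(dynamic_mask[0]) if height else 0
    static: List[Keypoint] = []
    for u, v in keypoints:
        col, row = math.floor(u), math.floor(v)  # pixel containing the keypoint
        if not (0 <= row < height and 0 <= col < width):
            continue
        if not dynamic_mask[row][col]:
            static.append((u, v))
    return static


def ate_rmse(
    estimated: Sequence[Position],
    ground_truth: Sequence[Position],
) -> float:
    """RMSE of the absolute trajectory error over associated positions.

    Assumes both trajectories are already time-associated and aligned in a
    common frame; no alignment is performed here.
    """
    if not estimated or len(estimated) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and equal in length")
    squared = 0.0
    for p, q in zip(estimated, ground_truth):
        squared += sum((a - b) ** 2 for a, b in zip(p, q))
    return math.sqrt(squared / len(estimated))


if __name__ == "__main__":
    # 4x4 mask whose top-left 2x2 block is a (hypothetical) dynamic object.
    mask = [[r < 2 and c < 2 for c in range(4)] for r in range(4)]
    kps = [(0.2, 0.4), (3.0, 3.0), (1.4, 0.6), (5.0, 1.0)]
    print(filter_dynamic_keypoints(kps, mask))  # [(3.0, 3.0)]

    est = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    gt = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0)]
    print(ate_rmse(est, gt))  # 1.0
```

In a real pipeline, the mask is often dilated slightly before filtering, so that keypoints on object boundaries are removed too. That step is omitted here for brevity.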

CNN-based loop closure detection (LCD) in dynamic environments
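
The sketch below roughly illustrates CNN-based LCD with dynamic areas excluded, in three steps. It pools a CNN feature map only over static cells, compares two frames by cosine similarity, and computes precision and recall at a similarity threshold; sweeping the threshold traces a precision-recall curve. Masked pooling, cosine similarity, and all names here (`masked_descriptor`, `cosine_similarity`, `precision_recall`, `pr_curve`) are our assumptions for illustration. The paper's exact descriptor and matching rule may differ. The feature map is assumed to be nested lists `feature_map[r][c]` of C-dimensional vectors, with the dynamic mask already resized to the same resolution.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]


def masked_descriptor(
    feature_map: Sequence[Sequence[Sequence[float]]],
    dynamic_mask: Sequence[Sequence[bool]],
) -> Vector:
    """Pool CNN features over static cells only, then L2-normalize.

    Summing instead of averaging is fine because the result is normalized.
    """
    channels = len(feature_map[0][0])
    pooled = [0.0] * channels
    for feat_row, mask_row in zip(feature_map, dynamic_mask):
        for vec, is_dynamic in zip(feat_row, mask_row):
            if is_dynamic:
                continue  # discard features extracted from dynamic areas
            for k in range(channels):
                pooled[k] += vec[k]
    norm = math.sqrt(sum(x * x for x in pooled))
    return [x / norm for x in pooled] if norm > 0 else pooled


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    if na == 0 or nb == 0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def precision_recall(
    scores: Sequence[float], is_loop: Sequence[bool], threshold: float
) -> Tuple[float, float]:
    """Precision and recall when pairs scoring >= threshold are declared loops."""
    tp = fp = fn = 0
    for score, truth in zip(scores, is_loop):
        predicted = score >= threshold
        if predicted and truth:
            tp += 1
        elif predicted:
            fp += 1
        elif truth:
            fn += 1
    # With no positive predictions, report precision 1.0 (a common PR-curve convention).
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def pr_curve(scores, is_loop, thresholds):
    """Sweep thresholds to obtain (threshold, precision, recall) points."""
    return [(t, *precision_recall(scores, is_loop, t)) for t in thresholds]


if __name__ == "__main__":
    # Query frame: the bottom-right cell is covered by a (hypothetical) walking person.
    query = masked_descriptor([[[1, 0], [1, 0]], [[0, 1], [9, 9]]],
                              [[False, False], [False, True]])
    candidate = masked_descriptor([[[2, 0], [2, 0]], [[0, 2], [0, 0]]],
                                  [[False, False], [False, False]])
    print(round(cosine_similarity(query, candidate), 6))  # 1.0
    print(precision_recall([0.9, 0.8, 0.3, 0.1], [True, False, True, False], 0.5))  # (0.5, 0.5)
```

In this toy example, the masked query matches the candidate exactly. Pooling over the whole query, including the dynamic cell, drops the similarity to about 0.96. This shows how features from moving objects can hide a true loop.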

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.