Paper:

CNN-Based Terrain Classification with Moisture Content Using RGB-IR Images

Tomoya Goto and Genya Ishigami

Keio University

3-14-1 Hiyoshi, Kohoku-ku, Yokohama, Kanagawa 223-8522, Japan

Unmanned mobile robots operating in rough terrain are a key technology for achieving smart agriculture and smart construction. The mobility performance of such robots depends strongly on the moisture content of the soil, yet few past studies have addressed terrain classification that takes moisture content into account. In this study, we demonstrate a convolutional neural network (CNN)-based terrain classification method using RGB-infrared (IR) images. The method first classifies the soil type and then categorizes the moisture content of the terrain. A three-step image preprocessing procedure for RGB-IR images is also integrated into the method so that it can be applied in an actual environment. An experimental study of the terrain classification confirmed that the proposed method achieved an accuracy of more than 99% in classifying the soil type. Furthermore, the classification accuracy of the moisture content was approximately 69% for pumice and 100% for dark soil. The proposed method can be useful in different scenarios, such as small-scale agriculture with mobile robots, moisture content monitoring in smart agriculture, and earthworks in small areas.
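The two-stage flow described above (soil type first, then moisture content) can be illustrated with a minimal sketch. It is not the authors' implementation: the trained CNNs are replaced by placeholder threshold rules, and the feature format (mean R, G, B, IR intensities in [0, 1]), the use of one moisture classifier per soil type, the moisture labels `wet`/`dry`, and all names and thresholds are assumptions made only for illustration.

```python
from typing import Callable, Dict, Sequence, Tuple

# A classifier maps the mean (R, G, B, IR) intensities of an image patch to a label.
# In the paper these roles are played by trained CNNs; the rules below are
# hypothetical stand-ins so that the cascade itself can be run and inspected.
Classifier = Callable[[Sequence[float]], str]


def soil_stub(features: Sequence[float]) -> str:
    """Placeholder for the soil-type CNN: bright patches are labeled pumice."""
    r, g, b, _ir = features
    return "pumice" if (r + g + b) / 3.0 > 0.5 else "dark_soil"


def make_moisture_stub(ir_threshold: float) -> Classifier:
    """Placeholder for a moisture CNN: IR below an arbitrary threshold means wet."""
    def classify(features: Sequence[float]) -> str:
        return "wet" if features[3] < ir_threshold else "dry"
    return classify


def classify_terrain(
    features: Sequence[float],
    soil_clf: Classifier,
    moisture_clfs: Dict[str, Classifier],
) -> Tuple[str, str]:
    """Stage 1 picks the soil type; stage 2 runs the moisture classifier for that soil."""
    soil = soil_clf(features)
    moisture = moisture_clfs[soil](features)
    return soil, moisture


# Assumed: one moisture classifier per soil type, with arbitrary thresholds.
MOISTURE_STUBS: Dict[str, Classifier] = {
    "pumice": make_moisture_stub(0.6),
    "dark_soil": make_moisture_stub(0.3),
}

print(classify_terrain((0.8, 0.7, 0.7, 0.4), soil_stub, MOISTURE_STUBS))
```

Routing the second stage on the first stage's output lets each moisture classifier specialize in one soil type, which is one plausible reading of why the reported moisture accuracy differs between pumice and dark soil.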

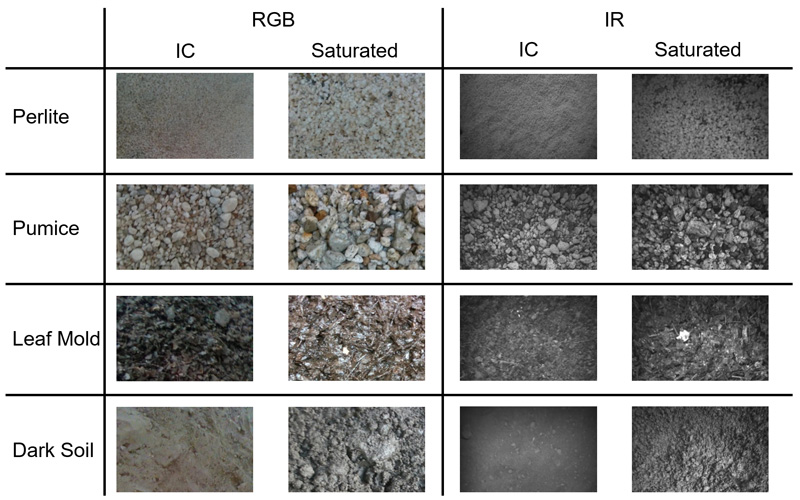

Typical examples of RGB-IR images of each soil sample (IC: initial condition)


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
