Paper:

Device-Free Handwritten Character Recognition Method Using Acoustic Signal

Atsushi Ogura, Hiroki Watanabe, and Masanori Sugimoto

Hokkaido University

Kita 14, Nishi 9, Kita-ku, Sapporo 060-0814, Japan

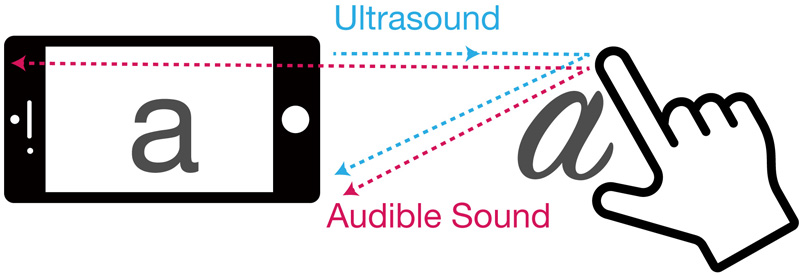

In this paper, we propose a method for recognizing characters handwritten with a finger using acoustic signals. The method runs on a smartphone placed on a flat surface, such as a desk. Specifically, it uses two signals: an ultrasonic wave that is transmitted from the smartphone and reflected by the finger, and the audible sound generated when a character is written. The proposed method requires no additional device for handwritten character recognition because it uses only the microphone and speaker built into the smartphone. Evaluation results showed that the method recognized 36 types of characters with an average accuracy of 77.8% in a low-noise environment for 10 subjects. In addition, we verified that combining the audible sound and the ultrasonic wave achieved higher recognition accuracy than using either signal alone.

Figure: Overview of the proposed method
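The abstract describes a single microphone recording that carries two kinds of information: the ultrasonic wave reflected by the finger and the audible sound of writing. As a rough illustration only, the sketch below splits the spectral energy of one short frame into an audible band and an ultrasonic band. It is not the authors' pipeline. The sampling rate, carrier frequency, band boundary, and all function names (`dft_magnitudes`, `band_energies`, `synth_frame`) are assumptions made for this example and do not come from the paper.

```python
import cmath
import math

# All constants below are illustrative assumptions, not values from the paper.
SAMPLE_RATE = 48_000   # Hz; a common smartphone audio sampling rate
CARRIER_HZ = 20_000    # hypothetical ultrasonic carrier frequency
SPLIT_HZ = 18_000      # hypothetical boundary between the two bands


def dft_magnitudes(frame):
    """Return |X[k]| for k = 0..N/2 using a naive DFT (adequate for short frames)."""
    n = len(frame)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                  for i, x in enumerate(frame))
        mags.append(abs(acc))
    return mags


def band_energies(frame, sample_rate=SAMPLE_RATE, split_hz=SPLIT_HZ):
    """Split a frame's spectral energy into (audible, ultrasonic) parts."""
    n = len(frame)
    audible = 0.0
    ultrasonic = 0.0
    for k, mag in enumerate(dft_magnitudes(frame)):
        freq = k * sample_rate / n  # centre frequency of bin k
        if freq < split_hz:
            audible += mag * mag
        else:
            ultrasonic += mag * mag
    return audible, ultrasonic


def synth_frame(n, audible_amp, ultrasonic_amp, audible_hz=2_000):
    """Synthetic stand-in for a microphone frame: a tone in each band."""
    return [
        audible_amp * math.sin(2 * math.pi * audible_hz * i / SAMPLE_RATE)
        + ultrasonic_amp * math.sin(2 * math.pi * CARRIER_HZ * i / SAMPLE_RATE)
        for i in range(n)
    ]


if __name__ == "__main__":
    frame = synth_frame(480, audible_amp=1.0, ultrasonic_amp=0.5)  # 10 ms frame
    audible, ultrasonic = band_energies(frame)
    print(f"audible energy: {audible:.1f}, ultrasonic energy: {ultrasonic:.1f}")
```

In a real system the frame would come from the smartphone microphone, and per-band features such as these would be only one possible input to a classifier. The abstract does not specify which features or classifier the authors use.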

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
