Paper:

Tomato Growth State Map for the Automation of Monitoring and Harvesting

Takuya Fujinaga, Shinsuke Yasukawa, and Kazuo Ishii

Kyushu Institute of Technology

2-4 Hibikino, Wakamatsu, Kitakyushu, Fukuoka 808-0196, Japan

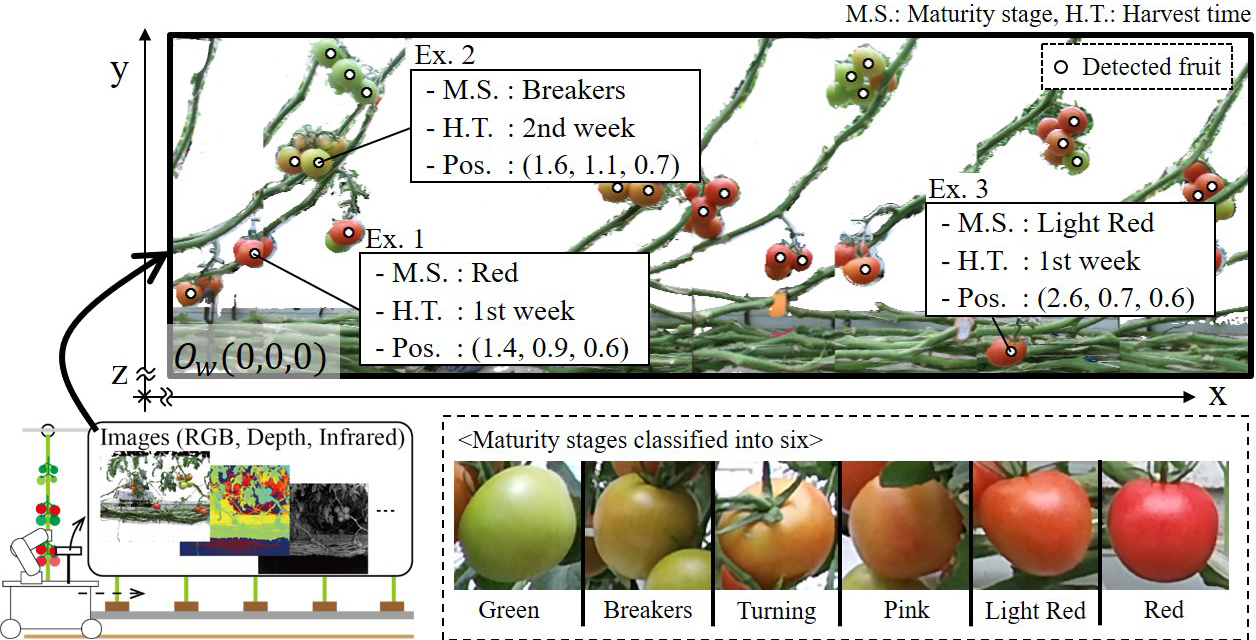

To realize smart agriculture, we are working to systematize the entire process, from monitoring to harvesting tomato fruits, using robots. In this paper, we explain a method for generating a tomato growth state map, which is used to monitor the growth stages of tomato fruits and to decide the harvesting strategy of the robots. The tomato growth state map visualizes the relationship between maturity stage, harvest time, and yield. We propose a method for generating the tomato growth state map, a method for recognizing tomato fruits, and a method for estimating their growth states (maturity stages and harvest times). For tomato fruit recognition, we demonstrate that a simple machine learning method, which uses a limited training dataset and the optical properties of tomato fruits in infrared images, outperforms a more complex convolutional neural network, although the results depend on how the training dataset is constructed. For the estimation of the growth states, we surveyed experienced farmers to quantify maturity into six stages and harvest time into three terms, and the growth states were then estimated based on the survey results. To verify the tomato growth state map, we conducted experiments in an actual tomato greenhouse and report the results herein.

Tomato growth state map with fruit information
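The abstract describes the growth state map in terms of what it relates: maturity stage, harvest time, and yield. As a minimal sketch of how such a map could be held in memory, the Python below assumes that each recognized fruit carries a position along the row, a maturity stage encoded as an integer from 1 to 6, and a harvest term encoded as an integer from 1 to 3. The class and function names (`Fruit`, `yield_by_term`, `stage_term_table`, `harvest_targets`, `fruits_in_segment`), the integer encodings, and the rule that term 1 means "harvest first" are all hypothetical illustrations, not the authors' implementation.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical encodings: the paper quantifies maturity into six stages and
# harvest time into three terms; the integer labels and ordering are assumptions.
MATURITY_STAGES = range(1, 7)  # 1 = least mature ... 6 = most mature (assumed)
HARVEST_TERMS = range(1, 4)    # 1 = earliest term ... 3 = latest term (assumed)


@dataclass(frozen=True)
class Fruit:
    """One recognized tomato fruit and its estimated growth state (hypothetical)."""
    x: float           # position along the greenhouse row (units assumed)
    height: float      # height on the plant (units assumed)
    maturity: int      # estimated maturity stage, 1..6
    harvest_term: int  # estimated harvest term, 1..3

    def __post_init__(self) -> None:
        # Reject labels outside the assumed six-stage / three-term scheme.
        if self.maturity not in MATURITY_STAGES:
            raise ValueError(f"maturity stage must be 1-6, got {self.maturity}")
        if self.harvest_term not in HARVEST_TERMS:
            raise ValueError(f"harvest term must be 1-3, got {self.harvest_term}")


def yield_by_term(fruits):
    """Count fruits expected in each harvest term, used here as a proxy for yield."""
    counts = Counter(f.harvest_term for f in fruits)
    return {term: counts[term] for term in HARVEST_TERMS}


def stage_term_table(fruits):
    """Nested dict: table[stage][term] = number of fruits with that growth state."""
    table = {stage: {term: 0 for term in HARVEST_TERMS} for stage in MATURITY_STAGES}
    for f in fruits:
        table[f.maturity][f.harvest_term] += 1
    return table


def harvest_targets(fruits):
    """Fruits in the earliest harvest term, ordered by position along the row.

    Assumes (hypothetically) that a robot harvests term-1 fruits first and
    visits them in a single pass down the row.
    """
    return sorted((f for f in fruits if f.harvest_term == 1), key=lambda f: f.x)


def fruits_in_segment(fruits, x_min, x_max):
    """Fruits whose position lies in [x_min, x_max), e.g. one section of a row."""
    return [f for f in fruits if x_min <= f.x < x_max]


if __name__ == "__main__":
    fruits = [
        Fruit(x=0.9, height=1.1, maturity=4, harvest_term=1),
        Fruit(x=0.4, height=1.2, maturity=6, harvest_term=1),
        Fruit(x=1.5, height=1.3, maturity=2, harvest_term=2),
        Fruit(x=2.2, height=0.9, maturity=1, harvest_term=3),
    ]
    print(yield_by_term(fruits))                     # {1: 2, 2: 1, 3: 1}
    print([f.x for f in harvest_targets(fruits)])    # [0.4, 0.9]
```

Keeping the maturity stage and the harvest term as separate fields, rather than deriving one from the other, leaves room for whatever survey-based mapping is used to estimate harvest time; the aggregation helpers then answer the map's questions (how many fruits per term, and which fruits a robot should visit first) directly.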

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
