Development Report:

Rapid Development of a Mobile Robot for the Nakanoshima Challenge Using a Robot for Intelligent Environments

Tomohiro Umetani, Yuya Kondo, and Takuma Tokuda

Department of Intelligence and Informatics, Konan University

8-9-1 Okamoto, Higashinada, Kobe, Hyogo 658-8501, Japan

Automated mobile platforms are commonly used to provide services for people in intelligent environments. Data on the physical positions of personal electronic devices and mobile robots are important for information services and robotic applications. Automated mobile robots are therefore required to reconstruct location data during surveillance tasks. This paper describes the development of an autonomous mobile robot that performs tasks in intelligent environments. In particular, the robot constructed route maps of outdoor environments through simultaneous localization and mapping (SLAM), using laser imaging, detection, and ranging (LiDAR) and RGB-D sensors. The mobile robot system was developed on the Robot Operating System (ROS), reusing existing software. The robot participated in the Nakanoshima Challenge, a demonstration experiment for mobile robots held in Osaka, Japan. The results of the experiments and outdoor field tests demonstrate the feasibility of the proposed robot system.

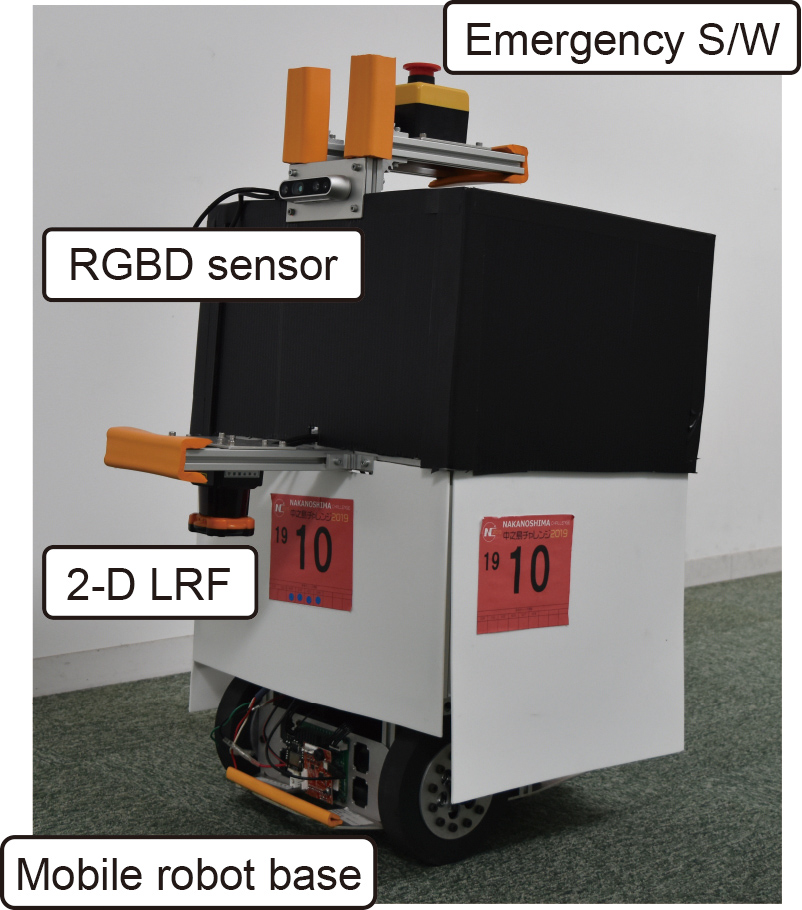

Robot URI-Konan (a) for the Nakanoshima Challenge

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
