Paper:

Outdoor Human Detection with Stereo Omnidirectional Cameras

Shunya Tanaka and Yuki Inoue

Osaka Institute of Technology

1-45 Chayamachi, Kita-ku, Osaka 530-8568, Japan

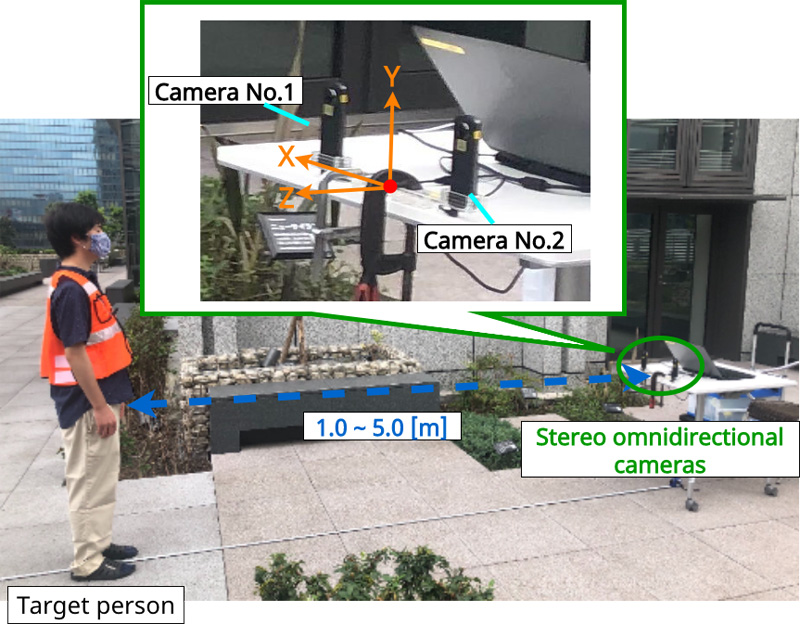

An omnidirectional camera captures the full 360° surroundings in a single image, and the azimuth angle of a target object or person can be read directly from that image. By configuring a stereo set of two omnidirectional cameras, we can determine the azimuth angle of a target from each camera using the images captured by the left and right cameras. A target person is localized in the image by a region-based convolutional neural network, and the distance to that person is measured from the parallax between the two azimuth angles.
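The distance measurement from the parallax between two azimuth angles can be illustrated with plain triangulation. The following is a minimal sketch and not necessarily the authors' formulation. It assumes that the two cameras sit on the x axis at `(-baseline/2, 0)` and `(+baseline/2, 0)`, that both azimuth angles are measured counterclockwise from the +x axis in radians, and that the target lies in the same horizontal plane. The function name `triangulate_from_azimuths` and its parameters are hypothetical.

```python
import math


def triangulate_from_azimuths(theta_left, theta_right, baseline):
    """Estimate a target's planar position from two azimuth angles.

    Assumed geometry (illustrative, not taken from the paper):
    left camera at (-baseline/2, 0), right camera at (+baseline/2, 0),
    angles in radians measured counterclockwise from the +x axis.
    Returns (x, y, distance from the midpoint of the baseline).
    """
    # The parallax is the angle subtended at the target by the two cameras.
    parallax = theta_right - theta_left
    sin_parallax = math.sin(parallax)
    if abs(sin_parallax) < 1e-9:
        raise ValueError("rays are (nearly) parallel; distance is undefined")

    # Law of sines in the triangle (left camera, right camera, target).
    range_left = baseline * math.sin(theta_right) / sin_parallax
    if range_left <= 0:
        raise ValueError("rays diverge; the two angles are inconsistent")

    # Walk along the left camera's ray to reach the target.
    x = -baseline / 2.0 + range_left * math.cos(theta_left)
    y = range_left * math.sin(theta_left)
    return x, y, math.hypot(x, y)
```

For example, with a 2 m baseline and azimuths of 45° from the left camera and 135° from the right camera, the sketch places the target about 1 m in front of the midpoint of the baseline. As the parallax shrinks toward zero, small errors in either angle produce large errors in the distance, which is why the near-parallel case is rejected.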

Depth estimation of target person by object recognition and color similarity


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
