Paper:

Outdoor Autonomous Navigation Utilizing Proximity Points of 3D Pointcloud

Yuichi Tazaki and Yasuyoshi Yokokohji

Graduate School of Engineering, Kobe University

1-1 Rokkodai-cho, Nada-ku, Kobe, Hyogo 657-8501, Japan

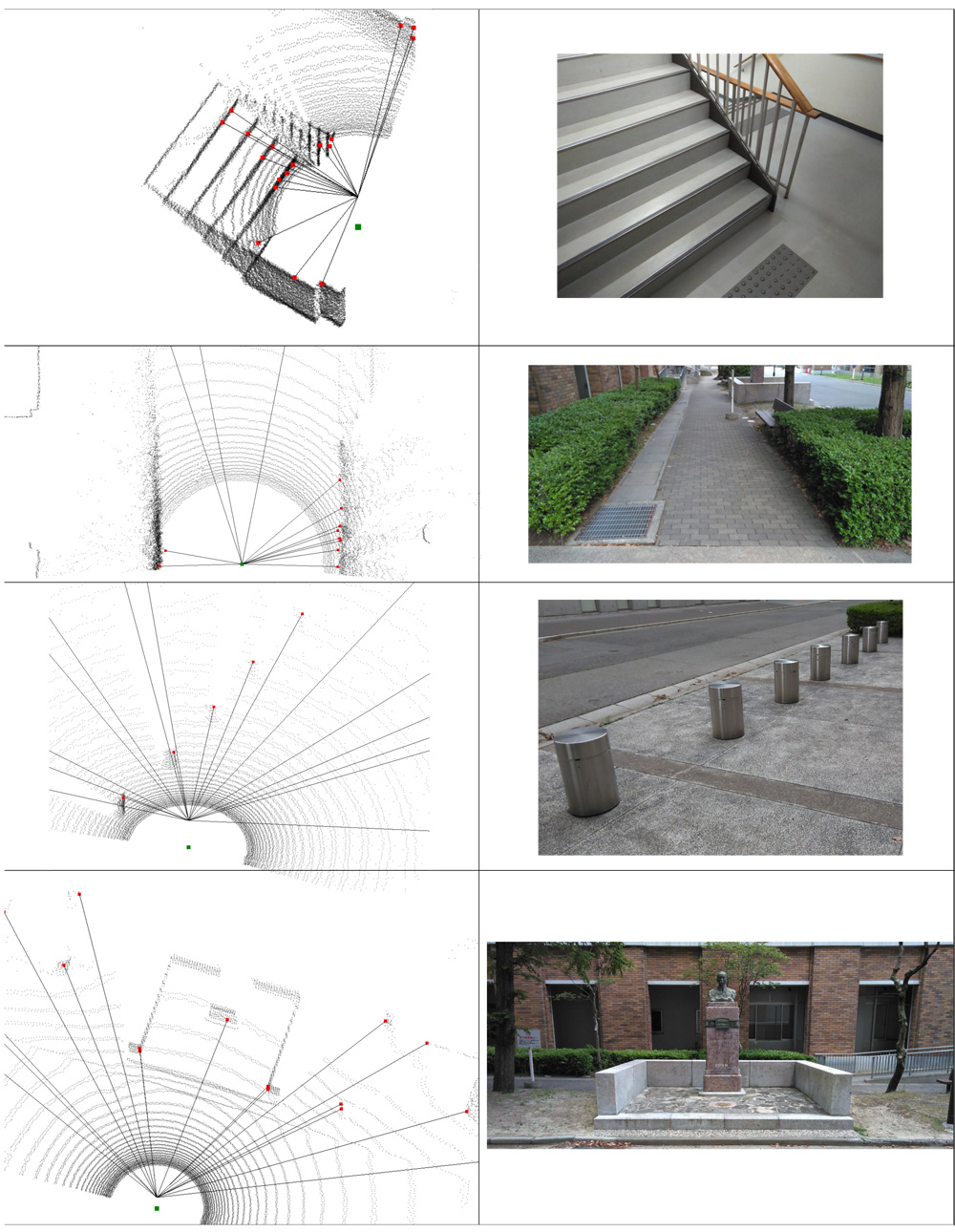

In this paper, an autonomous navigation method that utilizes proximity points of 3D range data is proposed for use in mobile robots. Some useful geometric properties of proximity points are derived, and a computationally efficient algorithm for extracting such points from 3D pointclouds is presented. Unlike previously proposed keypoints, the proximity point does not require any computationally expensive analysis of the local curvature, and is useful for detecting reliable keypoints in an environment where objects with definite curvatures such as edges and flat surfaces are scarce. Moreover, a particle-filter-based self-localization method that uses proximity points for a similarity measure of observation is presented. The proposed method was implemented in a real mobile robot system, and its performance was tested in an outdoor experiment conducted during Nakanoshima Challenge 2019.

Proximity points extracted from 3D pointcloud
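The abstract only names the ingredients of the localizer. As a rough illustration of how a keypoint-based similarity can drive the measurement update of a particle filter, the sketch below scores each particle pose by the fraction of observed keypoints that land near a map keypoint, then reweights and resamples the particles. The 2D pose `(x, y, theta)`, the hit-fraction score, the `radius` and `eps` parameters, and all function names are hypothetical assumptions made for illustration. This is not the similarity measure or the implementation defined in the paper.

```python
import math
import random

# Illustrative sketch only. The pose model, keypoint format, and similarity
# score are hypothetical stand-ins, not the paper's definitions.
# Pose: (x, y, theta) in the map frame. Keypoints: (x, y) tuples.

def transform(pose, p):
    """Map a keypoint from the robot frame into the map frame."""
    x, y, th = pose
    c, s = math.cos(th), math.sin(th)
    return (x + c * p[0] - s * p[1], y + s * p[0] + c * p[1])

def inverse_transform(pose, q):
    """Map a keypoint from the map frame into the robot frame."""
    x, y, th = pose
    c, s = math.cos(th), math.sin(th)
    dx, dy = q[0] - x, q[1] - y
    return (c * dx + s * dy, -s * dx + c * dy)

def similarity(pose, observed, map_points, radius=0.5):
    """Hypothetical score: fraction of observed keypoints that fall within
    `radius` of some map keypoint when the robot is placed at `pose`."""
    if not observed:
        return 0.0
    r2 = radius * radius
    hits = 0
    for p in observed:
        qx, qy = transform(pose, p)
        if any((qx - mx) ** 2 + (qy - my) ** 2 <= r2 for mx, my in map_points):
            hits += 1
    return hits / len(observed)

def update_weights(particles, weights, observed, map_points, eps=1e-3):
    """Measurement update: scale each weight by its similarity score, then
    normalize. `eps` keeps every weight strictly positive."""
    new = [w * (similarity(p, observed, map_points) + eps)
           for p, w in zip(particles, weights)]
    total = sum(new)
    return [w / total for w in new]

def systematic_resample(particles, weights, rng):
    """Low-variance (systematic) resampling: one random offset, n evenly
    spaced pointers into the cumulative weight distribution."""
    n = len(particles)
    step = 1.0 / n
    u = rng.random() * step
    out, i, cumulative = [], 0, weights[0]
    for k in range(n):
        target = u + k * step
        while target > cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        out.append(particles[i])
    return out

if __name__ == "__main__":
    rng = random.Random(0)
    map_points = [(0.0, 0.0), (5.0, 1.0), (3.0, 4.0), (-2.0, 3.0)]
    true_pose = (1.0, 2.0, 0.3)
    # Simulate a noise-free observation taken from the true pose.
    observed = [inverse_transform(true_pose, m) for m in map_points]
    particles = [true_pose, (3.0, -1.0, 1.2)]
    weights = update_weights(particles, [0.5, 0.5], observed, map_points)
    print(weights)  # the particle at the true pose dominates
    print(systematic_resample(particles, weights, rng))
```

In this toy run the particle placed at the true pose scores 1.0 and the other scores 0.0, so nearly all of the weight moves to the first particle and resampling duplicates it. A practical localizer would also need a motion model, observation noise, and a spatial index for the neighbor test; the brute-force `any(...)` loop is used here only for brevity.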

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.