Paper:

Autonomous Mobile Robot for Outdoor Slope Using 2D LiDAR with Uniaxial Gimbal Mechanism

Shunya Hara*, Toshihiko Shimizu*, Masanori Konishi*, Ryotaro Yamamura*, and Shuhei Ikemoto**

*Kobe City College of Technology

8-3 Gakuen-Higashimachi, Nishi-ku, Kobe 651-2194, Japan

**Graduate School of Life Science and Systems Engineering, Kyushu Institute of Technology

2-4 Hibikino, Wakamatsu, Kitakyushu, Fukuoka 808-0196, Japan

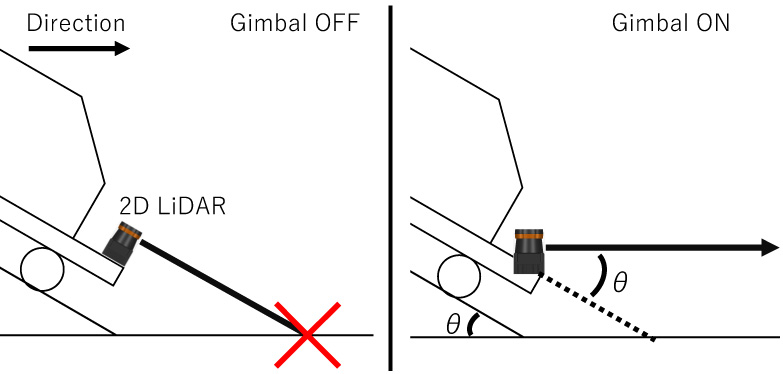

The Nakanoshima Challenge is a contest for developing sophisticated navigation systems for robots that collect garbage in outdoor public spaces. In this study, we design a robot named Navit(oo)n and evaluate its performance in public spaces such as city parks. Navit(oo)n is equipped with two 2D LiDAR scanners with a uniaxial gimbal mechanism, which improves the robustness of self-localization on slopes. The gimbal mechanism adjusts the angle of the LiDAR scanners, preventing the ground from being erroneously detected. We evaluate the navigation performance of Navit(oo)n in the Nakanoshima Challenge and its Extra Challenges.

Uniaxial gimbal mechanism for slopes
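The geometry behind erroneous ground detection can be illustrated with a minimal Python sketch. This is not the authors' implementation. It assumes a flat surface ahead of the robot, a pitch convention in which positive means nose down, and a hypothetical level-stabilizing law that counter-rotates the LiDAR by the robot's measured pitch and saturates at a travel limit. The abstract states neither the actual control law, the attitude sensor, the sensor height, nor the gimbal limits. The function names `ground_hit_distance`, `gimbal_command`, and `residual_tilt`, along with all numeric values, are illustrative only.

```python
import math


def ground_hit_distance(sensor_height_m: float, downward_tilt_rad: float) -> float:
    """Horizontal distance at which a scan plane, tilted downward by
    `downward_tilt_rad` relative to a flat surface, intersects that surface.

    Returns math.inf when the plane is level or tilted upward (no ground hit)."""
    if downward_tilt_rad <= 0.0:
        return math.inf
    return sensor_height_m / math.tan(downward_tilt_rad)


def gimbal_command(robot_pitch_rad: float, limit_rad: float) -> float:
    """Hypothetical level-stabilizing law: rotate the LiDAR opposite to the
    robot pitch (positive = nose down) so that the scan plane stays level,
    saturated at the mechanism's assumed travel limit `limit_rad`."""
    return max(-limit_rad, min(limit_rad, -robot_pitch_rad))


def residual_tilt(robot_pitch_rad: float, limit_rad: float) -> float:
    """Downward tilt of the scan plane that remains after gimbal compensation."""
    return robot_pitch_rad + gimbal_command(robot_pitch_rad, limit_rad)


if __name__ == "__main__":
    h = 0.5                      # hypothetical sensor height above ground [m]
    pitch = math.radians(5.0)    # hypothetical nose-down pitch, e.g. at the foot of a slope
    limit = math.radians(15.0)   # hypothetical gimbal travel limit
    print("fixed mount:", ground_hit_distance(h, pitch))
    print("with gimbal:", ground_hit_distance(h, residual_tilt(pitch, limit)))
```

With these hypothetical values, a fixed-mount scanner pitched 5° nose down meets the ground about 5.7 m ahead, where it would register the ground as an obstacle. The compensated scan plane stays level and never meets the ground. The command is saturated because any real gimbal has finite travel. Beyond that limit, a residual tilt reappears.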


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.