Paper:

Development of Edge-Node Map Based Navigation System Without Requirement of Prior Sensor Data Collection

Kazuki Takahashi, Jumpei Arima, Toshihiro Hayata, Yoshitaka Nagai, Naoya Sugiura, Ren Fukatsu, Wataru Yoshiuchi, and Yoji Kuroda

Meiji University

1-1-1 Higashimita, Tama-ku, Kawasaki, Kanagawa 214-8571, Japan

In this study, a novel framework for an autonomous robot navigation system is proposed. The navigation system uses an edge-node map, which can be created easily from electronic maps. Unlike general self-localization methods that use an occupancy grid map or a 3D point cloud map, it does not require running the robot in the target environment in advance to collect sensor data. In this system, internal sensors are mainly used for self-localization. Assuming that the robot is traveling on a road, its position is estimated by associating the robot’s travel trajectory with an edge. In addition, arrival at a node is determined using branch point information obtained from the edge-node map. Because this system does not use map matching, robust self-localization is possible even in a dynamic environment.
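The idea of associating a position with an edge and then judging node arrival can be illustrated with a minimal sketch. This is not the authors' implementation: the `Node` and `Edge` types, the functions `project_onto_edge` and `has_arrived`, the `branch_observed` flag, and the 2 m tolerance are all hypothetical names and values chosen for illustration. The sketch assumes a planar map with straight edges and a position estimate already obtained from internal sensors.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """A branch point of the edge-node map (hypothetical type)."""
    node_id: int
    x: float
    y: float


@dataclass(frozen=True)
class Edge:
    """A straight road segment between two nodes (hypothetical type)."""
    start: Node
    end: Node

    @property
    def length(self) -> float:
        return math.hypot(self.end.x - self.start.x, self.end.y - self.start.y)


def project_onto_edge(edge: Edge, x: float, y: float) -> tuple[float, float]:
    """Project a position onto an edge.

    Returns (along, lateral): the distance travelled along the edge,
    clamped to [0, edge.length], and the signed lateral offset
    (positive means left of the edge direction).
    """
    length = edge.length
    if length == 0.0:
        raise ValueError("edge has zero length")
    # Unit vector pointing from the start node to the end node.
    ux = (edge.end.x - edge.start.x) / length
    uy = (edge.end.y - edge.start.y) / length
    # Position relative to the start node.
    rx, ry = x - edge.start.x, y - edge.start.y
    along = rx * ux + ry * uy      # dot product: progress along the edge
    lateral = ux * ry - uy * rx    # 2D cross product: sideways offset
    return min(max(along, 0.0), length), lateral


def has_arrived(edge: Edge, along: float, branch_observed: bool,
                tolerance: float = 2.0) -> bool:
    """Assumed arrival rule: the robot is near the end node AND a branch
    matching the map has been observed. Both conditions are assumptions."""
    return branch_observed and (edge.length - along) <= tolerance
```

For an edge from (0, 0) to (10, 0), a robot at (4, 1) projects to 4 m along the edge with a 1 m offset to the left. Requiring both the remaining distance and the branch observation is one possible way to keep accumulated odometry error from triggering a node transition too early.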

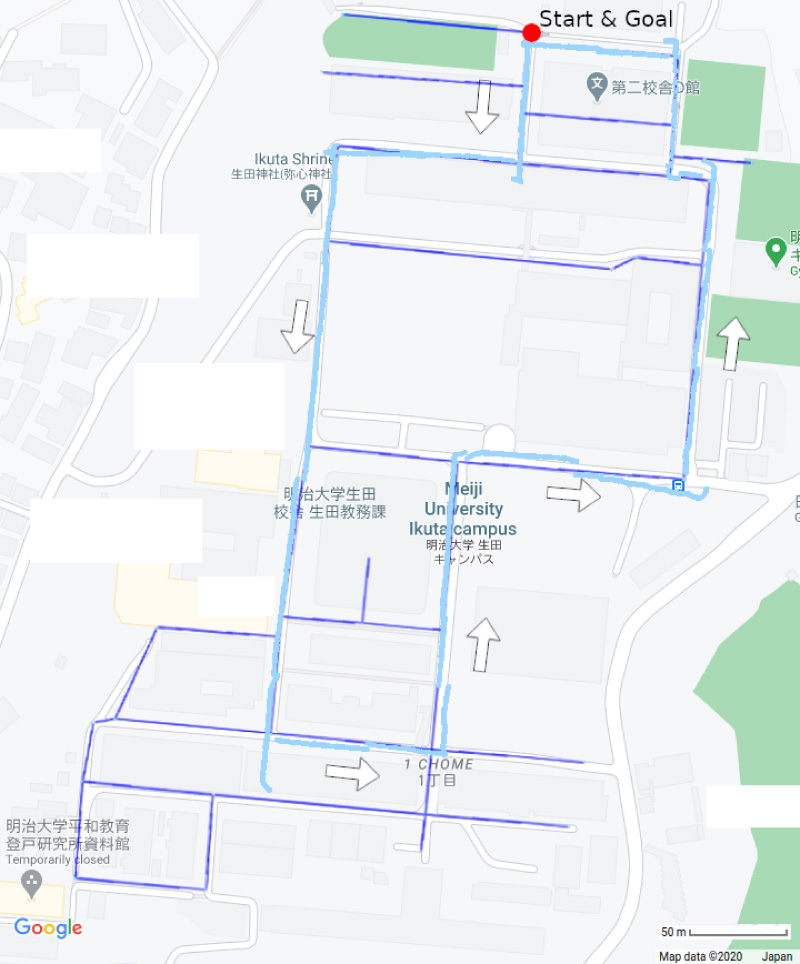

Navigation result using an edge-node map

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
