Paper:

Previous Announcement Method Using 3D CG Face Interface for Mobile Robot

Masahiko Mikawa*, Jiayi Lyu*, Makoto Fujisawa*, Wasuke Hiiragi**, and Toyoyuki Ishibashi***

*University of Tsukuba

1-2 Kasuga, Tsukuba, Ibaraki 305-8550, Japan

**Chubu University

1200 Matsumoto-cho, Kasugai, Aichi 487-8501, Japan

***Gifu Women’s University

80 Taromaru, Gifu, Gifu 501-2592, Japan

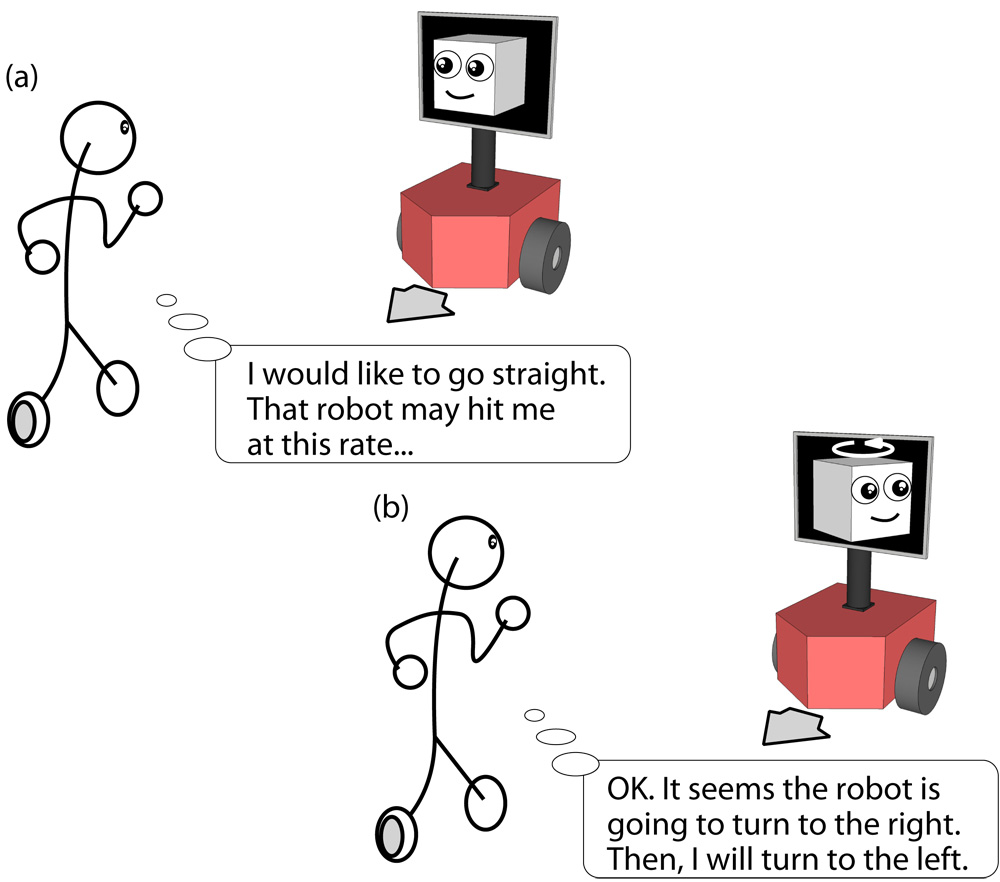

When a robot works among people in a public space, its behavior can make some people feel uncomfortable. One reason for this is that it is difficult for people to infer the robot's intended behavior from its appearance. This paper presents a new intention expression method using a three-dimensional computer graphics (3D CG) face model. The 3D CG face model is displayed on a flat panel screen and has two eyes and a head that can be rotated freely. When the mobile robot is about to change its traveling direction, it rotates its head and eyes toward the direction it intends to go, so that an oncoming person can recognize the robot's intention from this previous announcement. Three main types of experiments were conducted to confirm the validity and effectiveness of the proposed previous announcement method using the face interface. First, as a preliminary experiment, an appropriate timing for the previous announcement was determined from impression evaluations. Second, as the main experiments of this study, the differences between two conditions in which a pedestrian and the robot passed each other in a corridor, one with and one without the previous announcement, were evaluated. Finally, as reference experiments, the differences between the proposed face interface and a conventional robot head were analyzed. The experimental results confirmed the validity and effectiveness of the proposed method.

Previous announcement using combination of face and gaze directions
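To make the announcement mechanism concrete, the following Python sketch computes head and eye yaw commands that point the face toward the robot's next direction shortly before a turn. It is an illustration only, not the authors' implementation. The function names (`announcement_command`, `split_head_eye`, `should_announce`), the lead time `lead_time`, the eye rotation limit `eye_limit`, and the policy of letting the eyes rotate up to a limit while the head covers the remainder are all hypothetical assumptions. The paper determines the announcement timing experimentally, and that value is not reproduced here.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Pose2D:
    """Planar robot pose; theta is the heading in radians (counterclockwise positive)."""
    x: float
    y: float
    theta: float


def wrap_angle(a: float) -> float:
    """Wrap an angle into the range [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def relative_bearing(pose: Pose2D, target: Tuple[float, float]) -> float:
    """Angle of the target relative to the robot heading (left of the robot = positive)."""
    dx = target[0] - pose.x
    dy = target[1] - pose.y
    return wrap_angle(math.atan2(dy, dx) - pose.theta)


def split_head_eye(bearing: float, eye_limit: float) -> Tuple[float, float]:
    """Hypothetical policy: the eyes rotate up to +/-eye_limit and the head covers the rest."""
    eye = max(-eye_limit, min(eye_limit, bearing))
    head = bearing - eye
    return head, eye


def should_announce(distance_to_turn: float, speed: float, lead_time: float) -> bool:
    """Announce once the estimated time until the turn is at most lead_time (assumed parameter)."""
    if speed <= 0.0:
        return False  # a stationary robot has no upcoming turn to announce
    return distance_to_turn / speed <= lead_time


def announcement_command(pose: Pose2D,
                         turn_point: Tuple[float, float],
                         next_waypoint: Tuple[float, float],
                         speed: float,
                         lead_time: float,
                         eye_limit: float) -> Tuple[float, float]:
    """Return (head_yaw, eye_yaw) in radians relative to the robot body."""
    distance = math.hypot(turn_point[0] - pose.x, turn_point[1] - pose.y)
    if not should_announce(distance, speed, lead_time):
        return 0.0, 0.0  # no announcement yet: the face looks straight ahead
    # Point the head and eyes toward where the robot will go after the turn.
    bearing = relative_bearing(pose, next_waypoint)
    return split_head_eye(bearing, eye_limit)


if __name__ == "__main__":
    # Robot at the origin heading along +x, about to turn left at (2, 0) toward (2, 2).
    head, eye = announcement_command(Pose2D(0.0, 0.0, 0.0), (2.0, 0.0), (2.0, 2.0),
                                     speed=0.5, lead_time=5.0,
                                     eye_limit=math.radians(20))
    print(round(math.degrees(head), 1), round(math.degrees(eye), 1))  # prints 25.0 20.0
```

In this sketch the robot is 4 s from the turn, which is within the assumed 5 s lead time, so the face announces a 45° left turn, with 20° carried by the eyes and 25° by the head. Separating the timing test from the angle computation makes it easy to swap in a different timing rule or head/eye distribution.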

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 Internationa License.