Paper:

Autonomous Mobile Robot Moving Through Static Crowd: Arm with One-DoF and Hand with Involute Shape to Maneuver Human Position

Noriaki Imaoka*, Kazuma Kitazawa*, Mitsuhiro Kamezaki**, Shigeki Sugano***, and Takeshi Ando*

*Panasonic Corporation

Shiodome Hamarikyu Building, 8-21-1 Ginza, Chuo-ku, Tokyo 104-0061, Japan

**Research Institute for Science and Engineering, Waseda University

17 Kikui-cho, Shinjuku-ku, Tokyo 162-0044, Japan

***Department of Modern Mechanical Engineering, School of Creative Science and Engineering, Waseda University

3-4-1 Okubo, Shinjuku-ku, Tokyo 169-8555, Japan

Owing to manpower shortages, robots are expected to be increasingly integrated into society, and they will be required to navigate through crowded environments. To this end, we previously proposed a method of autonomous movement compatible with the physical contact signaling that humans use. However, this contact-based approach was investigated using an arm with six degrees of freedom (DoF), which increases the cost of the robot. In this paper, we propose a novel method of navigating through a human crowd that combines a conventional driving system for autonomous mobile robots with a one-DoF arm and an involute-shaped hand. The effectiveness of the method was confirmed experimentally.
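The abstract does not specify the hand's geometry. For readers unfamiliar with the term, the sketch below assumes that "involute" refers to the standard involute of a circle (the curve used for gear-tooth flanks), traced by the end of a taut string unwound from a base circle of radius r: x(t) = r(cos t + t sin t), y(t) = r(sin t - t cos t). The function names, base radius, and angle range are hypothetical illustration values, not the authors' design parameters.

```python
import math


def involute_point(r: float, t: float) -> tuple[float, float]:
    """Point on the involute of a circle of radius r at unwinding angle t (radians).

    Assumption: standard circle involute starting at (r, 0) when t = 0.
    """
    x = r * (math.cos(t) + t * math.sin(t))
    y = r * (math.sin(t) - t * math.cos(t))
    return x, y


def involute_profile(r: float, t_max: float, n: int) -> list[tuple[float, float]]:
    """Sample n points (n >= 2) evenly spaced in t on [0, t_max]."""
    return [involute_point(r, t_max * i / (n - 1)) for i in range(n)]


def involute_arc_length(r: float, t: float) -> float:
    """Closed-form arc length of the involute from t = 0 to t: r * t**2 / 2."""
    return 0.5 * r * t * t


if __name__ == "__main__":
    r = 0.05  # hypothetical base-circle radius in meters, for illustration only
    for x, y in involute_profile(r, math.pi / 2, 5):
        print(f"{x:.4f}, {y:.4f}")
```

Two properties follow directly from the parametrization and are handy for checking a sampled profile: the distance from the base circle's center grows as r·sqrt(1 + t²), and the arc length measured from t = 0 is r·t²/2.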

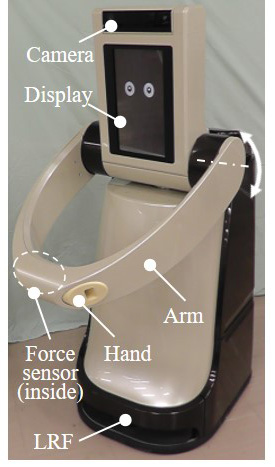

Outline of the developed robot


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.