Paper:

Cross-Domain Change Object Detection Using Generative Adversarial Networks

Takuma Sugimoto, Kanji Tanaka, and Kousuke Yamaguchi

University of Fukui

3-9-1 Bunkyo, Fukui-shi, Fukui 910-8507, Japan

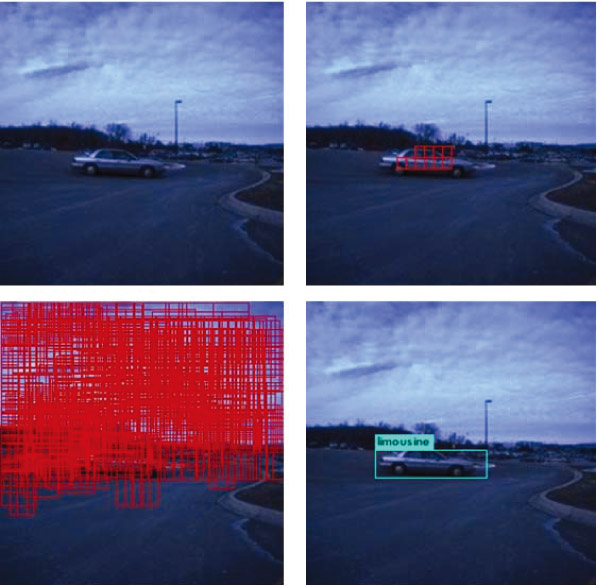

Image change detection is a fundamental problem for robotic map maintenance and long-term map learning. Local feature-based image comparison is one of the most basic schemes for addressing this problem. However, the local-feature approach encounters difficulties when the query and reference images come from different domains (e.g., time of day, weather, season). In this paper, we address the local-feature approach from the novel perspective of object-level region features. This study is inspired by the recent success of object-level region features in cross-domain visual place recognition (CD-VPR). Unlike previous work on CD-VPR, the cross-domain change detection (CD-CD) task requires matching a small part of the scene (i.e., the change) rather than the entire image, which is considerably more demanding. To address this issue, we explore the use of two independent object proposal techniques: supervised object proposal (e.g., YOLO) and unsupervised object proposal (e.g., BING). We combine these techniques and compute appearance features for the resulting arbitrarily shaped object regions by aggregating local features from a deep convolutional neural network (DCN). Experiments using the publicly available cross-season NCLT dataset validate the efficacy of the proposed approach.
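The region-feature idea summarized above can be illustrated with a minimal sketch. It is not the authors' implementation. It assumes that local DCN features are available as a grid of vectors, that each object proposal is given as a boolean mask over that grid, that aggregation is plain sum pooling followed by L2 normalization, and that a query region is scored by its cosine distance to the nearest reference region. The function names `region_descriptor` and `change_scores` are hypothetical, and the sketch uses plain Python lists only to stay dependency-free.

```python
import math


def region_descriptor(feature_map, mask):
    """Sum-pool the local feature vectors inside a region mask, then L2-normalize.

    feature_map: H x W grid of D-dimensional feature vectors (nested lists).
    mask: H x W grid of booleans marking an arbitrarily shaped region.
    Sum pooling is an assumption; the abstract only says features are aggregated.
    """
    dim = len(feature_map[0][0])
    pooled = [0.0] * dim
    for feat_row, mask_row in zip(feature_map, mask):
        for feat, inside in zip(feat_row, mask_row):
            if inside:
                for k, value in enumerate(feat):
                    pooled[k] += value
    norm = math.sqrt(sum(v * v for v in pooled))
    return [v / norm for v in pooled] if norm > 0 else pooled


def cosine_distance(a, b):
    """Cosine distance between two L2-normalized descriptors."""
    return 1.0 - sum(x * y for x, y in zip(a, b))


def change_scores(query_descs, reference_descs):
    """Score each query region by its distance to the nearest reference region.

    A high score means no similar region exists in the reference image, which
    is treated here (as an assumption) as evidence of change.
    """
    return [
        min((cosine_distance(q, r) for r in reference_descs), default=1.0)
        for q in query_descs
    ]


# Toy 2x2 feature grid with 2-dimensional local features.
feature_map = [
    [[1.0, 0.0], [1.0, 0.0]],
    [[0.0, 1.0], [0.0, 1.0]],
]
top_region = [[True, True], [False, False]]
bottom_region = [[False, False], [True, True]]

reference_descs = [region_descriptor(feature_map, top_region)]
query_descs = [
    region_descriptor(feature_map, top_region),
    region_descriptor(feature_map, bottom_region),
]
scores = change_scores(query_descs, reference_descs)
```

In this toy case the first query region has an identical counterpart in the reference set and receives a score near zero, while the second has none and receives a score near one. A practical system would use an array library and real proposal masks from detectors such as YOLO or BING.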

Cross-domain change object detection

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
