Paper:

Three-States-Transition Method for Fall Detection Algorithm Using Depth Image

Xiangbo Kong, Zelin Meng, Lin Meng, and Hiroyuki Tomiyama

College of Science and Engineering, Ritsumeikan University

1-1-1 Noji-higashi, Kusatsu, Shiga 525-8577, Japan

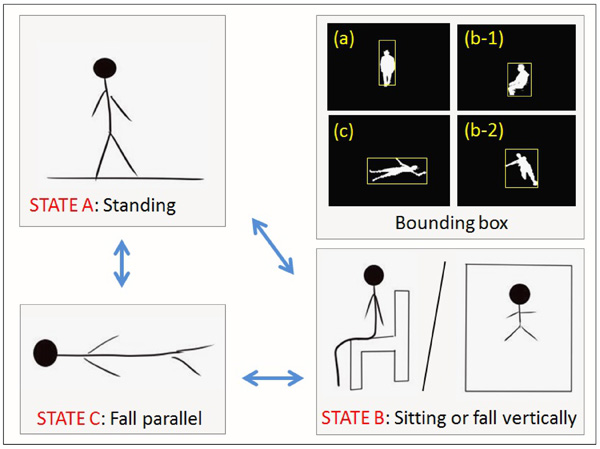

Currently, the proportion of elderly persons is increasing all over the world, and accidents involving falls have become a serious problem, especially for those who live alone. In this paper, we propose an enhancement to our algorithm for detecting such falls in an elderly person's living room. Our previous algorithm obtains a binary image from a depth camera and extracts the outline of the binary image by Canny edge detection. It then calculates the tangent vector angle of each outline pixel and divides the angles into 15° groups. If most of the tangent angles are below 45°, a fall is detected. Traditional fall detection systems cannot detect falls towards the camera, so related works require at least two cameras. To detect falls towards the camera, this study adds a three-states-transition method that distinguishes a fall state from a sitting-down state. The proposed algorithm computes the person's position states and divides them into three groups to determine the current state. Furthermore, the transition speed is calculated in order to differentiate sitting states from fall states. This study constructs a data set of over 1500 images, and the experimental evaluation on these images demonstrates that our enhanced algorithm is effective for detecting falls with only a single camera.
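The tangent-angle rule of the previous algorithm can be illustrated with a minimal Python sketch. It is not the authors' implementation. As assumptions, a simple 4-neighbour boundary test stands in for Canny edge detection, the tangent at each outline pixel is taken perpendicular to a 3×3 Sobel gradient, angles are folded into [0°, 90°] measured from the horizontal, and "most" is read as "more than half". The function names (`outline_pixels`, `sobel`, `tangent_angles`, `is_fall`) are hypothetical.

```python
import math
from collections import Counter


def outline_pixels(mask):
    """Foreground pixels with a 4-connected background (or out-of-image) neighbour.

    Assumed stand-in for the Canny edge detection used in the paper.
    """
    h, w = len(mask), len(mask[0])
    out = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue
            for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                ny, nx = y + dy, x + dx
                if not (0 <= ny < h and 0 <= nx < w) or not mask[ny][nx]:
                    out.append((y, x))
                    break
    return out


def sobel(mask, y, x):
    """3x3 Sobel gradient (gx, gy) at (y, x); pixels outside the image count as 0."""
    def px(r, c):
        return mask[r][c] if 0 <= r < len(mask) and 0 <= c < len(mask[0]) else 0

    gx = (px(y - 1, x + 1) + 2 * px(y, x + 1) + px(y + 1, x + 1)
          - px(y - 1, x - 1) - 2 * px(y, x - 1) - px(y + 1, x - 1))
    gy = (px(y + 1, x - 1) + 2 * px(y + 1, x) + px(y + 1, x + 1)
          - px(y - 1, x - 1) - 2 * px(y - 1, x) - px(y - 1, x + 1))
    return gx, gy


def tangent_angles(mask):
    """Tangent angle at each outline pixel, in degrees from horizontal, folded into [0, 90]."""
    angles = []
    for y, x in outline_pixels(mask):
        gx, gy = sobel(mask, y, x)
        if gx == 0 and gy == 0:
            continue  # no defined edge direction at this pixel
        # The tangent is perpendicular to the gradient direction.
        theta = (math.degrees(math.atan2(gy, gx)) + 90.0) % 180.0
        angles.append(min(theta, 180.0 - theta))
    return angles


def is_fall(mask, group_width=15, limit=45, majority=0.5):
    """Group angles into 15-degree bins; report a fall if most angles are below 45 degrees."""
    angles = tangent_angles(mask)
    if not angles:
        return False
    n_groups = 90 // group_width  # groups 0-15, 15-30, ..., 75-90
    groups = Counter(min(int(a // group_width), n_groups - 1) for a in angles)
    below = sum(n for g, n in groups.items() if (g + 1) * group_width <= limit)
    return below / len(angles) > majority
```

For a 20×4 horizontal silhouette, 36 of its 44 outline pixels have a tangent angle of 0°, so the rule reports a fall; the transposed, upright silhouette does not trigger it. A real pipeline would more likely use an image-processing library for the edge and gradient steps; plain lists keep the sketch dependency-free.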

Stand/sit/fall state transitions
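The three-states-transition idea can be sketched as a small state machine. Everything concrete here is an assumption for illustration, not the paper's method: the per-frame posture feature is taken to be the fraction of outline tangent angles below 45° (`low_ratio`), the two grouping thresholds and the speed threshold are made-up values, and transition speed is measured as the change in `low_ratio` per second since the last upright frame. The names `Posture`, `State`, `Frame`, `posture_group`, and `track_states` are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Posture(Enum):
    UPRIGHT = "upright"
    MIDDLE = "middle"
    LYING = "lying"


class State(Enum):
    STAND = "stand"
    SIT = "sit"
    FALL = "fall"


@dataclass
class Frame:
    t: float          # timestamp in seconds
    low_ratio: float  # assumed feature: fraction of tangent angles below 45 degrees


# Hypothetical thresholds, chosen only for illustration.
STAND_MAX = 0.3   # low_ratio at or below this: upright group
LIE_MIN = 0.6     # low_ratio at or above this: lying group
SPEED_MIN = 1.0   # change in low_ratio per second that counts as a fast transition


def posture_group(low_ratio: float) -> Posture:
    """Divide per-frame postures into three groups by the assumed feature."""
    if low_ratio <= STAND_MAX:
        return Posture.UPRIGHT
    if low_ratio >= LIE_MIN:
        return Posture.LYING
    return Posture.MIDDLE


def track_states(frames: List[Frame]) -> List[State]:
    """Return the tracked stand/sit/fall state after each frame."""
    states: List[State] = []
    state = State.STAND
    last_upright: Optional[Frame] = None
    for f in frames:
        group = posture_group(f.low_ratio)
        if group is Posture.UPRIGHT:
            state = State.STAND
            last_upright = f
        else:
            speed = 0.0  # no upright reference yet: treat as a slow transition
            if last_upright is not None:
                dt = f.t - last_upright.t
                # Transition speed since the person was last upright.
                speed = (f.low_ratio - last_upright.low_ratio) / dt if dt > 0 else float("inf")
            if group is Posture.LYING and speed >= SPEED_MIN:
                state = State.FALL
            elif state is not State.FALL:
                # A slow transition into a lower posture is treated as sitting down.
                state = State.SIT
            # Once a fall is detected it is kept until the person is upright again.
        states.append(state)
    return states
```

With these illustrative thresholds, a rise in `low_ratio` from 0.15 to 0.85 within 0.2 s is labelled a fall, while a rise from 0.1 to 0.7 over 4 s stays in the sit state.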

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.