Paper:

Effects of a Novel Sympathy-Expression Method on Collaborative Learning Among Junior High School Students and Robots

Felix Jimenez*, Tomohiro Yoshikawa*, Takeshi Furuhashi*, and Masayoshi Kanoh**

*Graduate School of Engineering, Nagoya University

Furo-cho, Chikusa-ku, Nagoya 464-8603, Japan

**School of Engineering, Chukyo University

101-2 Yagoto Honmachi, Showa-ku, Nagoya 466-8666, Japan

In recent years, educational-support robots, which are designed to aid learning, have received significant attention. However, learners tend to lose interest in these robots over time. To address this problem, researchers studying human-robot interaction have developed emotional-expression models by which robots autonomously express emotions. We hypothesize that if an educational-support robot relies on an emotion-expression model alone and expresses emotions without considering the learner, learners will still lose interest in the robot. To facilitate collaborative learning with a robot, it may be effective to program the robot to sympathize with the learner and express the same emotions as the learner. In this study, we propose a sympathy-expression method that enables educational-support robots to sympathize with learners, and we investigate its effects on collaborative learning among junior high school students and robots.

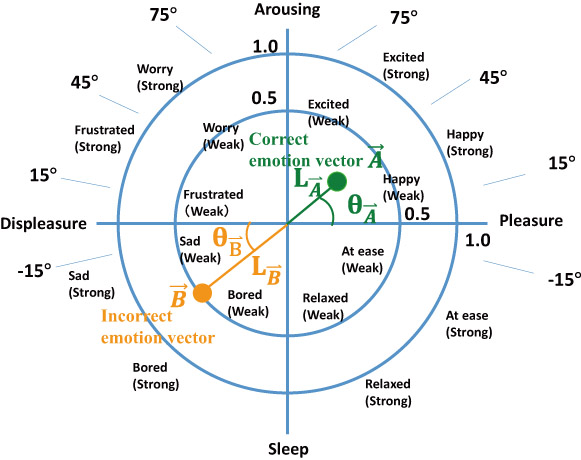

Sympathy-expression method for educational-support robots

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.