Development Report:

Development of a Tomato Volume Estimating Robot that Autonomously Searches an Appropriate Measurement Position – Basic Feasibility Study Using a Tomato Bed Mock-Up –

Rui Fukui, Kenta Kawae, and Shin’ichi Warisawa

Department of Human and Engineered Environmental Studies, Graduate School of Frontier Sciences, The University of Tokyo

5-1-5 Kashiwa-no-ha, Kashiwa-shi, Chiba 277-8563, Japan

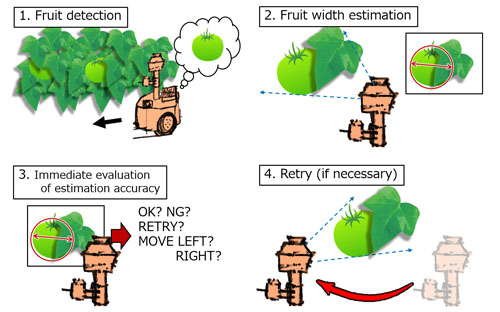

Data mining has recently attracted growing attention in Japanese agriculture. Its purpose is to transform the knowledge and know-how of experienced farmers into an explicit form. In particular, creating a tomato cultivation database requires growth data not only for red mature tomatoes but also for green immature ones. We are developing a tomato volume estimating robot that actively searches for an appropriate measurement position. While patrolling a tomato bed, the robot first detects a tomato using saliency-based image processing. Once a tomato has been detected, a motion stereo camera installed on the robot generates a point cloud, and a clustering process extracts the fruit region. A three-point-algorithm-based ellipse detector then estimates the width of the extracted fruit region. Finally, the estimation result is immediately evaluated using multiple indicators. This immediate evaluation rejects unreliable data and suggests an appropriate position for re-measurement.

Tomato monitoring procedures including immediate evaluation
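The width estimation and immediate evaluation steps can be illustrated with a minimal sketch. It is not the detector used by the robot: it assumes the fruit outline is available as 2D points, substitutes a circle for the ellipse, and uses a single hypothetical indicator (the inlier ratio) in place of the multiple indicators mentioned above. The function names, the tolerance `tol`, and the threshold `min_inlier_ratio` are all assumptions made for illustration.

```python
import math
import random


def circle_from_three_points(p1, p2, p3):
    """Return (cx, cy, r) of the circle through three points, or None if collinear."""
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    d = 2.0 * (x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2))
    if abs(d) < 1e-12:
        return None  # collinear points do not define a circle
    s1, s2, s3 = x1 * x1 + y1 * y1, x2 * x2 + y2 * y2, x3 * x3 + y3 * y3
    cx = (s1 * (y2 - y3) + s2 * (y3 - y1) + s3 * (y1 - y2)) / d
    cy = (s1 * (x3 - x2) + s2 * (x1 - x3) + s3 * (x2 - x1)) / d
    return cx, cy, math.hypot(x1 - cx, y1 - cy)


def estimate_width(points, trials=200, tol=1.0, min_inlier_ratio=0.6, seed=0):
    """Estimate fruit width by repeatedly fitting circles to random point triples.

    The candidate supported by the most points (within `tol`) wins. The inlier
    ratio serves as a hypothetical reliability indicator for rejecting bad data.
    """
    if len(points) < 3:
        return None
    rng = random.Random(seed)
    best, best_inliers = None, 0
    for _ in range(trials):
        circle = circle_from_three_points(*rng.sample(points, 3))
        if circle is None:
            continue
        cx, cy, r = circle
        inliers = sum(
            1 for x, y in points if abs(math.hypot(x - cx, y - cy) - r) <= tol
        )
        if inliers > best_inliers:
            best, best_inliers = circle, inliers
    if best is None:
        return None
    ratio = best_inliers / len(points)
    return {
        "width": 2.0 * best[2],
        "inlier_ratio": ratio,
        "reliable": ratio >= min_inlier_ratio,
    }
```

In this sketch, a result with `reliable` set to `False` would be discarded and the measurement repeated from another position. How the next position is chosen is outside the scope of the example.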


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.