Paper:

Computationally Efficient Mapping for a Mobile Robot with a Downsampling Method for the Iterative Closest Point

Shodai Deguchi and Genya Ishigami

Keio University

3-14-1 Hiyoshi, Kohoku-ku, Yokohama 223-8522, Japan

This paper proposes a computationally efficient method for generating a three-dimensional environment map and estimating the position of a mobile robot. The method assumes that a laser range finder mounted on the robot provides point cloud data of the surrounding environment. It extracts characteristic feature points from the point cloud data using an intensity image captured by the laser range finder. Feature points extracted from two or more sets of point cloud data are then matched by the iterative closest point (ICP) algorithm, which merges the sets into a large map of the environment and estimates the robot's location within that map. By downsampling the points used for ICP, the method reduces the computational cost while maintaining mapping accuracy. An experimental demonstration using a mobile robot test bed confirms the usefulness of the proposed method.
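The registration step described in the abstract can be illustrated with a minimal point-to-point ICP sketch in Python with NumPy. This is not the authors' implementation: the function names (`best_rigid_transform`, `icp`), the brute-force nearest-neighbour search, the SVD-based alignment, and the `keep` index array that stands in for the downsampled feature points are all assumptions made for illustration.

```python
import numpy as np

def best_rigid_transform(src, dst):
    """Least-squares R, t such that R @ src[i] + t approximates dst[i] (SVD method)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)        # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    D = np.eye(3)
    if np.linalg.det(Vt.T @ U.T) < 0:          # guard against a reflection
        D[2, 2] = -1.0
    R = Vt.T @ D @ U.T
    return R, dst_c - R @ src_c

def icp(src, dst, iterations=50, tol=1e-12):
    """Align src (N x 3) to dst (M x 3); returns the accumulated R and t."""
    R_total, t_total = np.eye(3), np.zeros(3)
    cur = src.copy()
    prev_err = np.inf
    for _ in range(iterations):
        # Brute-force closest point in dst for every current source point.
        d2 = ((cur[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        idx = d2.argmin(axis=1)
        err = d2[np.arange(len(cur)), idx].mean()
        R, t = best_rigid_transform(cur, dst[idx])
        cur = cur @ R.T + t
        # Compose the incremental transform with the running total.
        R_total, t_total = R @ R_total, R @ t_total + t
        if abs(prev_err - err) < tol:          # stop once the error stops changing
            break
        prev_err = err
    return R_total, t_total

# Synthetic example: scan_b is scan_a after a small, known rigid motion.
rng = np.random.default_rng(0)
scan_a = rng.uniform(-1.0, 1.0, size=(200, 3))
angle = 0.03
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0,            0.0,           1.0]])
t_true = np.array([0.02, -0.01, 0.015])
scan_b = scan_a @ R_true.T + t_true

# Hypothetical downsampling: every 4th point stands in for the feature points.
keep = np.arange(0, len(scan_a), 4)
R_est, t_est = icp(scan_a[keep], scan_b)
print(np.round(R_est, 4))
print(np.round(t_est, 4))
```

The closest-point search dominates the cost of each iteration and scales with the number of source points, which is why passing only a subset of points to `icp` reduces the computation. The uniform stride used for `keep` is only a placeholder for a selection driven by the intensity image.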

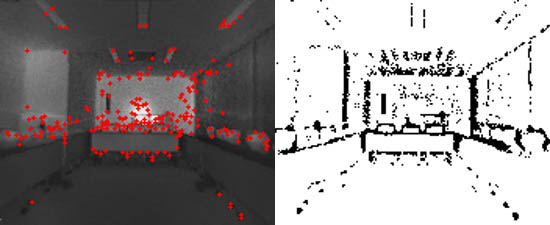

Extracting feature points for ICP processing from intensity images
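One way such a selection could work is sketched below in Python with NumPy. The abstract does not state which detector is used, so the minimum-eigenvalue corner response here is an assumption, as are the function names and the premise that the point cloud is organized as an H x W x 3 array aligned pixel-for-pixel with the intensity image.

```python
import numpy as np

def box_sum3(a):
    """Sum over each pixel's 3x3 neighbourhood (zero padding at the border)."""
    p = np.pad(a, 1)
    h, w = a.shape
    return sum(p[i:i + h, j:j + w] for i in range(3) for j in range(3))

def corner_response(intensity):
    """Smaller eigenvalue of the 2x2 gradient structure tensor at every pixel."""
    gy, gx = np.gradient(intensity.astype(float))
    sxx, syy, sxy = box_sum3(gx * gx), box_sum3(gy * gy), box_sum3(gx * gy)
    return 0.5 * ((sxx + syy) - np.sqrt((sxx - syy) ** 2 + 4.0 * sxy ** 2))

def select_feature_points(intensity, cloud, k):
    """Return the 3D points and (row, col) pixels of the k strongest responses."""
    resp = corner_response(intensity)
    flat = np.argsort(resp, axis=None)[::-1][:k]
    rows, cols = np.unravel_index(flat, resp.shape)
    return cloud[rows, cols], np.stack([rows, cols], axis=1)

# Synthetic example: a bright square on a dark background.
intensity = np.zeros((20, 20))
intensity[5:15, 5:15] = 1.0
# Hypothetical organized cloud: each pixel stores (row, col, 0) as its 3D point.
rr, cc = np.meshgrid(np.arange(20), np.arange(20), indexing="ij")
cloud = np.stack([rr, cc, np.zeros_like(rr)], axis=2).astype(float)

points, pixels = select_feature_points(intensity, cloud, k=4)
print(pixels)
```

Because the response is zero on flat regions and along straight edges, the selected pixels cluster around the corners of the square, and only the 3D points at those pixels would be handed to ICP.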

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
