Paper:

Using Difference Images to Detect Pedestrian Signal Changes

Tetsuo Tomizawa and Ryunosuke Moriai

National Defense Academy of Japan

1-10-20 Hashirimizu, Yokosuka, Kanagawa 239-8686, Japan

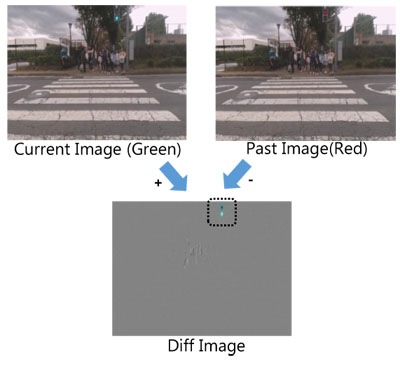

This paper describes a method that uses camera images to detect changes in the display state of pedestrian traffic signals. Much previous research on signal detection estimates the signal state from the color or shape of image regions, or by machine learning. However, these methods are known to be strongly affected by occlusion and changes in illumination. We propose a method that uses image sequences captured over time to detect the change in appearance that occurs when a signal switches. With this method, the position and state of the traffic light can be detected accurately as long as it appears in the image, even if its relative position or the ambient lighting changes. We first describe how pedestrian signals appear in difference images and propose an algorithm for detecting signal changes. We then confirm the effectiveness of the proposed method through verification tests.

Actual traffic light images and difference image
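
The general idea of looking for a signal change in a difference image can be illustrated with a minimal sketch. This is not the algorithm proposed in the paper, whose details are not given in the abstract. It assumes two aligned RGB frames, a red lamp and a green lamp that occupy separate image regions, and hand-picked values for the hypothetical parameters `threshold` and `min_pixels`. The function name `detect_signal_change` is likewise an illustrative choice.

```python
import numpy as np


def detect_signal_change(prev_frame, curr_frame, threshold=60, min_pixels=20):
    """Illustrative sketch only (not the paper's algorithm).

    Takes two RGB frames of identical shape (H x W x 3, uint8) and returns
    (change, centroid), where change is "red_to_green", "green_to_red", or
    None, and centroid is the (row, col) of the lamp that switched on.
    """
    # Signed difference image; int16 avoids uint8 wrap-around on subtraction.
    diff = curr_frame.astype(np.int16) - prev_frame.astype(np.int16)
    d_red, d_green = diff[..., 0], diff[..., 1]
    half = threshold // 2

    # A lamp switching on: its dominant channel rises, the other barely moves.
    green_on = (d_green > threshold) & (d_red < half)
    red_on = (d_red > threshold) & (d_green < half)
    # A lamp switching off: its dominant channel drops sharply.
    red_off = d_red < -threshold
    green_off = d_green < -threshold

    # Assumption: a real change shows both an "on" and an "off" region.
    if green_on.sum() >= min_pixels and red_off.sum() >= min_pixels:
        return "red_to_green", tuple(np.argwhere(green_on).mean(axis=0))
    if red_on.sum() >= min_pixels and green_off.sum() >= min_pixels:
        return "green_to_red", tuple(np.argwhere(red_on).mean(axis=0))
    return None, None


# Synthetic example: the red lamp (upper block) goes dark and the
# green lamp (lower block) lights up between the two frames.
red_frame = np.zeros((40, 40, 3), dtype=np.uint8)
red_frame[5:15, 15:25] = (200, 0, 0)
green_frame = np.zeros((40, 40, 3), dtype=np.uint8)
green_frame[20:30, 15:25] = (0, 200, 100)

change, centroid = detect_signal_change(red_frame, green_frame)
print(change, centroid)
```

Because only the difference between frames is examined, static background that merely resembles a lit lamp cancels out. A practical system would also need to handle camera motion and gradual illumination drift, which this sketch ignores.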

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
