Paper:

Development of Autonomous Navigation System Using 3D Map with Geometric and Semantic Information

Yoshihiro Aotani, Takashi Ienaga, Noriaki Machinaka, Yudai Sadakuni, Ryota Yamazaki, Yuki Hosoda, Ryota Sawahashi, and Yoji Kuroda

Meiji University

1-1-1 Higashimita, Tama-ku, Kawasaki, Kanagawa 214-8571, Japan

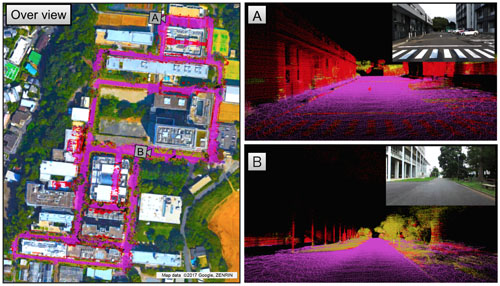

This paper presents an autonomous navigation system. Our system is based on an accurate 3D map, which includes “geometric information” (e.g., curbs, walls, street trees) and “semantic information” (e.g., sidewalks, roadways, crosswalks) extracted by environmental recognition. By using the semantic map, the robot can identify areas suitable for travel and keep away from undesired places. Furthermore, by comparing the map with real-time 3D geometric information from LIDAR, we estimate the robot's position. To show the effectiveness of our system, we conduct a 3D semantic map construction experiment and a driving test. The experimental results show that the proposed system enables accurate and highly reproducible localization and stable autonomous mobility.

3D map with geometric and semantic information
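
As an illustration of how semantic labels could be used to select a suitable travel area, the following is a minimal sketch and not the implementation used in this paper. It assumes the semantic map has been flattened into a 2D grid of label strings. The names `LABEL_COST` and `traversable_cells`, the cost values, and the `max_cost` threshold are all hypothetical.

```python
# Hypothetical per-label traversal costs; these values are assumptions
# for illustration and are not taken from the paper.
LABEL_COST = {
    "sidewalk": 1.0,
    "crosswalk": 2.0,
    "roadway": float("inf"),  # treated here as an undesired place
}


def traversable_cells(semantic_grid, max_cost=5.0):
    """Return (row, col) indices of cells whose label cost is <= max_cost."""
    cells = []
    for r, row in enumerate(semantic_grid):
        for c, label in enumerate(row):
            # Labels missing from LABEL_COST are conservatively rejected.
            if LABEL_COST.get(label, float("inf")) <= max_cost:
                cells.append((r, c))
    return cells


# Toy 3x3 semantic grid (assumed layout, for demonstration only).
grid = [
    ["roadway", "roadway", "roadway"],
    ["sidewalk", "crosswalk", "sidewalk"],
    ["sidewalk", "unknown", "sidewalk"],
]

print(traversable_cells(grid))
```

In this sketch, roadway cells and cells with unrecognized labels are excluded, so a planner restricted to the returned cells would stay on the sidewalk and crosswalk. A real system would more likely keep the costs as continuous weights for the path planner rather than applying a hard threshold.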

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.