Paper:

Swarm of Sound-to-Light Conversion Devices to Monitor Acoustic Communication Among Small Nocturnal Animals

Takeshi Mizumoto*1, Ikkyu Aihara*2, Takuma Otsuka*3, Hiromitsu Awano*4, and Hiroshi G. Okuno*5

*1Honda Research Institute Japan Co., Ltd.

8-1 Honcho, Wako-shi, Saitama 351-0188, Japan

*2Graduate School of Systems and Information Engineering, Tsukuba University

1-1-1 Tennodai, Tsukuba, Ibaraki 305-8573, Japan

*3NTT Communication Science Laboratories, NTT Corporation

2-4 Hikaridai, Seikacho, Kyoto 619-0237, Japan

*4VLSI Design and Education Center, The University of Tokyo

7-3-1 Hongo, Bunkyo-ku, Tokyo 113-8656, Japan

*5Faculty of Science and Engineering, Waseda University

2-4-12 Okubo, Shinjuku, Tokyo 169-0072, Japan

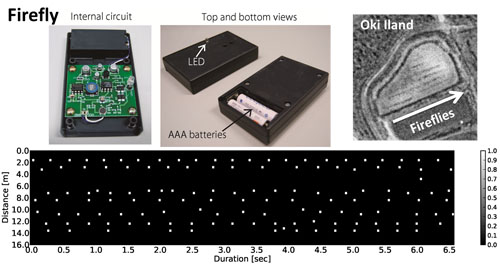

Sound-to-light conversion devices (Fireflies) deployed on Oki Island, and their lighting pattern in response to frog calls


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
