Paper:

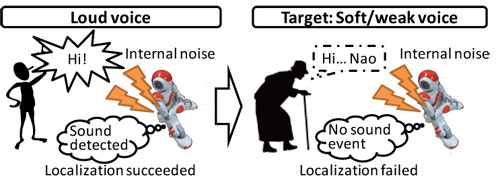

Noise-Robust MUSIC-Based Sound Source Localization Using Steering Vector Transformation for Small Humanoids

Ryu Takeda and Kazunori Komatani

The Institute of Scientific and Industrial Research, Osaka University

8-1 Mihogaoka, Ibaraki, Osaka 567-0047, Japan

Sound source localization and its problem

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
