Paper:

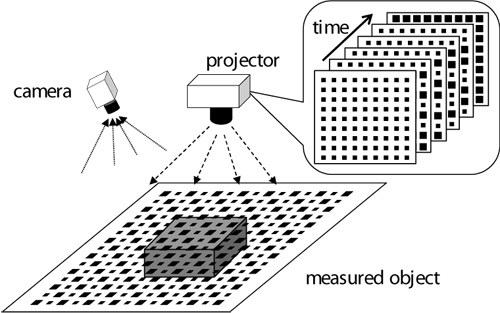

Blink-Spot Projection Method for Fast Three-Dimensional Shape Measurement

Jun Chen, Qingyi Gu, Tadayoshi Aoyama, Takeshi Takaki, and Idaku Ishii

Hiroshima University

1-4-1 Kagamiyama, Higashi-Hiroshima, Hiroshima 739-8527, Japan

Blink-spot projection method

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.