Paper:

Visual Navigation of a Wheeled Mobile Robot Using Front Image in Semi-Structured Environment

Keita Kurashiki, Mareus Aguilar, and Sakon Soontornvanichkit

Graduate School of Engineering, Osaka University

2-1 Yamada-oka, Suita, Osaka 565-0871, Japan

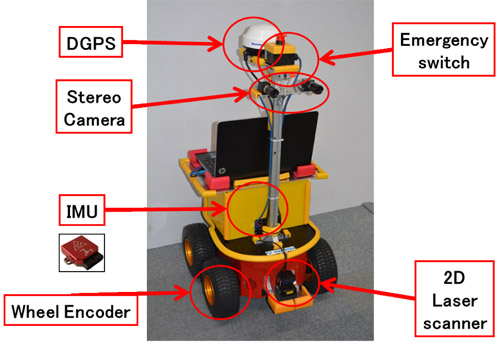

Mobile robot with a stereo camera

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
