Research Paper:

Online Topological Mapping on a Quadcopter with Fast Growing Neural Gas

Alfin Junaedy†, Hiroyuki Masuta, Yotaro Fuse, Kei Sawai, Tatsuo Motoyoshi, and Noboru Takagi

Department of Intelligent Robotics, Toyama Prefectural University

5180 Kurokawa, Imizu, Toyama 939-0398, Japan

†Corresponding author

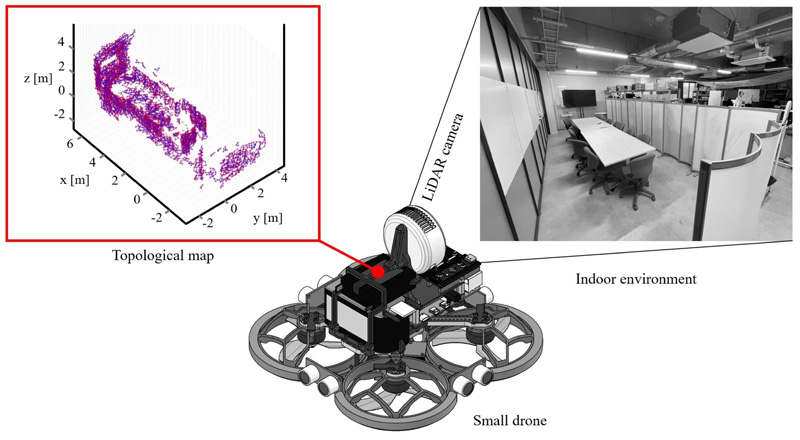

This paper presents an online topological mapping method for a quadcopter based on fast-growing neural gas. Perceiving the real world in 3D space has become increasingly important, and robotics is no exception. Quadcopters are the most common type of robot operating in 3D space, and the ability to perceive that space is a prerequisite for real-time autonomous control. Dense maps are impractical because of their computational cost, while sparse maps are unsuitable because they lack sufficient information. Topological maps offer a balance between computational cost and accuracy. One of the best-known unsupervised learning methods for topological mapping is growing neural gas (GNG). However, its learning speed is difficult to increase because the conventional algorithm is iterative, updating the network one input sample at a time. We therefore propose a new topological mapping method, called simplified multi-scale batch-learning GNG, which applies a mini-batch strategy to the learning process. The proposed method has been implemented on a quadcopter for indoor mapping. In addition, the topological maps are combined with the quadcopter's tracking data to generate a global map. The combination is simple yet robust and is based on rotation and translation. As a result, the quadcopter runs the algorithms in real time while maintaining a processing rate above 30 fps.
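To illustrate the rotation-and-translation step mentioned above, the following is a minimal sketch of how node positions from a local topological map could be placed into a global frame using a tracked pose. It is not the authors' implementation: the function names (`yaw_rotation`, `to_global`, `merge_into_global`), the representation of nodes as `(x, y, z)` tuples, and the yaw-only example pose are all assumptions made for illustration. The only fact used is the standard rigid transform p_global = R p_local + t.

```python
import math


def yaw_rotation(yaw):
    """Return a 3x3 rotation matrix for a rotation of `yaw` radians about z.

    Hypothetical helper: a real pose from a tracker would normally provide
    a full 3D rotation, not only yaw.
    """
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, 0.0],
            [s, c, 0.0],
            [0.0, 0.0, 1.0]]


def to_global(nodes, rotation, translation):
    """Apply p_global = R * p_local + t to every node position.

    nodes:       iterable of (x, y, z) positions in the local (camera) frame
    rotation:    3x3 nested list, orientation of the local frame in the global frame
    translation: (x, y, z) position of the local frame origin in the global frame
    """
    result = []
    for p in nodes:
        result.append(tuple(
            sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
            for i in range(3)
        ))
    return result


def merge_into_global(global_map, local_nodes, rotation, translation):
    """Append the transformed local nodes to the global node list (assumed layout)."""
    global_map.extend(to_global(local_nodes, rotation, translation))
    return global_map


# Usage: one local node 1 m ahead, with the quadcopter yawed 90 degrees
# and hovering 1 m above the global origin.
global_map = []
merge_into_global(global_map, [(1.0, 0.0, 0.0)],
                  yaw_rotation(math.pi / 2), (0.0, 0.0, 1.0))
```

This sketch only transforms node positions; how edges are carried over and how overlapping nodes from successive frames are fused is left open here, since the abstract does not specify it.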

Topological mapping on a quadcopter


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
