Research Paper:

A Lightweight and Accurate Method for Detecting Traffic Flow in Real Time

Zewen Du, Ying Jin, Hongbin Ma†, and Ping Liu

School of Automation, Beijing Institute of Technology

No.5 South Street, Zhongguancun, Haidian District, Beijing 100081, China

†Corresponding author

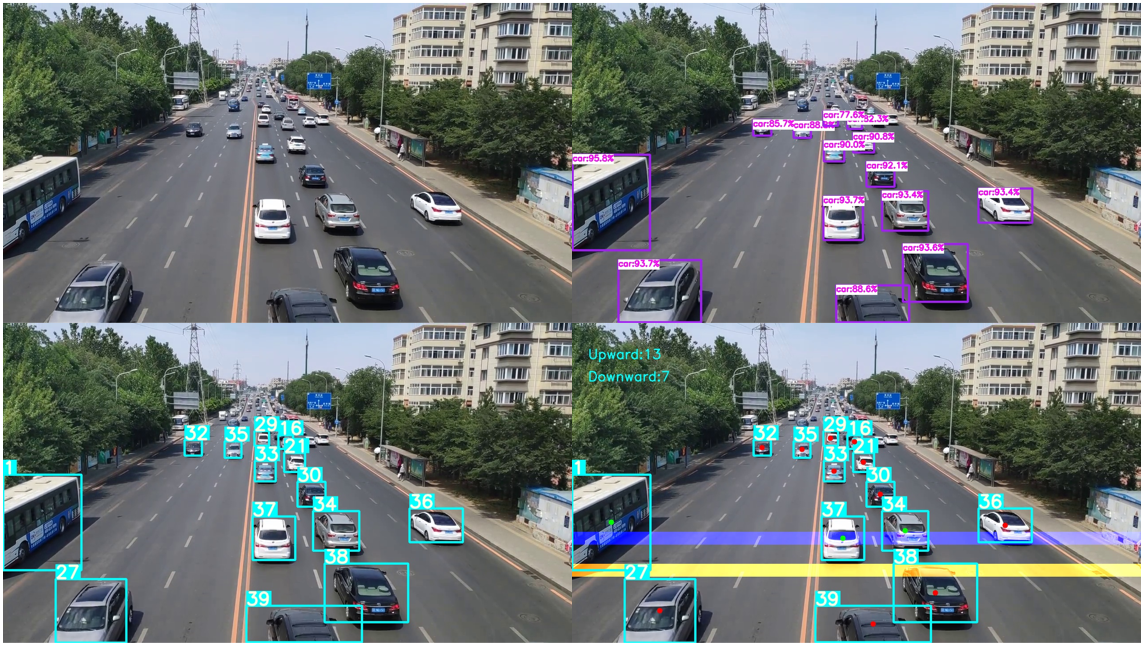

Traffic flow detection provides important information for intelligent transportation systems. However, vision-based traffic flow detection, currently the mainstream research direction, still faces a trade-off between accuracy and speed. In addition, modular system design is needed to keep such systems maintainable and flexible. We therefore propose a modular design that divides the task into three parts: vehicle detection, vehicle tracking, and vehicle counting. Because vehicle detection strongly influences both the accuracy and the speed of the whole system, we introduce a lightweight network called feature adaptive fusion-YOLOX, which is based on YOLOX. Specifically, to eliminate the redundant information introduced by bilinear interpolation, we propose a feature-level upsampling method called channel to spatial, which performs upsampling without additional calculations. Based on this module, we design a lightweight, multi-scale feature fusion module, the feature adaptive fusion pyramid network (FAFPN). Compared with PA-FPN, FAFPN reduces FLOPs by 61% and the number of neck parameters by 50% while maintaining comparable or even slightly improved performance. In experiments on a series of traffic surveillance videos covering different weather conditions and camera perspectives, the proposed traffic flow detection method achieves high accuracy and adaptability and runs in real time.
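The abstract does not spell out the channel to spatial operation. As a rough illustration only, the sketch below assumes it resembles the well-known depth-to-space (pixel shuffle) rearrangement, in which groups of channels are moved into spatial positions so that resolution increases by pure indexing, with no multiplications or additions. The function name `channel_to_spatial`, the scale factor `r`, and the channel ordering are assumptions made for this example, not details taken from the paper.

```python
import numpy as np


def channel_to_spatial(x: np.ndarray, r: int = 2) -> np.ndarray:
    """Rearrange a (C*r*r, H, W) feature map into (C, H*r, W*r).

    Hypothetical sketch: follows the depth-to-space convention, which is
    an assumption about how the paper's module might behave.
    """
    c_in, h, w = x.shape
    if c_in % (r * r) != 0:
        raise ValueError("channel count must be divisible by r * r")
    c_out = c_in // (r * r)
    # Split the channel axis into (c_out, r, r).
    x = x.reshape(c_out, r, r, h, w)
    # Interleave the two r-axes with the spatial axes: (c_out, h, r, w, r).
    x = x.transpose(0, 3, 1, 4, 2)
    # Merge (h, r) and (w, r) into the upsampled spatial axes.
    return x.reshape(c_out, h * r, w * r)


if __name__ == "__main__":
    feat = np.arange(4 * 2 * 2, dtype=np.float32).reshape(4, 2, 2)
    up = channel_to_spatial(feat, r=2)
    print(feat.shape, "->", up.shape)
```

Under this assumption, every output value is copied from the input, so the operation costs no arithmetic, whereas bilinear interpolation computes each output pixel as a weighted sum of its neighbours.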

Traffic flow detection based on deep learning.

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
