Research Paper:

Trash Detection Algorithm Suitable for Mobile Robots Using Improved YOLO

Ryotaro Harada, Tadahiro Oyama†, Kenji Fujimoto, Toshihiko Shimizu, Masayoshi Ozawa, Julien Samuel Amar, and Masahiko Sakai

Kobe City College of Technology

8-3 Gakuen-higashimachi, Nishi-ku, Kobe, Hyogo 651-2194, Japan

†Corresponding author

The illegal dumping of aluminum and plastic in cities and marine areas harms ecosystems and contributes to increased environmental pollution. Although volunteer trash pickup activities have increased in recent years, they require significant effort, time, and money. Therefore, we propose an automated trash pickup robot that incorporates autonomous movement and trash pickup arms. Although these functions have been actively developed, relatively little research has focused on trash detection. We therefore developed a trash detection function that uses deep learning models to improve accuracy. First, we created a new trash dataset covering four types of trash that are illegally dumped in large volumes (cans, plastic bottles, cardboard, and cigarette butts). Next, we developed a new you only look once (YOLO)-based model with fewer parameters and computations. We trained the model on the created dataset and on a marine trash dataset from previous research. As a result, the proposed models achieve the same detection accuracy as the existing models on both datasets with fewer parameters and computations. Furthermore, the proposed models increase the frame rate on edge devices.
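To make "fewer parameters and computations" concrete, the following minimal sketch counts the weights and multiply-accumulate operations (MACs) of one convolutional layer in two forms. It is an illustration under stated assumptions, not the proposed model: the abstract does not say which layers were changed, so the depthwise separable replacement, the function names, and the layer shape are all hypothetical.

```python
def conv_cost(c_in, c_out, k, h_out, w_out):
    """Weights and MACs of a standard k x k convolution (bias ignored)."""
    params = c_in * c_out * k * k
    # Every output position applies the full set of weights once.
    macs = params * h_out * w_out
    return params, macs


def depthwise_separable_cost(c_in, c_out, k, h_out, w_out):
    """Weights and MACs of a k x k depthwise convolution followed by a
    1 x 1 pointwise convolution (bias ignored)."""
    depthwise = c_in * k * k   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    params = depthwise + pointwise
    macs = params * h_out * w_out
    return params, macs


if __name__ == "__main__":
    # Hypothetical layer shape, chosen only for illustration.
    shape = dict(c_in=128, c_out=256, k=3, h_out=40, w_out=40)
    std_params, std_macs = conv_cost(**shape)
    sep_params, sep_macs = depthwise_separable_cost(**shape)
    print(f"standard:  {std_params} params, {std_macs} MACs")
    print(f"separable: {sep_params} params, {sep_macs} MACs")
    print(f"reduction: {std_params / sep_params:.1f}x")
```

Counts like these describe model size and arithmetic only. The frame rate on an edge device also depends on memory access and on how well the runtime supports each layer type, so it has to be measured on the target hardware.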

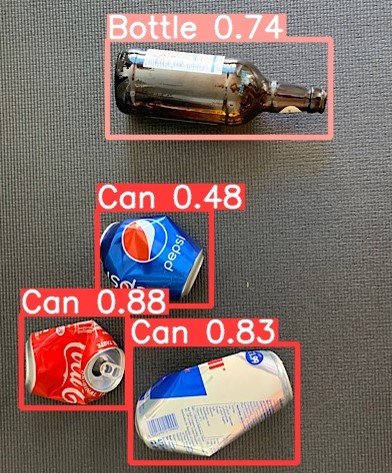

Result of trash detection

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.