Research Paper:

Elastic Adaptively Parametric Compounded Units for Convolutional Neural Network

Changfan Zhang*, Yifu Xu*, and Zhenwen Sheng**,†

*Hunan University of Technology

88 Taishan Xi Road, Tianyuan District, Zhuzhou, Hunan 412007, China

**College of Engineering, Shandong Xiehe University

6277 Jiqing Road, Licheng District, Jinan, Shandong 250109, China

†Corresponding author

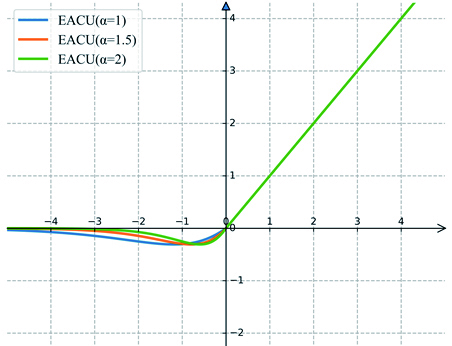

The activation function introduces nonlinearity into convolutional neural networks and has greatly promoted progress in computer vision tasks. This paper proposes elastic adaptively parametric compounded units (EACU) to improve the performance of convolutional neural networks for image recognition. The proposed activation function combines the structural advantages of two mainstream activation functions as its fundamental architecture. The SENet model is embedded in the proposed activation function to adaptively recalibrate the feature mapping weight of each channel, thereby enhancing the fitting capability of the activation function. In addition, the function has an elastic slope in the positive input region, obtained by simulating random noise, which improves the generalization capability of neural networks. A special protection mechanism is adopted to prevent the generated noise from producing overly large variations during training. To verify the effectiveness of the activation function, comparative experiments are conducted on the CIFAR-10 and CIFAR-100 image datasets, with each activation function evaluated in exactly the same network model. The experimental results show that the proposed activation function outperforms the other functions compared.

EACU is a new activation function
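The three ingredients named in the abstract (a channel-wise SENet gate, an elastic positive slope driven by random noise, and a protection mechanism that bounds the noise) can be illustrated with a minimal NumPy sketch. This is not the authors' formulation: the abstract does not specify the two base functions, the noise distribution, or the form of the protection mechanism. The sketch assumes a linear positive branch, an ELU-shaped negative branch, Gaussian noise, and clipping as the protection step. The names `se_gate`, `eacu_sketch`, `sigma`, and `bound` are hypothetical.

```python
import numpy as np

def se_gate(x, w1, b1, w2, b2):
    """Squeeze-and-excitation style gate: one coefficient in (0, 1) per channel.

    x has shape (N, C, H, W); w1 is (C, C // r) and w2 is (C // r, C).
    """
    squeezed = x.mean(axis=(2, 3))                    # squeeze: global average pool -> (N, C)
    hidden = np.maximum(squeezed @ w1 + b1, 0.0)      # excitation, first FC layer + ReLU
    return 1.0 / (1.0 + np.exp(-(hidden @ w2 + b2)))  # second FC layer + sigmoid -> (N, C)

def eacu_sketch(x, params, rng=None, training=False, sigma=0.1, bound=0.5):
    """Illustrative compounded activation (assumed form, not the paper's equation)."""
    w1, b1, w2, b2 = params
    # Adaptive per-channel coefficient for the negative branch (assumption).
    alpha = se_gate(x, w1, b1, w2, b2)[:, :, None, None]
    if training:
        # Elastic slope: 1 + Gaussian noise, drawn per sample and channel (assumption).
        noise = rng.normal(0.0, sigma, size=(x.shape[0], x.shape[1], 1, 1))
        # "Protection mechanism" modeled here as clipping the noise (assumption).
        slope = 1.0 + np.clip(noise, -bound, bound)
    else:
        slope = 1.0  # deterministic unit slope at inference
    positive = slope * np.maximum(x, 0.0)
    negative = alpha * (np.exp(np.minimum(x, 0.0)) - 1.0)
    return positive + negative
```

With `training=False` the sketch reduces to an ELU-like function whose negative saturation level is set per channel by the gate. With `training=True` every positive activation is scaled by a slope confined to `[1 - bound, 1 + bound]`, which is the role the abstract assigns to the protection mechanism.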

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
