Research Paper:

Exploring Self-Attention for Visual Intersection Classification

Haruki Nakata, Kanji Tanaka, and Koji Takeda

Graduate School of Engineering, University of Fukui

3-9-1 Bunkyo, Fukui 910-8507, Japan

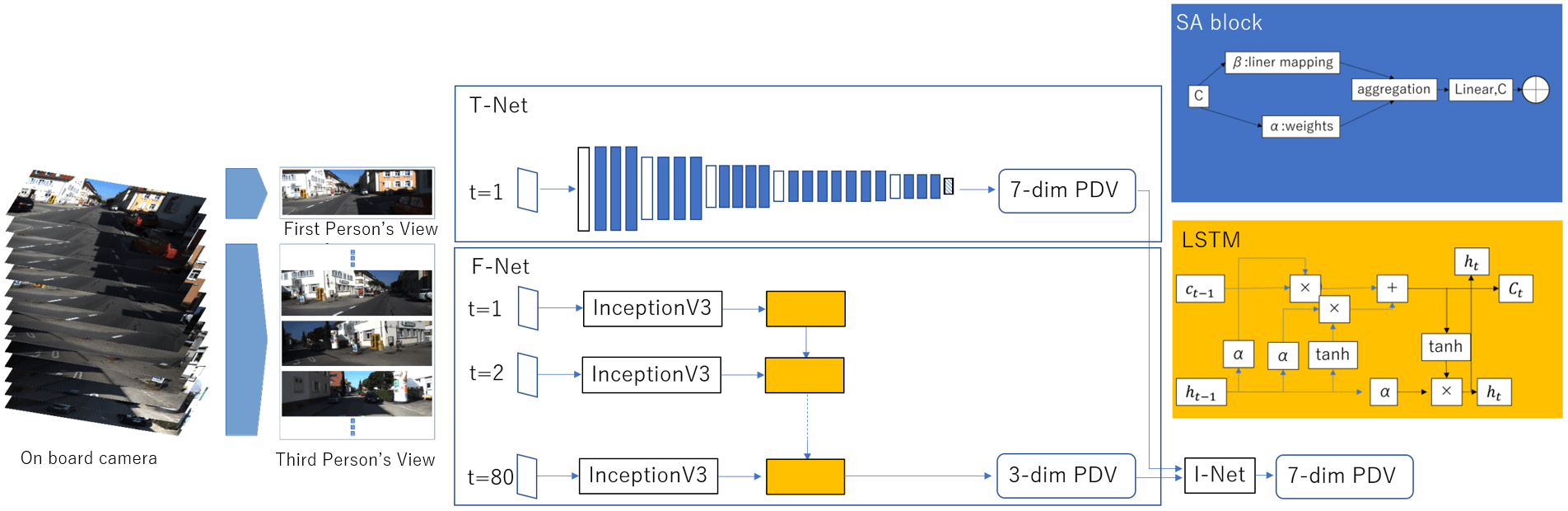

Self-attention has recently emerged as a technique for capturing non-local context in robot vision. This study introduces a self-attention mechanism into an intersection recognition system to capture the non-local context of a scene. This mechanism suits intersection classification because most local patterns (e.g., road edges, buildings, and sky) are similar across intersection classes; thus, non-local context (e.g., the angle between two diagonal corners around an intersection) is expected to be more discriminative. This study makes three major contributions to the existing literature. First, we propose a self-attention-based approach for intersection classification. Second, we integrate the self-attention-based classifier into a unified intersection classification framework to improve overall recognition performance. Finally, experiments using the public KITTI dataset show that the proposed self-attention-based system outperforms conventional recognition based on local patterns and recognition based on convolution operations.
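The abstract argues that the evidence for an intersection class should come from distant image regions (e.g., two opposite corners), not only from a local neighborhood. As an illustration only, the following is a minimal sketch of generic single-head scaled dot-product self-attention, the form popularized by Transformers, written in plain Python so it runs without extra packages. It is not the authors' implementation, and their attention variant may differ. The function names, the N x d layout of region features, and the projection matrices `w_q`, `w_k`, and `w_v` are hypothetical assumptions.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b (rows of a times columns of b)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    """Swap rows and columns."""
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Matrix:
    """Single-head scaled dot-product self-attention (illustrative sketch).

    x   : N x d feature vectors, one per image region (hypothetical layout,
          e.g., a flattened CNN feature map), so every region can attend to
          every other region regardless of spatial distance.
    w_* : d x d_k projection matrices (hypothetical; learned in practice).
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(w_k[0]))       # sqrt(d_k) keeps scores well-scaled
    scores = matmul(q, transpose(k))     # N x N pairwise region affinities
    weights = [softmax([s / scale for s in row]) for row in scores]
    return matmul(weights, v)            # each output mixes values from all regions


if __name__ == "__main__":
    # Four hypothetical 2-D region features with identity projections.
    regions = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]
    eye = [[1.0, 0.0], [0.0, 1.0]]
    out = self_attention(regions, eye, eye, eye)
    print(len(out), len(out[0]))  # 4 2
```

Because the score matrix is N x N, every output row can draw on every region. A convolution, by contrast, has a receptive field bounded by its kernel size and depth. The price of this global view is cost quadratic in the number of regions N.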

Self-attention for enhancing third-person views


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
