Research Paper:

Fused Architecture with Enhanced Bag of Visual Words for Efficient Drowsiness Detection

Vineetha Vijayan

and K. P. Pushpalatha

and K. P. Pushpalatha

Mahatma Gandhi University

Priyadarsini Hills, Kottayam, Kerala 686560, India

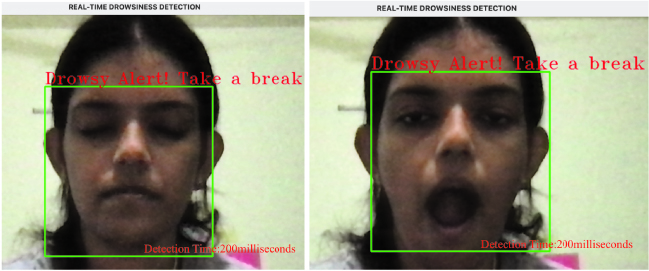

Drowsy driving is more hazardous than reckless driving. This study concentrates on capturing the behavioral features of drowsiness from facial images of a driver. The methodology considers scale invariant feature transform matched with the fast library for approximate nearest neighbors for low-level drowsy features extraction. These features are fused with the high-level features extracted from the convolutional layers of a convolutional neural network (CNN). The convolution operation incorporates a model parallelization technique to increase the efficiency of the training and improve the feature identification. Further classification is performed by considering the occurrences of visual words using the softmax layers of the CNN. In contrast to existing state-of-the-art models which require a few seconds to detect drowsiness, this model detects drowsiness in milliseconds. With the model parallelization approach, this model exhibits a high accuracy rate of 83.8% relative to normal CNNs.

Realtime drowsiness detection

- [1] V. Vineetha and K. P. Pushpalatha, “FLANN based matching with SIFT descriptors for drowsy features extraction,” 5th Int. Conf. on Image Information Processing (ICIIP), pp. 600-605, 2019. https://doi.org/10.1109/ICIIP47207.2019.8985924

- [2] A. Krizhevsky, I. Sutskever, and G. E. Hinton, “ImageNet classification with deep convolutional neural networks,” Advances in Neural Information Processing Systems, Vol.25, pp. 1097-1105, 2012.

- [3] S. J. Pan and Q. Yang, “A survey on transfer learning,” IEEE Trans. on Knowledge and Data Engineering, Vol.22, No.10, pp. 1345-1359, 2009. https://doi.org/10.1109/TKDE.2009.191

- [4] V. Maeda-Gutiérrez, C. E. Galvan-Tejada, L. A. Zanella-Calzada, J. M. Celaya-Padilla, J. I. Galván-Tejada, H. Gamboa-Rosales, H. Luna-Garcia, R. Magallanes-Quintanar, C. A. Guerrero Mendez, and C. A. Olvera-Olvera, “Comparison of convolutional neural network architectures for classification of tomato plant diseases,” Applied Sciences, Vol.10, No.4, Article No.1245, 2020. https://doi.org/10.3390/app10041245

- [5] A. Bosch, X. Muñoz, and R. Marti, “Which is the best way to organize/classify images by content?,” Image and Vision Computing, Vol.25, No.6, pp. 778-791, 2007. https://doi.org/10.1016/j.imavis.2006.07.015

- [6] L. Fei-Fei and P. Perona, “A Bayesian hierarchical model for learning natural scene categories,” 2005 IEEE Computer Society Conf. on Computer Vision and Pattern Recognition (CVPR’05), Vol.2, pp. 524-531, 2005. https://doi.org/10.1109/CVPR.2005.16

- [7] W. Rawat and Z. Wang, “Deep convolutional neural networks for image classification: A comprehensive review,” Neural Computation, Vol.29, No.9, pp. 2352-2449, 2017. https://doi.org/10.1162/neco_a_00990

- [8] L. Zheng, Y. Yang, and Q. Tian, “SIFT meets CNN: A decade survey of instance retrieval,” IEEE Trans. on Pattern Analysis and Machine Intelligence, Vol.40, No.5, pp. 1224-1244, 2018. https://doi.org/10.1109/TPAMI.2017.2709749

- [9] A. Babenko and V. Lempitsky, “Aggregating deep convolutional features for image retrieval,” arXiv:1510.07493, 2015.

- [10] H. Jégou, M. Douze, C. Schmid, and P. Pérez, “Aggregating local descriptors into a compact image representation,” IEEE Computer Society Conf. on CVPR, pp. 3304-3311, 2010. https://doi.org/10.1109/CVPR.2010.5540039

- [11] F. Perronnin, Y. Liu, J. Sánchez, and H. Poirier, “Large-scale image retrieval with compressed Fisher vectors,” IEEE Computer Society Conf. on CVPR, pp. 3384-3391, 2010. https://doi.org/10.1109/CVPR.2010.5540009

- [12] K. Yan, Y. Wang, D. Liang, T. Huang, and Y. Tian, “CNN vs. SIFT for image retrieval: Alternative or complementary?,” Proc. of the 24th ACM Int. Conf. on Multimedia, pp. 407-411, 2016. https://doi.org/10.1145/2964284.2967252

- [13] T. Connie, M. Al-Shabi, W. P. Cheah, and M. Goh, “Facial expression recognition using a hybrid CNN-SIFT aggregator,” Int. Workshop on Multi-Disciplinary Trends in Artificial Intelligence, pp. 139-149, 2017. https://doi.org/10.1007/978-3-319-69456-6_12

- [14] X.-S. Wei, J.-H. Luo, J. Wu, and Z.-H. Zhou, “Selective convolutional descriptor aggregation for fine-grained image retrieval,” IEEE Trans. on Image Processing, Vol.26, No.6, pp. 2868-2881, 2017. https://doi.org/10.1109/TIP.2017.2688133

- [15] S. Huang and H.-M. Hang, “Multi-query image retrieval using CNN and SIFT features,” Asia-Pacific Signal and Information Processing Association Annual Summit and Conf. (APSIPA ASC), pp. 1026-1034, 2017. https://doi.org/10.1109/APSIPA.2017.8282180

- [16] S. S. Shanta, S. T. Anwar, and M. R. Kabir, “Bangla sign language detection using SIFT and CNN,” 9th Int. Conf. on Computing, Communication and Networking Technologies (ICCCNT), 2018. https://doi.org/10.1109/ICCCNT.2018.8493915

- [17] A. Kumar, N. Jain, C. S. Singh, and S. Tripathi, “Exploiting SIFT descriptor for rotation invariant convolutional neural network,” 15th IEEE India Council Int. Conf. (INDICON), 2018. https://doi.org/10.1109/INDICON45594.2018.8987153

- [18] W. Chen, Q. Sun, J. Wang, J.-J. Dong, and C. Xu, “A novel model based on AdaBoost and deep CNN for vehicle classification,” IEEE Access, Vol.6, pp. 60445-60455, 2018. https://doi.org/10.1109/ACCESS.2018.2875525

- [19] H.-W. Ng, V. D. Nguyen, V. Vonikakis, and S. Winkler, “Deep learning for emotion recognition on small datasets using transfer learning,” Proc. of the 2015 ACM on Int. Conf. on Multimodal Interaction, pp. 443-449, 2015. https://doi.org/10.1145/2818346.2830593

- [20] X. Zheng and N. Liu, “Color recognition of clothes based on k-means and mean shift,” IEEE Int. Conf. on Intelligent Control, Automatic Detection and High-End Equipment, pp. 49-53, 2012. https://doi.org/10.1109/ICADE.2012.6330097

- [21] Y. Chen, P. Hu, and W. Wang, “Improved K-means algorithm and its implementation based on mean shift,” 11th Int. Congress on Image and Signal Processing, Biomedical Engineering and Informatics (CISP-BMEI), 2018. https://doi.org/10.1109/CISP-BMEI.2018.8633100

- [22] D. G. Lowe, “Distinctive image features from scale-invariant keypoints,” Int. J. of Computer Vision, Vol.60, pp. 91-110, 2004. https://doi.org/10.1023/B:VISI.0000029664.99615.94

- [23] R. N. Bracewell, “The Fourier transform and its applications,” McGraw-Hill, 1986.

- [24] K. Diaz-Chito, A. Hernández-Sabaté, and A. M. López, “A reduced feature set for driver head pose estimation,” Applied Soft Computing, Vol.45, pp. 98-107, 2016. https://doi.org/10.1016/j.asoc.2016.04.027

- [25] A. Kasiński, A. Florek, and A. Schmidt, “The PUT face database,” Image Processing and Communications, Vol.13, Nos.3-4, pp. 59-64, 2008.

- [26] C.-H. Weng, Y.-H. Lai, and S.-H. Lai, “Driver drowsiness detection via a hierarchical temporal deep belief network,” Asian Conf. on Computer Vision, pp. 117-133, 2016. https://doi.org/10.1007/978-3-319-54526-4_9

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 Internationa License.

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 Internationa License.