Paper:

Moving Object Grasping Method of Mechanical Arm Based on Deep Deterministic Policy Gradient and Hindsight Experience Replay

Jian Peng*,**,***,† and Yi Yuan*,**,***

*School of Automation, China University of Geosciences

388 Lumo Road, Hongshan District, Wuhan, Hubei 430074, China

**Hubei Key Laboratory of Advanced Control and Intelligent Automation for Complex Systems

388 Lumo Road, Hongshan District, Wuhan, Hubei 430074, China

***Engineering Research Center of Intelligent Technology for Geo-Exploration, Ministry of Education

388 Lumo Road, Hongshan District, Wuhan, Hubei 430074, China

†Corresponding author

The mechanical arm is an important component of many types of robots. In certain production lines, however, conventional grasping strategies cannot meet the demands of modern production because of interference factors such as vibration, noise, and light pollution. This paper proposes a new grasping method for manipulators in automated stamping production lines. Considering the factors that affect grasping in the production environment, the deep deterministic policy gradient (DDPG) method is selected as the basic reinforcement-learning algorithm and is used to grasp moving objects on the production line. Because the conventional DDPG algorithm has a low success rate, hindsight experience replay (HER) is used to improve the agent's sample utilization efficiency and to learn more effective tracking strategies. In simulation, the optimized DDPG-HER algorithm achieves a mean success rate of 82%, which is 31% better than that of the conventional DDPG algorithm. This method provides ideas for the research and design of sorting systems used in automated stamping production lines.
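The way HER raises sample utilization can be illustrated with a minimal sketch. This is not the authors' implementation: the `Transition` fields, the 0/-1 sparse reward, the 0.05 tolerance, and the choice of the "final" relabeling strategy (reusing the last achieved position of an episode as a substitute goal) are all assumptions made for illustration. The idea is that an episode that never reached its intended goal is stored a second time with a goal it did reach, so the replay buffer contains at least some successful transitions for DDPG to learn from.

```python
from dataclasses import dataclass, replace
from typing import List, Tuple

Vec = Tuple[float, ...]


@dataclass(frozen=True)
class Transition:
    """One step of experience (hypothetical layout, for illustration only)."""
    state: Vec      # observation before the action
    action: Vec     # action applied by the policy
    achieved: Vec   # gripper position reached after the action
    goal: Vec       # goal the agent was pursuing during this step


def distance(a: Vec, b: Vec) -> float:
    """Euclidean distance between two points of equal dimension."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


def sparse_reward(achieved: Vec, goal: Vec, tol: float = 0.05) -> float:
    """Assumed sparse reward: 0 within `tol` of the goal, -1 otherwise."""
    return 0.0 if distance(achieved, goal) <= tol else -1.0


def her_relabel(episode: List[Transition]) -> List[Tuple[Transition, float]]:
    """Return each transition twice: once with its original goal and once
    relabeled with the final achieved position of the episode."""
    final_goal = episode[-1].achieved
    buffer: List[Tuple[Transition, float]] = []
    for t in episode:
        # Original experience, usually a failure under a sparse reward.
        buffer.append((t, sparse_reward(t.achieved, t.goal)))
        # Hindsight experience: pretend the final position was the goal.
        relabeled = replace(t, goal=final_goal)
        buffer.append((relabeled, sparse_reward(relabeled.achieved, final_goal)))
    return buffer


if __name__ == "__main__":
    target = (1.0, 0.0, 0.0)  # intended goal, never reached in this episode
    episode = [
        Transition((0.0,), (0.1,), (0.1, 0.0, 0.0), target),
        Transition((0.1,), (0.1,), (0.2, 0.0, 0.0), target),
        Transition((0.2,), (0.1,), (0.3, 0.0, 0.0), target),
    ]
    for transition, reward in her_relabel(episode):
        print(transition.goal, reward)
```

In this toy episode every original transition is a failure, while the relabeled copy of the last step is a success. For a moving object, the substitute goal would more plausibly be taken relative to the object's position at that time step; the fixed goal here is a simplification.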

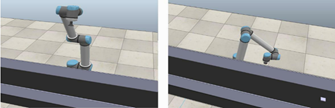

The initial positions P1 and P2 of two different mechanical arms are selected

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.