Paper:

Decision-Making of Communication Robots Through Robot Ethics

Tomomi Hashimoto*, Xingyu Tao**, Takuma Suzuki*, Takafumi Kurose*, Yoshio Nishikawa***, and Yoshihito Kagawa***

*Faculty of Engineering, Saitama Institute of Technology

1690 Fusaiji, Fukaya, Saitama 369-0293, Japan

**Graduate School of Engineering, Saitama Institute of Technology

1690 Fusaiji, Fukaya, Saitama 369-0293, Japan

***Faculty of Engineering, Takushoku University

815-1 Tatemachi, Hachioji, Tokyo 193-0985, Japan

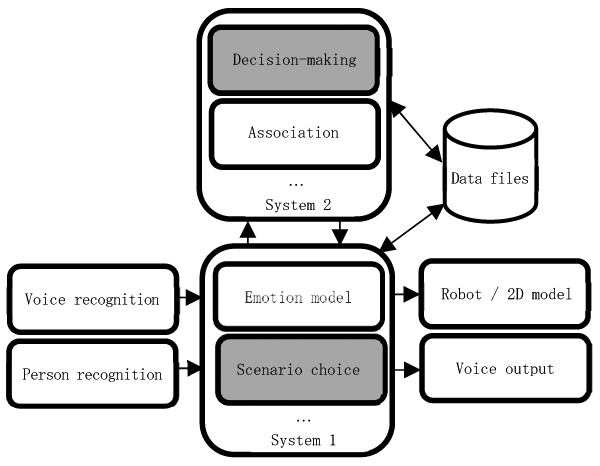

With recent developments in robotics, the ability of robots to recognize their environment has improved significantly. However, how a robot should behave in a given situation remains an unsolved problem. In this study, we propose a decision-making method for robots based on robot ethics. Specifically, we applied the two-level theory of utilitarianism, comprising SYSTEM 1 (intuitive level) for quick decisions and SYSTEM 2 (critical level) for slow but careful decisions. SYSTEM 1 consisted of a set of heuristically determined responses, and SYSTEM 2 was a rule-based discriminator. The decision-making method was as follows. First, SYSTEM 1 selected the response to the input. Next, SYSTEM 2 selected the rule that the robot’s behavior should follow, based on the amounts of happiness and unhappiness for the human, robot, situation, and society. We assumed three choices for SYSTEM 2: “non-cooperation” was assigned to asocial comments, “cooperation” to cases in which the amount of happiness was judged high enough to exceed the status quo bias, and “withholding” to all other cases. When SYSTEM 2 chose cooperation or non-cooperation, the behavior selected by SYSTEM 1 was modified. An impression evaluation experiment was conducted, and the effectiveness of the proposed method was demonstrated.
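The two-stage flow described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the response table, the `Utility` type, the net-happiness sum, the threshold value `status_quo_bias=1.0`, and the replacement phrases are all hypothetical choices made only to show the control flow.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    COOPERATION = "cooperation"
    NON_COOPERATION = "non-cooperation"
    WITHHOLDING = "withholding"


@dataclass
class Utility:
    """Happiness and unhappiness for one party (hypothetical scale)."""
    happiness: float
    unhappiness: float


# Hypothetical heuristic table standing in for SYSTEM 1.
SYSTEM1_RESPONSES = {
    "greeting": "Hello!",
    "request": "Let me think about that.",
}
DEFAULT_RESPONSE = "I see."


def system1(input_kind: str) -> str:
    """Quick, intuitive level: look up a heuristically determined response."""
    return SYSTEM1_RESPONSES.get(input_kind, DEFAULT_RESPONSE)


def system2(is_asocial: bool, utilities: dict, status_quo_bias: float = 1.0) -> Decision:
    """Slow, critical level: rule-based choice among three decisions.

    Assumption: net happiness is summed over human, robot, situation, and
    society, then compared against a fixed status quo bias threshold.
    """
    if is_asocial:
        return Decision.NON_COOPERATION
    net = sum(u.happiness - u.unhappiness for u in utilities.values())
    if net > status_quo_bias:
        return Decision.COOPERATION
    return Decision.WITHHOLDING


def decide(input_kind: str, is_asocial: bool, utilities: dict):
    """Run SYSTEM 1, then let SYSTEM 2 modify the response if needed."""
    response = system1(input_kind)
    decision = system2(is_asocial, utilities)
    # Only cooperation and non-cooperation modify the SYSTEM 1 behavior;
    # withholding leaves it unchanged.
    if decision is Decision.COOPERATION:
        response = "Sure, I will help."       # hypothetical phrase
    elif decision is Decision.NON_COOPERATION:
        response = "I would rather not."      # hypothetical phrase
    return decision, response


if __name__ == "__main__":
    utilities = {
        "human": Utility(2.0, 0.5),
        "robot": Utility(0.5, 0.5),
        "situation": Utility(0.0, 0.0),
        "society": Utility(0.5, 0.0),
    }
    print(decide("request", False, utilities))
```

In this sketch the asocial check takes priority over the happiness comparison, so an asocial comment yields non-cooperation regardless of the utilities; that ordering is also an assumption drawn from the wording of the three rules.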

Structure of Communication Robot LEI

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
