Paper:

Fault-Diagnosing Deep-Visual-SLAM for 3D Change Object Detection

Kanji Tanaka

Graduate School of Engineering, University of Fukui

3-9-1 Bunkyo, Fukui, Fukui 910-8507, Japan

Although image change detection (ICD) methods provide good detection accuracy in many scenarios, most existing methods rely on place-specific background modeling. The time and space costs of such place-specific models are prohibitive for large-scale scenarios, such as long-term robotic visual simultaneous localization and mapping (SLAM). Therefore, we propose a novel ICD framework customized for long-term SLAM. This study is inspired by the multi-map-based SLAM framework, in which multiple maps diagnose one another and hence do not require any explicit background model. We extend this multi-map-based diagnosis approach to a more generic single-map-based object-level diagnosis framework (i.e., ICD), in which the self-localization module of SLAM, which serves as the change object indicator, can be used in its original form. Furthermore, we consider map diagnosis on a state-of-the-art deep convolutional neural network (DCN)-based SLAM system (instead of on conventional bag-of-words or landmark-based systems), in which the black-box nature of the DCN complicates the diagnosis problem. Additionally, we consider a three-dimensional point cloud (PC)-based (instead of typical monocular color image-based) SLAM and, for the first time, adopt a state-of-the-art scan context PC descriptor for map diagnosis.
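For readers unfamiliar with the scan context descriptor mentioned above, the following is a minimal sketch of its basic idea: the point cloud is divided into rings and sectors around the sensor, and each polar bin stores the maximum point height. The function name `scan_context`, the default bin counts, and the range limit are illustrative assumptions rather than values taken from this paper, and leaving empty bins at zero is a simplification.

```python
import math

def scan_context(points, num_rings=20, num_sectors=60, max_range=80.0):
    """Build a ring-by-sector matrix holding the maximum height per polar bin.

    `points` is an iterable of (x, y, z) tuples in the sensor frame.
    The parameter defaults are illustrative assumptions.
    """
    # Empty bins stay at 0.0 (a simplification: heights below 0 are ignored).
    desc = [[0.0] * num_sectors for _ in range(num_rings)]
    two_pi = 2.0 * math.pi
    for x, y, z in points:
        r = math.hypot(x, y)
        if r >= max_range:
            continue  # discard points beyond the descriptor's radius
        theta = math.atan2(y, x) % two_pi  # azimuth mapped into [0, 2*pi)
        ring = min(int(r / max_range * num_rings), num_rings - 1)
        sector = min(int(theta / two_pi * num_sectors), num_sectors - 1)
        desc[ring][sector] = max(desc[ring][sector], z)
    return desc
```

Because the columns correspond to azimuth, a rotation of the sensor appears as a circular column shift of the matrix, which is why such descriptors are usually compared over all column shifts.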

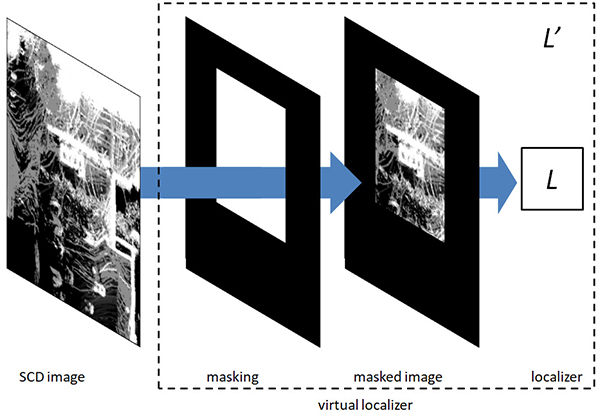

Masking input images for subimage-level change detection using a deep visual SLAM system
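One plausible reading of this caption can be sketched as follows, assuming the localizer is treated as a black box that returns a confidence score: each subimage cell is masked in turn, and the change in the score is recorded. The helper names `mask_cell` and `masking_scores`, the grid layout, the fill value, and the rule that a score gain hints at a changed region are all assumptions made for illustration and are not the paper's stated procedure.

```python
def mask_cell(image, top, left, height, width, fill=0.0):
    """Return a copy of `image` with one rectangular cell overwritten by `fill`."""
    masked = [row[:] for row in image]
    for r in range(top, min(top + height, len(image))):
        for c in range(left, min(left + width, len(image[0]))):
            masked[r][c] = fill
    return masked

def masking_scores(image, localize_score, cell=2):
    """Map each grid cell to the score gain obtained by masking that cell.

    `localize_score` is a hypothetical black-box callable: it takes an image
    (list of rows of floats) and returns a localization confidence.
    """
    base = localize_score(image)  # score of the unmasked query image
    gains = {}
    for top in range(0, len(image), cell):
        for left in range(0, len(image[0]), cell):
            masked = mask_cell(image, top, left, cell, cell)
            gains[(top, left)] = localize_score(masked) - base
    return gains
```

Under this assumption, cells whose masking raises the localizer's confidence are candidates for containing a changed object, and the localizer itself needs no modification.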

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
